The Ultimate Guide to Global Research Platforms

Global Research Platform: Stop Data Silos From Killing Your Breakthroughs

Global research platforms are fundamentally reshaping how scientists, healthcare organizations, and pharmaceutical companies access and analyze data across continents. These platforms enable secure, high-speed collaboration on data-intensive research—connecting imaging, clinical records, genomics, and other modalities at speeds of 100 Gbps or higher—without requiring data to physically move between institutions.

Key Components of a Global Research Platform:

- High-speed infrastructure enabling data transfers at gigabits to terabits per second

- Federated architecture that allows in-situ analysis without moving data across borders

- Multi-modal data support including EHR, imaging, genomics, clinical trials, and biomarkers

- Secure collaboration environments maintaining GDPR+ compliance and data sovereignty

- International partnerships spanning 36+ countries with access to 187M+ patient lives

- Proven impact accelerating cohort recruitment by 60% and supporting 20+ FDA/EMA submissions

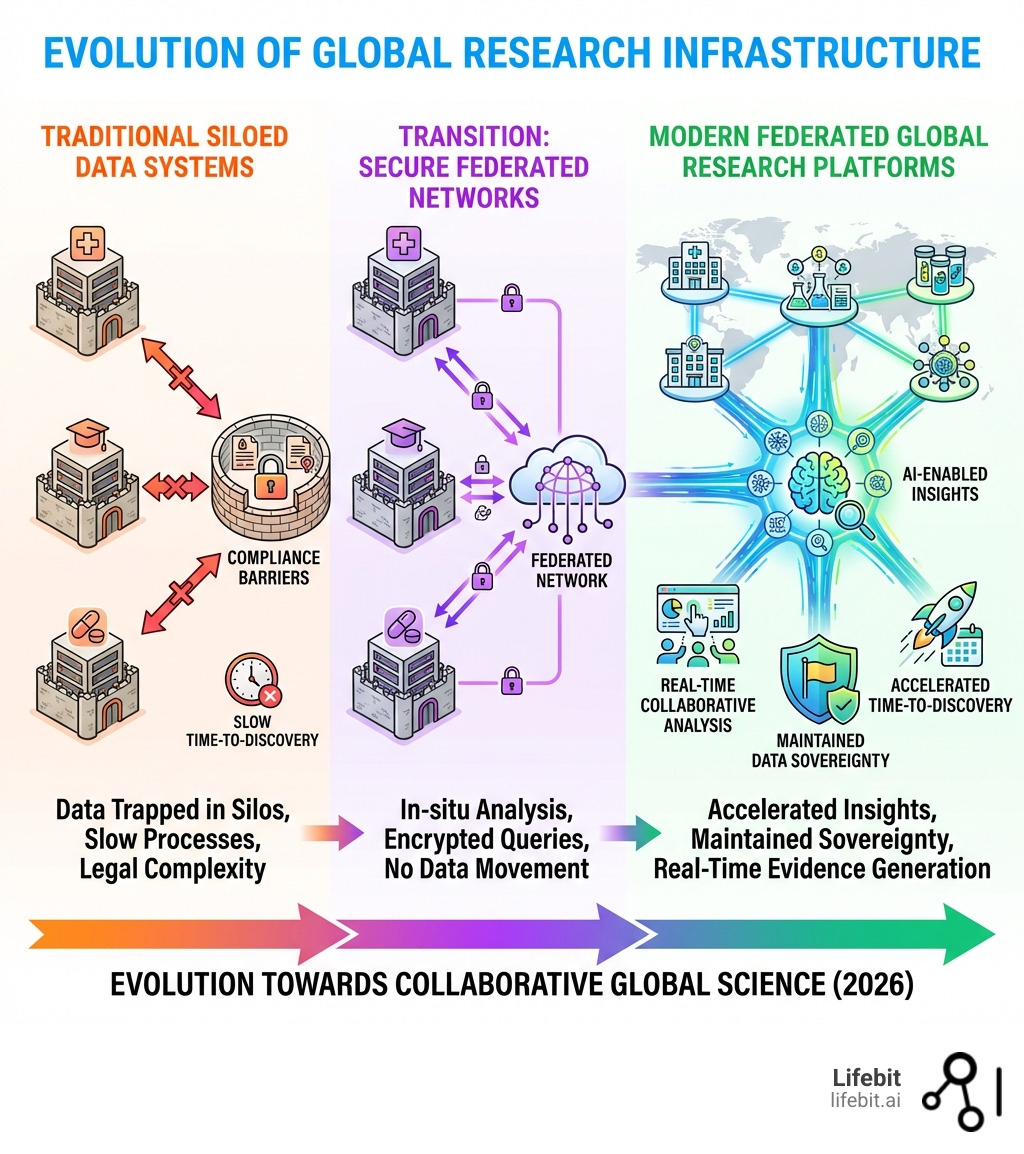

The Crisis of Data Gravity and Institutional Silos

The challenge facing research leaders today is stark: critical health data remains trapped in institutional silos, slowing drug discovery, delaying regulatory decisions, and preventing breakthrough insights that could save lives. A related phenomenon, often called “Data Gravity,” makes the problem worse: as datasets grow, they become increasingly difficult and expensive to move. Traditional approaches require physically moving sensitive patient data across borders, a process that’s slow, expensive, legally complex, and often impossible under modern privacy regulations like the EU’s GDPR or China’s PIPL.

Furthermore, the sheer volume of modern biomedical data is staggering. A single whole-genome sequence can exceed 100GB, so a cohort of 10,000 patients can reach a petabyte from sequencing alone. When you add high-resolution medical imaging and longitudinal electronic health records (EHR), the infrastructure required to simply “copy” this data to a central server becomes a multi-million-dollar bottleneck. Exponentially increasing network bandwidth demands and the need for real-time evidence generation have exposed fundamental gaps in how global research infrastructure operates.

As CEO and Co-founder of Lifebit, I’ve spent over 15 years building computational biology and AI solutions that break down these barriers, from contributing to Nextflow, the breakthrough workflow framework used worldwide in genomic analysis, to establishing federated platforms that now provide secure global research capabilities for public sector institutions and pharmaceutical organizations across multiple continents.


Global Research Platform: Why Data Silos Kill Research and How to Fix It

The Global research platform (GRP) isn’t just a fancy name for a website; it is an international scientific collaboration dedicated to building the high-speed “superhighways” of the research world. Led by visionaries at the International Center for Advanced Internet Research (iCAIR) at Northwestern University, the Electronic Visualization Laboratory (EVL) at the University of Illinois Chicago, and the Qualcomm Institute at UC San Diego, the GRP’s mission is to create a seamless, worldwide environment for data-intensive science.

Back in 2019, the 1st Global Research Platform Workshop set the stage for what we see today: a move away from isolated clusters toward a unified, global fabric. At Lifebit, we’ve taken this mission to heart by developing federation services that allow researchers to query data where it lives. Think of it like this: instead of trying to ship a million delicate glass vases (your data) across the ocean to a museum, you simply send the curators to the vases. It’s safer, faster, and much more efficient.
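To make “query data where it lives” concrete, here is a minimal in-process sketch, assuming each institution is modeled as a Python object that holds its own records and only ever returns an aggregate count. The names `DataNode`, `count_eligible`, and `federated_count`, and the record fields, are hypothetical illustrations, not Lifebit’s or the GRP’s API; in a real deployment the “query” would be an approved, containerized workflow sent over the network rather than a Python function.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Record = Dict[str, object]
Query = Callable[[List[Record]], int]


@dataclass
class DataNode:
    """Hypothetical data provider; its records stay inside this object."""
    name: str
    records: List[Record] = field(repr=False)  # never returned to the caller

    def run(self, query: Query) -> int:
        # The query travels to the data; only its integer result travels back.
        return query(self.records)


def count_eligible(records: List[Record]) -> int:
    """Example query: patients aged 50+ who carry a (hypothetical) variant flag."""
    return sum(1 for r in records if r["age"] >= 50 and r["has_variant"])


def federated_count(nodes: List[DataNode], query: Query) -> Dict[str, int]:
    """Dispatch the same query to every node and collect per-node counts."""
    return {node.name: node.run(query) for node in nodes}


nodes = [
    DataNode("hospital_a", [{"age": 62, "has_variant": True},
                            {"age": 45, "has_variant": True}]),
    DataNode("biobank_b", [{"age": 71, "has_variant": True},
                           {"age": 55, "has_variant": False},
                           {"age": 58, "has_variant": True}]),
]
per_node = federated_count(nodes, count_eligible)
print(per_node, "total:", sum(per_node.values()))
```

The researcher sees only the per-site counts and their total, never the individual rows, which is the “send the curators to the vases” idea in code.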

The Primary Mission of Global Scientific Collaboration: Speed Without Compromise

The GRP operates as a “virtual organization.” It doesn’t own all the cables, but it integrates the world’s best testbeds and high-speed networks. The primary goal is to establish a worldwide “Science DMZ.” In networking terms, a Science DMZ is a portion of a network designed specifically for high-performance scientific applications, separate from the “business as usual” traffic like emails and video calls.

By routing science traffic around traditional firewalls that inspect every packet (which can introduce packet loss and throttle throughput on long-distance links), a Science DMZ allows for the frictionless flow of massive datasets. This is critical for projects like the Large Hadron Collider or global genomic surveillance, where a slow or stalled transfer can hold up complex computational workflows.
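Why does a little packet loss matter so much? A well-known back-of-the-envelope answer is the Mathis et al. (1997) model, which bounds steady-state throughput for loss-based TCP (Reno-style) at roughly (MSS / RTT) × (1.22 / √p). The sketch below applies it, assuming a 1460-byte MSS and an illustrative 0.01% loss rate; loss-based TCP is assumed, and newer algorithms such as BBR react to loss differently, so treat this as an illustration rather than a prediction for any specific link.

```python
import math

# Constant from the Mathis et al. (1997) model of TCP Reno: sqrt(3/2) ~= 1.22.
MATHIS_C = math.sqrt(3 / 2)


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate ceiling on steady-state TCP Reno throughput, in bits per second."""
    if not 0 < loss_rate < 1:
        raise ValueError("loss_rate must be between 0 and 1 (exclusive)")
    return (mss_bytes * 8 / rtt_s) * (MATHIS_C / math.sqrt(loss_rate))


MSS = 1460   # bytes, typical for a 1500-byte Ethernet MTU
LOSS = 1e-4  # assumed: 0.01% packet loss, e.g. from an overloaded firewall
for label, rtt in [("campus path, 1 ms RTT", 0.001),
                   ("trans-Atlantic path, 100 ms RTT", 0.100)]:
    mbps = mathis_throughput_bps(MSS, rtt, LOSS) / 1e6
    print(f"{label}: ~{mbps:,.0f} Mbps")
```

With the same loss rate, the short path sustains roughly 1.4 Gbps while the 100 ms path drops to about 14 Mbps: throughput falls in proportion to round-trip time, which is why loss that is harmless on a campus network cripples intercontinental transfers.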

When we look at the Nature Index, which tracks high-quality research output, we see global leaders such as the Chinese Academy of Sciences and Harvard University, institutions whose research generates staggering amounts of data. To keep pace, the GRP mission focuses on:

- Customizing international fabrics: Building specialized network paths for scientific workflows that prioritize throughput over simple connectivity.

- Distributed cyberinfrastructure: Ensuring that computing power and storage are available exactly where and when they are needed, utilizing edge computing to process data closer to the source.

- Next-generation services: Moving beyond simple file transfers to complex, distributed data analysis where algorithms are dispatched to remote data nodes.

Why One Size Doesn’t Fit All: Differentiating GRP from Specialized Industry Platforms

It is easy to get confused by the many “platforms” out there. Some are built for specific industries, like animal health research, while others are purpose-built for commercial clinical trials. The Global research platform differentiates itself through its nonprofit, modular nature. As a 501(c)(3)-supported initiative, it focuses on “shared building blocks.”

This modularity is essential because scientific needs evolve faster than monolithic software. A researcher studying rare pediatric diseases needs different tools than a physicist studying dark matter. By providing a foundation of interoperable building blocks—such as standardized authentication protocols and high-speed data transfer nodes (DTNs)—the GRP allows specialized communities to build their own custom environments.

This is exactly how we approach our Research Collaboration Platform. We don’t believe in “walled gardens.” We believe in interoperable tools that allow a researcher in London to collaborate with a peer in New York or Singapore as if they were in the same room, using the specific analytical tools (like R, Python, or specialized AI models) that their field requires.

Global Research Platform: Move Petabyte-Scale Data 1,000x Faster

If you’ve ever tried to upload a massive video file to the cloud, you know the frustration of watching a progress bar crawl. Now, imagine that file is a petabyte-scale genomic dataset or a library of high-resolution MRIs. Standard internet speeds just won’t cut it.

The Global research platform enables high-performance data transfers at 100 Gbps or higher. To put that in perspective, 100 Gbps is roughly 1,000 times faster than a typical 100 Mbps home internet connection. This isn’t just about speed for speed’s sake; it’s about making impossible research possible. For instance, transferring a 1-petabyte dataset over a standard 1 Gbps connection would take roughly 93 days even at full line rate; on a GRP-enabled 100 Gbps line, it takes about 22 hours.
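The transfer-time arithmetic is easy to check. This sketch assumes decimal units (1 PB = 10^15 bytes, roughly the 10,000-genome cohort described earlier at about 100 GB each) and an optional, hypothetical `efficiency` factor standing in for protocol overhead and imperfect link utilization.

```python
DECIMAL_PETABYTE = 10**15  # bytes


def transfer_time_s(size_bytes: float, link_bps: float, efficiency: float = 1.0) -> float:
    """Seconds to move size_bytes over a link_bps link at the given efficiency (0, 1]."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return size_bytes * 8 / (link_bps * efficiency)


for label, bps in [("1 Gbps", 1e9), ("100 Gbps", 100e9)]:
    t = transfer_time_s(DECIMAL_PETABYTE, bps)
    print(f"{label}: {t / 86400:.1f} days ({t / 3600:.1f} hours)")
```

At full line rate this gives about 92.6 days at 1 Gbps and about 22.2 hours at 100 Gbps. These are best cases: at an assumed 80% efficiency the 100 Gbps figure grows to roughly 28 hours, and using binary units (1 PiB = 2^50 bytes) pushes the 1 Gbps figure to about 104 days.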

The upcoming 6th Global Research Platform Workshop (6GRP) in Chicago will showcase how these networks are scaling from 100 Gbps toward terabits per second. For those of us managing sensitive biomedical data, such speeds must be paired with a Trusted Research Environment (TRE) to ensure that while the data moves (or is accessed) fast, it never reaches the wrong hands.

The Global research platform Architecture: From 100 Gbps to Terabits

The architecture of the GRP is built on the Global Lambda Integrated Facility (GLIF). This is a world-scale network fabric donated by participants to support large-scale experimentation. It allows for:

- High-performance computing (HPC) integration: Connecting supercomputers across continents so that a researcher can run a simulation in Japan using data stored in Norway.

- Ubiquitous services: Making advanced networking tools available to domain scientists who aren’t necessarily “tech geeks.” This includes automated path provisioning and performance monitoring.

- Real-time transport: Handling data streams from telescopes, sensors, and sequencers in real-time, allowing for immediate analysis of transient scientific events.

One of the key technical breakthroughs within this architecture is the use of Data Transfer Nodes (DTNs). These are purpose-built servers designed specifically to move data over long-distance, high-latency networks. By using tuned TCP stacks and congestion control algorithms like BBR (Bottleneck Bandwidth and Round-trip propagation time), DTNs can saturate high-bandwidth links that untuned, general-purpose hosts cannot fill.
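Part of DTN tuning comes down to the bandwidth-delay product (BDP): to keep a link full, the sender must have at least link rate × round-trip time worth of data in flight, so TCP buffers must be at least that large. The sketch below assumes a 100 Gbps path with a 100 ms round trip and compares it with an assumed 6 MiB buffer ceiling, a common default for `net.ipv4.tcp_rmem` on recent Linux kernels (check your own system; DTN tuning guides raise this and `net.core.rmem_max`).

```python
def bdp_bytes(link_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the link full."""
    return link_bps * rtt_s / 8


def window_limited_bps(window_bytes: float, rtt_s: float) -> float:
    """Maximum TCP throughput when the window (buffer) caps bytes in flight."""
    return window_bytes * 8 / rtt_s


LINK_BPS = 100e9            # assumed 100 Gbps path between two DTNs
RTT_S = 0.100               # assumed 100 ms intercontinental round-trip time
UNTUNED_WINDOW = 6 * 2**20  # assumed 6 MiB maximum buffer on an untuned host

print(f"Window needed to fill the link: {bdp_bytes(LINK_BPS, RTT_S) / 1e9:.2f} GB")
print(f"Throughput with a 6 MiB window: "
      f"{window_limited_bps(UNTUNED_WINDOW, RTT_S) / 1e6:,.0f} Mbps")
```

Filling the link needs about 1.25 GB in flight, while a 6 MiB window caps a single stream at roughly 503 Mbps, about half a percent of the link. This is why DTNs pair large buffers with parallel streams and modern congestion control.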

Events like the IEEE International Conference on eScience 2025 are where these architectural breakthroughs are debated and refined. We are moving toward a world where “terabit” is the new standard, and the GRP is the blueprint for that future.

Overcoming Cyberinfrastructure Challenges in 2026: No More Data Deadlocks

As we look toward 2026, the challenges aren’t just technical—they’re organizational. How do we ensure that a researcher in Canada can use a tool developed in Israel on data stored in the UK? This is the “Data Deadlock” problem, where technical, legal, and semantic barriers prevent collaboration even when the physical pipes are in place.

The answer lies in “shared building blocks” and the rise of federated data platforms. These platforms address the “Data Deadlock” by:

- Standardizing APIs: Ensuring different software can talk to each other using standards from GA4GH (Global Alliance for Genomics and Health), such as its Beacon and Data Repository Service (DRS) APIs.

- Automating Workflows: Using workflow managers like Nextflow and Snakemake, typically with containerized software, so that an analysis run in one country produces the same result when rerun in another on different hardware.

- Optimizing Bandwidth: Intelligently routing data to avoid bottlenecks and using “data-aware” scheduling to move computation to the data whenever possible.

- Semantic Interoperability: Mapping disparate data formats to common models like OMOP (Observational Medical Outcomes Partnership), ensuring that “heart attack” in one database means the same thing as “myocardial infarction” in another.
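“Data-aware” scheduling can be illustrated with a deliberately simplified rule: run the job wherever the least data has to be copied in. The sketch below assumes hypothetical sites, dataset names, sizes, and bandwidths; real schedulers also weigh compute capacity, queue times, and governance rules, and in a federated setting some datasets may not be allowed to move at all.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Site:
    """Hypothetical compute site in a federated network."""
    name: str
    datasets: FrozenSet[str]  # datasets already stored at this site
    ingress_bps: float        # usable inbound bandwidth (assumed)


def staging_seconds(site: Site, needed: Dict[str, int]) -> float:
    """Time to copy in every required dataset (size in bytes) the site lacks."""
    missing = sum(size for name, size in needed.items() if name not in site.datasets)
    return missing * 8 / site.ingress_bps


def choose_site(sites: List[Site], needed: Dict[str, int]) -> Site:
    """Data-aware choice: run where the least data has to move."""
    return min(sites, key=lambda s: staging_seconds(s, needed))


needed = {"wgs_cohort": 10**15, "mri_archive": 2 * 10**14}  # bytes (illustrative)
sites = [
    Site("london", frozenset({"wgs_cohort"}), 100e9),
    Site("singapore", frozenset({"mri_archive"}), 100e9),
]
best = choose_site(sites, needed)
print(best.name, f"{staging_seconds(best, needed) / 3600:.1f} h of staging")
```

Here the job goes to the site that already holds the 1 PB genomic cohort, so only the smaller imaging archive would need staging (about 4.4 hours at 100 Gbps instead of about 22).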

Global Research Platform: Access 187M Patient Records Without Moving Data

Scientific discovery is a team sport, but the “playing field” is often divided by strict borders. The Global research platform is designed to bridge these gaps. Today, the GRP network facilitates access to 187M+ patient lives across 36+ countries. Notably, over 90% of these patients are located outside the US, providing the diverse data needed for truly global health insights. This diversity is not just a “nice to have”; it is a scientific necessity to ensure that new drugs and treatments work across different ethnicities and genetic backgrounds.

Whether it’s searching the arXiv.org e-Print archive for the latest physics papers or accessing a Lifebit Trusted Research Environment for genomic analysis, the goal is the same: frictionless access to knowledge.

Handling Multi-Modal Data: Beyond Simple Spreadsheets

Modern research doesn’t just use one type of data. To understand a disease like Alzheimer’s or various forms of cancer, you might need to synthesize:

- Omics: DNA, RNA, and protein sequences that reveal the molecular basis of disease.

- Clinical EHR: Electronic Health Records, doctor’s notes, and prescription histories that provide the real-world context of patient health.

- Imaging: MRI, PET, CT scans, and even retinal images that show the physical progression of pathology.

- Digital/Voice: Direct digital voice acquisition and wearable data (like heart rate or sleep patterns) that offer continuous monitoring outside the clinic.

For example, scientific research on spinal cord injury often requires combining clinical registries with imaging and biomarker data. A Global research platform must be “modality-agnostic,” meaning it treats a brain scan with the same level of precision and security as a genomic sequence. At Lifebit, our platform is built to harmonize these diverse types of data using advanced ETL (Extract, Transform, Load) pipelines that map raw data to the OMOP Common Data Model, turning a “data swamp” into a “data lakehouse” where insights can be fished out with ease.
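A single ETL “transform” step might look like the sketch below: raw diagnosis rows from two sites, one saying “heart attack” and the other “myocardial infarction,” both end up with the same standard concept in an OMOP-style `condition_occurrence` record. The column names `person_id`, `condition_concept_id`, `condition_start_date`, and `condition_source_value` follow the OMOP CDM, and concept_id 0 is OMOP’s convention for “no matching concept.” The raw input schema, the lookup dictionary, and the concept ID value are hypothetical placeholders; a real pipeline would draw mappings from the OHDSI standardized vocabularies.

```python
from datetime import date
from typing import Dict

# Placeholder standard concept; a real ETL would look this up in the OHDSI
# standardized vocabularies rather than hard-coding it.
MYOCARDIAL_INFARCTION = 1_000_001  # hypothetical ID, NOT a real OMOP concept_id
NO_MATCHING_CONCEPT = 0            # OMOP convention for unmapped source values

# Hypothetical source-term lookup covering synonyms used at different sites.
SOURCE_TO_CONCEPT: Dict[str, int] = {
    "heart attack": MYOCARDIAL_INFARCTION,
    "myocardial infarction": MYOCARDIAL_INFARCTION,
}


def to_condition_occurrence(raw: Dict[str, str]) -> Dict[str, object]:
    """Transform one raw EHR row (hypothetical schema) into an OMOP-style record."""
    term = raw["diagnosis"].strip().lower()
    return {
        "person_id": int(raw["patient_id"]),
        "condition_concept_id": SOURCE_TO_CONCEPT.get(term, NO_MATCHING_CONCEPT),
        "condition_start_date": date.fromisoformat(raw["diagnosed_on"]),
        "condition_source_value": raw["diagnosis"],  # original text kept for audit
    }


site_a_row = {"patient_id": "17", "diagnosis": "Heart attack", "diagnosed_on": "2021-03-04"}
site_b_row = {"patient_id": "42", "diagnosis": "Myocardial infarction ",
              "diagnosed_on": "2020-11-30"}
for row in (site_a_row, site_b_row):
    print(to_condition_occurrence(row))
```

Keeping the untouched source text alongside the mapped concept lets analysts query on the standard concept while auditors can still trace each record back to what the site originally recorded.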

Facilitating Secure International Data Sharing: The GDPR+ Standard

You can’t talk about global data without talking about privacy. The GRP and its partners adhere to what we call “GDPR+”—meeting the strict requirements of the General Data Protection Regulation while also accounting for local laws in countries like Canada (PIPEDA), Singapore (PDPA), and the USA (HIPAA).

With 150+ data partners, the focus is on Trusted Research Environments (TREs). These are secure “clean rooms” or “air-gapped” digital environments where data can be analyzed but never downloaded or leaked. This setup gives data owners, such as national health services or private biobanks, the confidence to share their assets, knowing they maintain full sovereignty and control. In a federated GRP model, the data never leaves the jurisdiction of the provider; only the aggregated, non-identifiable results of the analysis are shared with the researcher.
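“Aggregated, non-identifiable results” usually implies an output check before anything leaves the environment. One common and simple rule is small-cell suppression: any count below a minimum threshold is withheld because it could point to identifiable individuals. The sketch below assumes a hypothetical threshold of 10 and hypothetical group labels; in practice each data owner sets its own rules.

```python
from typing import Dict, Optional

# Hypothetical threshold; each data owner sets its own output rules.
MIN_CELL_COUNT = 10


def release_counts(counts: Dict[str, int],
                   min_cell: int = MIN_CELL_COUNT) -> Dict[str, Optional[int]]:
    """Return aggregate counts, replacing any small (re-identifiable) cell with None."""
    return {group: (n if n >= min_cell else None) for group, n in counts.items()}


local_result = {"age_18_39": 124, "age_40_64": 7, "age_65_plus": 58}
print(release_counts(local_result))
```

Suppression alone is not airtight: if the overall total is also released, a hidden cell can be recovered by subtraction, so real output checks often add secondary suppression, rounding, or statistical noise.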

Global Research Platform: How NHS Southampton Cut Recruitment Time by 60%

All this talk of 100 Gbps and federated architecture matters because it saves lives. The real-world impact of the Global research platform is measurable. We’ve seen technology deployments support 20+ FDA/EMA submissions, proving that federated research is now a “regulatory-grade” reality. This is a major shift; previously, regulators were skeptical of data they couldn’t physically see or touch. Today, the audit trails and reproducibility provided by platforms like Lifebit have made federated evidence an increasingly accepted source for post-market surveillance and rare disease validation.

Our Lifebit Federated Biomedical Data Platform is a prime example of this in action, allowing pharmaceutical companies to validate AI models using real-world data from multiple continents simultaneously without the risk of data exposure.

Case Study: How NHS Southampton Cut Recruitment Time by 60%

One of the most powerful examples of this infrastructure in action involves NHS Southampton. In traditional clinical trials, finding a specific cohort of patients (e.g., those with a specific genetic mutation and a specific clinical history) can take years of manual record searching across dozens of hospitals. By using a federated environment across 100 institutions, they were able to:

- Accelerate cohort recruitment by 60%: Finding the right patients for a study in record time by running automated queries across the entire network.

- Access Phenotypic Data: Linking clinical traits (phenotypes) with genetic markers (genotypes) without moving the data, allowing for more precise patient stratification.

- Improve Diversity: Reaching patient populations that were previously “hidden” in local silos, ensuring that the research findings are applicable to a broader demographic.

This is the power of a Trusted Research Environment. It turns a multi-year recruitment slog into a streamlined, digital process that benefits both the researcher and the patient waiting for a cure.

Milestones in Global Research and Future Workshops

The journey of the GRP is marked by constant evolution. From the early days of the Global Lambda Integrated Facility to the upcoming 2025 Chicago workshop, the focus has shifted from “Can we connect these pipes?” to “How can we use these pipes to cure cancer?”

Key milestones include:

- 2015: The establishment of 100 Gbps trans-Atlantic links dedicated to research.

- 2019: The first GRP workshop, focusing on the integration of HPC and high-speed networking.

- 2022: The widespread adoption of federated learning, allowing AI models to be trained on global datasets without data movement.

- 2025 and Beyond: The move toward Terabit-per-second networking and the integration of quantum key distribution (QKD) for ultra-secure data sharing.
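Training “on global datasets without data movement” typically means each site trains a copy of the model locally and shares only its parameters, which a coordinator then combines. The sketch below shows the aggregation step of federated averaging (FedAvg, McMahan et al., 2017), one widely used approach, with weights flattened into plain lists of floats. It is an illustrative assumption, not a description of any specific platform’s method, and the local training step is omitted.

```python
from typing import List, Sequence, Tuple

Weights = List[float]


def fed_avg(updates: Sequence[Tuple[Weights, int]]) -> Weights:
    """Aggregate per-site model weights, weighting each site by its sample count.

    Each update is (weights trained locally at one site, number of local samples).
    Only these numbers leave a site; the training data never does.
    """
    if not updates:
        raise ValueError("need at least one site update")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("sites reported no training samples")
    dim = len(updates[0][0])
    if any(len(w) != dim for w, _ in updates):
        raise ValueError("all sites must share the same model shape")
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


site_a = ([1.0, 2.0], 100)  # illustrative weights and sample counts
site_b = ([3.0, 4.0], 300)
print(fed_avg([site_a, site_b]))  # [2.5, 3.5]
```

Weighting by sample count keeps a small site from pulling the global model as hard as a large one. Shared weights can still leak information in some cases, which is why deployments often add secure aggregation or differential privacy on top.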

The Nature Index 2025 Science Cities supplement highlights how cities like New York, London, and Beijing are becoming hubs for this interconnected research. The GRP provides the “nervous system” for these science cities, ensuring that a breakthrough in one can be instantly validated in another, creating a global feedback loop of scientific excellence.

Global Research Platform: Your Top Privacy and Speed Questions Answered

What is the primary mission of the GRP?

The primary mission is to enable international scientific collaboration through high-performance infrastructure. It aims to provide a worldwide Science DMZ for data-intensive research, ensuring that scientists can work on massive datasets across continents with minimal latency and maximum security.

How does a global research platform handle data privacy?

Most modern platforms, including those we build at Lifebit, use a federated architecture. This means the data stays behind the firewall of the institution that owns it. Researchers send their analysis code to the data, rather than pulling the data to their own computers. This ensures compliance with GDPR and other local privacy laws while still allowing for global collaboration.

What types of data modalities are supported?

A robust Global research platform supports nearly all scientific data types, including:

- Multi-omics (Genomics, Proteomics, etc.)

- Clinical trial data

- Medical imaging (MRI, CT, Ultrasound)

- Wearable and digital health data

- Biosample metadata

You can learn more about how we secure these modalities in our Lifebit Trusted Research Environment.

Global Research Platform: Stop Moving Data and Start Moving Insights

The era of the “lone scientist” working in a basement lab is over. Today, discovery happens at the intersection of global collaboration and high-speed technology. At Lifebit, we are proud to be at the forefront of this movement. Our next-generation federated AI platform doesn’t just connect data; it unlocks its potential.

By providing secure, real-time access to global biomedical and multi-omic data, we are helping biopharma, governments, and public health agencies move faster than ever. Whether it’s through our Trusted Data Lakehouse (TDL) or our R.E.A.L. (Real-time Evidence & Analytics Layer), we are delivering the AI-driven safety surveillance and secure collaboration the world needs.

The Global research platform is more than just infrastructure; it is the foundation for the next century of scientific excellence. Are you ready to stop moving data and start moving insights? Explore the Lifebit Platform today and join the federated revolution.