Why Distributed Data Analysis is the Secret Sauce for Massive Datasets

How Distributed Data Analysis Cuts Research Time by 90% for Massive Datasets

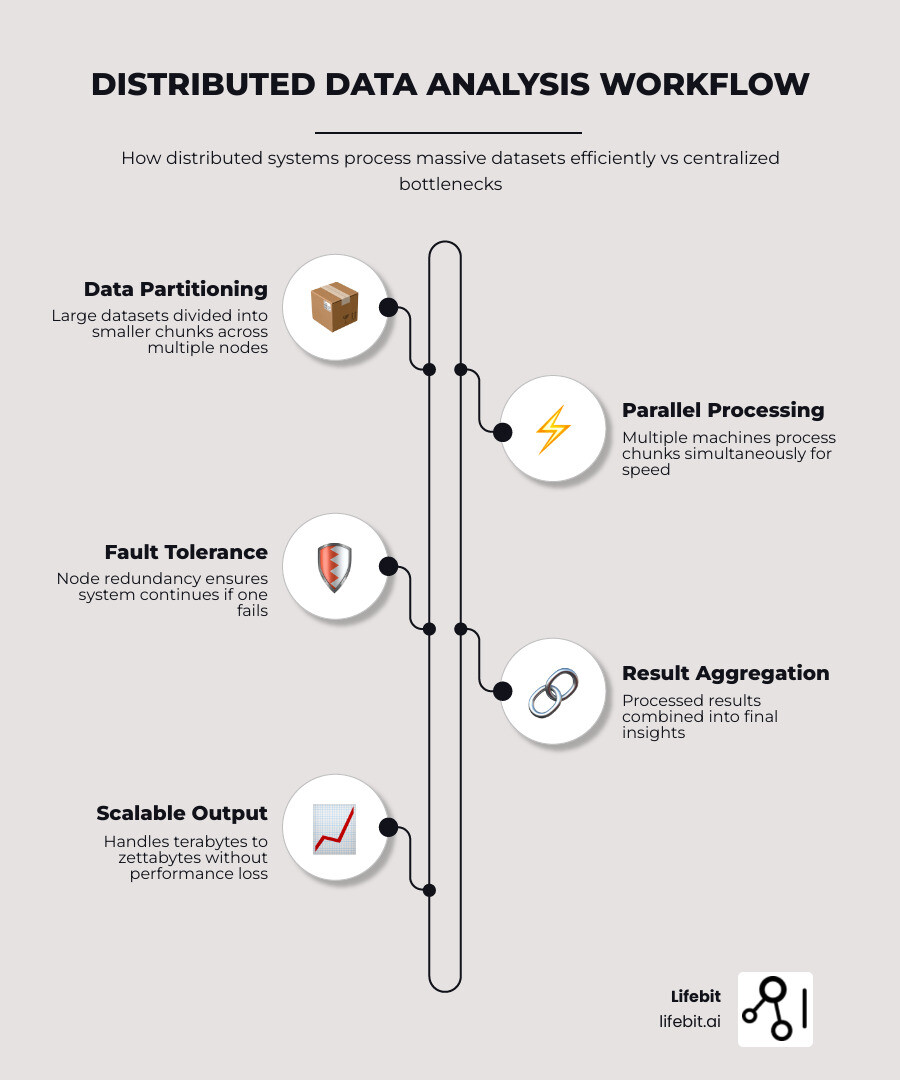

Distributed data analysis is a method of examining large datasets by spreading data processing across multiple computers or nodes instead of relying on a single machine. Here’s what you need to know:

- What it is: A computing approach that divides large datasets into smaller chunks, processes them in parallel across multiple machines, and combines the results

- Why it matters: Enables organizations to process terabytes to zettabytes of data that would overwhelm centralized systems

- Key difference: Unlike traditional centralized analysis that creates bottlenecks, distributed systems use parallel processing to dramatically reduce computation time

- Core benefit: Provides fault tolerance—if one node fails, the system continues operating without data loss

The problem is clear: digital data doubles roughly every two years, yet traditional centralized architectures struggle to keep up. When monitoring air quality across a sprawling city with thousands of sensors, or analyzing genomic data from millions of patients, a single computer simply cannot handle the load efficiently.

Distributed systems solve this by breaking work into smaller pieces that multiple machines process simultaneously. It’s like having a team of specialists working together on a big project rather than one person trying to do it all. This approach has become essential for organizations dealing with data from sources like electronic health records, IoT devices, e-commerce platforms, and clinical trials—where datasets regularly exceed what any single machine can process in a reasonable timeframe.
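The split, process, and combine pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: threads on one machine stand in for separate nodes, and the function names (`split_into_chunks`, `partial_stats`, `distributed_mean`) and the sample readings are invented for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, n_chunks):
    """Divide a list into n_chunks contiguous pieces of near-equal size."""
    size, remainder = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < remainder else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def partial_stats(chunk):
    """Work done independently by one worker: a local sum and count."""
    return sum(chunk), len(chunk)


def distributed_mean(values, n_workers=4):
    """Split the data, process chunks in parallel, combine the partial results."""
    chunks = split_into_chunks(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_stats, chunks))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


readings = list(range(1, 1001))  # stand-in for sensor readings
mean_reading = distributed_mean(readings)
print(mean_reading)
```

Each worker returns only a small summary (a sum and a count), so the combine step stays cheap no matter how large the chunks are. On a real cluster the same three steps would be handled by a framework rather than a thread pool.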

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, with over 15 years of experience building computational biology tools and pioneering federated distributed data analysis platforms for global healthcare. My work includes contributing to Nextflow, a breakthrough workflow framework used worldwide for genomic data analysis at scale.

Why Centralized Systems Are Killing Your Research — And How Distributed Analysis Fixes It

For decades, the standard approach to data was simple: gather everything in one big “warehouse” and run your queries. But as we enter the era of petabyte-scale research, this centralized model is hitting a wall. When you try to force massive datasets through a single central server, you encounter centralized bottlenecks that are no longer just inconveniences—they are project killers.

In a centralized system, every bit of data must travel to one location. This creates resource contention—where different tasks fight for the same CPU and memory—and massive network congestion. This is often referred to as the “Von Neumann bottleneck” on a macro scale, where the data transfer rate between the storage and the processor limits the overall speed of the system. If that central server fails, your entire research project grinds to a halt, representing a catastrophic single point of failure.

Furthermore, centralized systems rely on vertical scaling (scaling up). This means when you run out of power, you must buy a more expensive, specialized machine. However, hardware has physical limits; we are reaching the end of Moore’s Law, where the cost of doubling a single machine’s power increases exponentially while the performance gains diminish.

Distributed data analysis flips this script by embracing horizontal scaling (scaling out). Instead of moving all data to the computer, we move the computation to the data. By utilizing multiple nodes, we can execute computations simultaneously. This parallel approach drastically reduces the time required to analyze large datasets. For example, simulating climate change scenarios or running complex GWAS (Genome-Wide Association Studies) requires trillions of calculations. On a single high-end workstation, a complex genomic alignment might take weeks; on a distributed cluster of 100 nodes, it can be completed in a few hours.
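A rough way to sanity-check "weeks to hours" claims is Amdahl's law, which caps the speedup by the fraction of the job that cannot be parallelized. The sketch below is a back-of-envelope estimate under assumed numbers: the 14-day runtime and the 99% parallel fraction are hypothetical, not measurements from any real pipeline.

```python
def amdahl_speedup(parallel_fraction, n_nodes):
    """Theoretical speedup from Amdahl's law for a job whose
    parallel_fraction (between 0 and 1) can be spread across n_nodes."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_nodes)


# Hypothetical job: 14 days on one workstation, 99% of the work parallelizable.
single_node_hours = 14 * 24
speedup = amdahl_speedup(0.99, 100)
cluster_hours = single_node_hours / speedup

print(f"speedup on 100 nodes: {speedup:.1f}x")
print(f"estimated runtime: {cluster_hours:.1f} hours")
```

Under these assumptions, 100 nodes deliver roughly a 50x speedup rather than 100x, because the 1% serial portion starts to dominate. That is still enough to turn a two-week job into a matter of hours, but it shows why reducing the serial part matters as much as adding nodes.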

At Lifebit, we’ve seen how moving beyond centralized AI and exploring the power of distributed architectures allows researchers to bypass these limits. It’s not just about speed; it’s about feasibility. Some questions are simply too big for one computer to answer because the RAM requirements alone would exceed the capacity of any commercially available motherboard.

Comparison: Centralized vs. Distributed Analysis

| Feature | Centralized Analysis | Distributed Data Analysis |
|---|---|---|
| Data Location | Single central repository | Spread across multiple nodes/locations |
| Scalability | Vertical (buy a bigger machine) | Horizontal (add more machines) |
| Fault Tolerance | Low (Single point of failure) | High (Redundancy across nodes) |
| Processing Speed | Limited by single CPU/RAM | Parallel processing across many CPUs |
| Cost | High upfront for specialized hardware | Cost-effective commodity hardware or cloud |
| Data Gravity | High (Data must move to compute) | Low (Compute moves to data) |

The 4 Core Components of a High-Performance Distributed Data Analysis Platform

To understand how a distributed data analysis platform works, we need to look under the hood. A distributed system isn’t just a pile of computers; it’s a finely tuned orchestra of components working in harmony, managed by sophisticated software layers.

- Nodes: These are the individual “workers” in the system. Each node has its own processing power (CPU/GPU), memory (RAM), and storage. Nodes can be physical servers in a data center or virtual instances in the cloud. In a heterogeneous system, nodes might have different capabilities—some optimized for storage, others for heavy mathematical computation.

- Clusters and Orchestration: A collection of nodes that work together as a single unit is a cluster. To manage these, we use orchestration tools like Kubernetes or Apache Mesos. These tools act as the “conductor,” deciding which node is healthy enough to take on a new task and automatically restarting tasks if a node fails.

- Sharding (Partitioning): This is the process of breaking a massive dataset into smaller, manageable “shards.” For instance, a global user database might be sharded by region so that European data stays on European nodes. Effective sharding is critical; if data is partitioned poorly, you end up with “hot spots” where one node does all the work while others sit idle.

- Data Replication and Consensus: To prevent data loss, we store copies of the same data on different nodes. A common practice is a replication factor of three. To ensure all nodes agree on the state of the data, distributed systems use consensus algorithms like Paxos or Raft. These ensure that even if the network is spotty, the system maintains a single, consistent version of the truth.

We often talk about a distributed data analysis platform as a “team of specialists.” One node might handle indexing, another handles heavy math, and a third manages the flow of information. This architecture allows for horizontal scalability: when your data grows, you don’t need to replace your servers; you just add more nodes to the cluster.
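To make the replication idea concrete, here is a small sketch of how shards might be placed on nodes with a replication factor of three, and which shards stay readable when nodes fail. The round-robin placement rule, the node names, and both helper functions are assumptions made for illustration; real systems use more sophisticated, rack-aware placement.

```python
def place_replicas(shard_ids, nodes, replication_factor=3):
    """Assign each shard to `replication_factor` distinct nodes, round-robin."""
    if replication_factor > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {}
    for i, shard in enumerate(shard_ids):
        placement[shard] = [
            nodes[(i + k) % len(nodes)] for k in range(replication_factor)
        ]
    return placement


def available_shards(placement, failed_nodes):
    """Return the shards that still have at least one live replica."""
    return {
        shard
        for shard, replicas in placement.items()
        if any(node not in failed_nodes for node in replicas)
    }


nodes = ["node-a", "node-b", "node-c", "node-d", "node-e"]
placement = place_replicas(range(10), nodes)

# Any two simultaneous failures leave every shard with a surviving copy.
survivors = available_shards(placement, failed_nodes={"node-b", "node-c"})
print(len(survivors), "of", len(placement), "shards still available")
```

With three copies of each shard, data only becomes unavailable when all three nodes holding the same shard fail together, which is why a replication factor of three is a common default.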

Handling Data-in-Motion with Distributed Data Analysis

Not all data sits still in a database. We deal with “data-in-motion”—real-time streams from IoT sensors, hospital monitors, or stock tickers. Traditional databases struggle with this because they are designed for “store then query.” Distributed systems use a “query then store” or “query while moving” approach.

This is where technologies like Apache Spark and Apache Kafka shine. Kafka transports the event streams between systems, while Apache Spark provides in-memory data processing, which is significantly faster than traditional disk-based processing (like the older Hadoop MapReduce). By keeping data in RAM across the cluster, Spark can run iterative machine learning algorithms up to 100x faster than disk-based systems in favorable cases.

When we look at workflows and distributed computing for biomedical data, the ability to process data as it arrives is vital. For example, in pharmacovigilance, we need to analyze real-world evidence from multiple hospitals in real-time to spot rare side effects of a new drug before they become a widespread problem. A distributed stream-processing engine can ingest millions of events per second, filtering and aggregating them on the fly to trigger alerts for medical researchers.
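The “filter and aggregate on the fly” idea can be illustrated with a single-process sketch of a tumbling-window counter. It is only a toy: a real deployment would use a stream-processing engine, and the function name, the event format, and the sample stream are all made up for this example.

```python
from collections import Counter


def tumbling_window_alerts(events, window_seconds, threshold):
    """Yield (window_start, key, count) when a key reaches `threshold`
    within a fixed-size time window. Events must arrive in time order."""
    window_start, counts = None, Counter()
    for timestamp, key in events:
        this_window = timestamp - (timestamp % window_seconds)
        if this_window != window_start:
            # A new window has started, so discard the previous counts.
            window_start, counts = this_window, Counter()
        counts[key] += 1
        if counts[key] == threshold:
            yield window_start, key, counts[key]


# Hypothetical adverse-event stream: (seconds since start, reported symptom)
stream = [
    (1, "rash"), (4, "nausea"), (7, "rash"), (9, "rash"),
    (12, "rash"), (15, "nausea"), (31, "rash"),
]
alerts = list(tumbling_window_alerts(iter(stream), window_seconds=10, threshold=3))
print(alerts)
```

The generator never stores the full stream. It keeps only the counts for the current window, which is what lets this style of processing keep up with data that never stops arriving.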

The Role of the CAP Theorem in System Design

In distributed systems, you can’t have it all. This is the “CAP Theorem,” which states that a distributed data store can guarantee at most two of the following three properties at the same time:

- Consistency: Every node sees the same data at the same time. If you update a record on Node A, Node B must show that update immediately.

- Availability: Every request receives a response (even if it’s not the latest data). The system stays up even if some nodes are down.

- Partition Tolerance: The system continues to work even if the network between nodes is broken (a “network partition”).

When designing a federated data lakehouse and choosing its key features, we have to make choices based on the use case. For a bank, consistency is king: you don’t want two different balances showing up on two different ATMs. This is a “CP” system. But for a social media feed or a large-scale sensor network, availability might be more important; it’s okay if you see a post three seconds after your friend does, as long as the app doesn’t crash. This is an “AP” system. Understanding these trade-offs is fundamental to building a platform that actually meets research needs without sacrificing reliability.
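The CP versus AP choice can be shown with a deliberately tiny simulation. The `TwoNodeStore` class below is a toy invented for this example, not a model of any real database: it has two replicas, a flag that simulates a network partition, and a mode that decides what happens to writes while the partition lasts.

```python
class Replica:
    def __init__(self):
        self.value = None


class TwoNodeStore:
    """Toy two-replica store. mode='CP' refuses writes during a partition;
    mode='AP' accepts them locally and lets the replicas diverge."""

    def __init__(self, mode):
        self.mode = mode
        self.replicas = [Replica(), Replica()]
        self.partitioned = False

    def write(self, replica_index, value):
        if not self.partitioned:
            for replica in self.replicas:  # healthy network: update both copies
                replica.value = value
            return True
        if self.mode == "CP":
            return False  # stay consistent by giving up availability
        self.replicas[replica_index].value = value  # AP: stay available
        return True

    def read(self, replica_index):
        return self.replicas[replica_index].value


def simulate(mode):
    store = TwoNodeStore(mode)
    store.write(0, "balance=100")
    store.partitioned = True  # the link between the replicas breaks
    accepted = store.write(0, "balance=80")
    return accepted, store.read(0), store.read(1)


cp_result = simulate("CP")
ap_result = simulate("AP")
print("CP:", cp_result)
print("AP:", ap_result)
```

In CP mode the write is rejected and both replicas keep showing the same balance. In AP mode the write succeeds, but the two replicas now disagree until the partition heals and the system reconciles them.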

Beyond Points: How Distributional Data Analysis Unlocks Hidden Patterns

Wait, is it “Distributed” or “Distributional”? While they sound similar, distributional data analysis is a specific branch of statistics often used within distributed systems.

Traditional statistics looks at “points” (like your height or weight). Distributional data analysis looks at “shapes” (like the entire probability distribution of heights in a city). Because these distributions don’t live in a normal “flat” mathematical space, we need specialized metrics to compare them:

- Wasserstein Metric: Often called the “Earth Mover’s Distance,” it measures how much “work” it would take to transform one distribution shape into another.

- Fisher-Rao Metric: A way to measure the distance between probability distributions based on information geometry, derived from the Fisher information.

- Fréchet Means: In a normal world, the “average” of 10 and 20 is 15. In distributions, the average (or barycenter) is much more complex. The Fréchet mean helps us find the “average shape” of multiple datasets spread across a distributed system.

Academic research, such as “Barycenters in the Wasserstein space” and “Inference for density families using functional principal component analysis,” provides the mathematical foundation for these complex calculations. These methods are essential when we want to compare patient populations across different global hospitals without actually moving the raw data.
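For one-dimensional data, two of the ideas above have simple closed forms, which makes them easy to sketch. With equal-size samples, the 1-Wasserstein distance is the average gap between sorted values, and the Fréchet mean under the 2-Wasserstein metric is obtained by averaging the sorted values position by position (that is, averaging the empirical quantiles). The function names and the sample values are illustrative, and the equal-sample-size restriction is a simplification made for this sketch.

```python
def wasserstein_1d(xs, ys):
    """1-Wasserstein (earth mover's) distance between two equal-size
    samples on the real line: the mean gap between sorted values."""
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


def wasserstein_barycenter_1d(samples):
    """Frechet mean under the 2-Wasserstein metric for equal-size 1D samples:
    average the sorted values (empirical quantiles) position by position."""
    if len({len(s) for s in samples}) != 1:
        raise ValueError("this sketch assumes equal sample sizes")
    sorted_samples = [sorted(s) for s in samples]
    return [sum(column) / len(column) for column in zip(*sorted_samples)]


# Made-up measurements from two hypothetical hospitals.
hospital_a = [60, 62, 65, 70]
hospital_b = [70, 72, 75, 80]

distance = wasserstein_1d(hospital_a, hospital_b)
average_shape = wasserstein_barycenter_1d([hospital_a, hospital_b])
print(distance)
print(average_shape)
```

Note that the barycenter needs only each site's sorted values or quantiles, not a pooled dataset. In higher dimensions neither quantity has such a simple form, and dedicated optimal transport solvers are needed.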

Applying Regression and Time Series to Distributed Data Analysis

How do we predict the future when our data is a complex distribution? We use specialized tools like Fréchet regression and Wasserstein autoregressive models.

- Fréchet Regression: This generalizes linear regression. Instead of predicting a single number (like future stock price), it can predict an entire distribution (like the future spread of a virus across a population).

- Functional PCA (Principal Component Analysis): This helps us find the “modes of variation” in our data. It tells us which parts of the data “shape” are changing the most over time.

Research into Wasserstein regression has shown that these methods are incredibly powerful for analyzing time-series data where each data point is itself a complex distribution of information.

Stop Data Leaks: Solving Consistency and Security in Distributed Systems

Distributed systems are powerful, but they aren’t magic. They come with their own set of technical and ethical headaches that require rigorous engineering to solve.

- Data Skew and the “Straggler” Problem: Imagine a team of 10 people where one person has 90% of the work. That’s data skew. In a distributed system, the entire job is only as fast as the slowest node (the “straggler”). If one node is overloaded or has a hardware glitch, your performance tanks. We solve this with dynamic load balancing and “speculative execution,” where the system identifies a slow node and starts a duplicate of its task on a faster node, taking whichever result finishes first.

- Network Latency and Data Gravity: Moving data between nodes takes time. If nodes are in different countries, the “speed of light” becomes a real problem. We minimize this by respecting “data gravity”: large datasets are hard to move, so we keep the computation as close to the storage as possible. Instead of pulling a 10TB file across the Atlantic to your laptop, you send a 10KB script to the server where the file lives.

- Security and Privacy in a Borderless System: This is the most critical challenge. How do you analyze data across 50 different hospitals without risking a data breach? Traditional security relies on a “perimeter” (a firewall). In distributed analysis, the data is often spread across different legal jurisdictions and different security zones.

At Lifebit, we champion federated data governance. Instead of copying data, we create a “Trusted Research Environment” (TRE). A TRE is a secure digital space where the data stays put, and only the “questions” (the algorithms) travel to the data. This approach to privacy-preserving statistical data analysis on federated databases ensures that sensitive patient information never leaves its secure home.

To further enhance security, we implement several advanced layers:

- Differential Privacy: This adds calibrated mathematical “noise” to the results. The overall statistical trend stays accurate, while the amount any output can reveal about a specific individual is provably bounded, which makes re-identifying a person from the results impractical.

- Secure Multi-Party Computation (SMPC): This allows multiple parties to jointly compute a function over their inputs while keeping those inputs private. No single party ever sees the other parties’ raw data.

- Homomorphic Encryption: This is the “holy grail” of data security—it allows us to perform calculations on encrypted data without ever decrypting it. The results, when decrypted, match the results of operations performed on the plaintext.
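As an illustration of the differential privacy layer, the sketch below applies the Laplace mechanism to a simple count query. It is a teaching example only: the function names, the ages, and the choice of epsilon are invented here, and production systems rely on vetted libraries because naive floating-point noise has known weaknesses.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(scale=1.0 / epsilon, rng=rng)


rng = random.Random(42)
ages = [34, 51, 67, 29, 73, 45, 62, 80, 38, 55]  # made-up patient ages

noisy = private_count(ages, lambda age: age >= 60, epsilon=0.5, rng=rng)
print(f"noisy count of patients aged 60+: {noisy:.2f}")
```

A smaller epsilon means more noise and stronger privacy; a larger epsilon means a more accurate answer with a weaker guarantee. Averaged over many releases the noise cancels out, which is why real systems also track a total privacy budget across queries.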

By following the “Five Safes” framework—Safe People, Safe Projects, Safe Settings, Safe Data, and Safe Outputs—distributed systems can actually be more secure than centralized ones, as they eliminate the need for risky bulk data transfers.

From E-commerce to Genomics: Real-World Impact of Distributed Architectures

Where is distributed data analysis actually being used today? The answer is: almost everywhere that deals with “Big Data” and requires high-velocity decision-making.

- Sustainability and Smart Grids: Modern power grids are no longer one-way streets. They involve solar panels, wind turbines, and electric vehicles all feeding back into the system. Smart grids use distributed analysis to monitor millions of smart meters in real-time. The same pattern powers “precision agriculture,” where drones and soil sensors tell a farmer exactly which square meter of a field needs water or nitrogen. By processing this data at the “edge” (on the farm itself), we reduce the need for massive cloud bandwidth and allow for instant adjustments.

- E-commerce and Fraud Detection: Global platforms like Amazon or Alibaba handle billions of transactions. When you click “buy,” a distributed cluster is running fraud detection algorithms against your purchase history, IP address, and millions of known fraud patterns in milliseconds. If this were centralized, the latency would make the checkout process unbearable. Distributed architectures allow these platforms to scale during events like Black Friday by simply spinning up more nodes in the cloud.

- Genomic Research and Precision Medicine: This is our specialty. To find the cure for a rare disease, you might need to analyze 100,000 whole genomes. No single hospital or even a single country has that many samples for a rare condition. By using a distributed and federated approach, we can link datasets from the UK, USA, Europe, and Singapore. Researchers can run a single query that spans the globe, finding the three or four patients with a specific genetic mutation without the data ever leaving its country of origin.

- Financial Services: High-frequency trading platforms use distributed systems to analyze market trends across multiple global exchanges simultaneously. In this world, a microsecond of latency can mean the difference between a profit and a loss. Distributed systems allow these firms to process vast amounts of historical data to train models while simultaneously applying those models to live market feeds.

The impact is massive. As noted in Federated technology in population genomics, this technology is moving us away from “one-size-fits-all” medicine toward truly personalized care. It allows us to build a global knowledge base of human health while respecting the sovereignty and privacy of every individual’s data.

3 Critical Questions About Distributed Data Analysis Answered

How does distributed data handle partitioning?

Partitioning (or sharding) is usually done in two ways: Hash-based or Range-based.

- Hash-based partitioning uses a mathematical formula (a hash function) to assign data to nodes. This is great for ensuring an even spread of data, preventing “hot spots.” However, it makes “range queries” (like finding all customers aged 20-30) difficult because the data is scattered randomly.

- Range-based partitioning groups data by a specific value, like a date or a zip code. This ensures that when you’re looking for data from “January 2024,” the system knows exactly which node to check. The downside is that if everyone is suddenly looking for January 2024 data, that one node will be overwhelmed.
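The two strategies can be compared side by side in a short sketch. The helper names, the customer IDs, and the month boundaries are assumptions for illustration; `hashlib` is used instead of Python's built-in `hash()` so that the same key always maps to the same node across runs.

```python
import hashlib
from bisect import bisect_right
from collections import Counter


def hash_partition(key, n_nodes):
    """Map a key to a node using a stable hash, modulo the node count."""
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n_nodes


def range_partition(key, boundaries):
    """Map a key to a node by range. boundaries[i] is the first key
    that belongs to node i + 1, and the list must be sorted."""
    return bisect_right(boundaries, key)


# Hash-based: 1,000 hypothetical customer IDs spread over 4 nodes.
spread = Counter(hash_partition(f"customer-{i}", 4) for i in range(1000))
print("records per node:", dict(sorted(spread.items())))

# Range-based by month (YYYY-MM strings sort chronologically):
# node 0 holds everything before February, node 1 February, node 2 March onward.
boundaries = ["2024-02", "2024-03"]
january_node = range_partition("2024-01", boundaries)
february_node = range_partition("2024-02", boundaries)
print("January lives on node", january_node)
```

The hash version spreads records roughly evenly but gives no clue where “all of January” lives, so a range query has to ask every node. The range version answers that question with a single lookup, at the cost of concentrating load when one range becomes popular.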

What is the difference between distributed and federated analysis?

This is a common point of confusion, but the distinction is vital for compliance and privacy:

- Distributed analysis usually happens within one organization’s “walls” or a single cloud environment. You own all the computers and all the data; you’re just spreading the work out for speed and scale. The data is often moved and reshuffled between nodes freely.

- Federated analysis is like distributed analysis on “privacy mode.” It happens across different organizations (like two different hospitals or two different countries). Each entity keeps its data behind its own firewall and maintains full ownership. The analysis “visits” each site, performs the calculation locally, and only brings back the aggregated results (the “answers”), never the raw data. Federated analysis is a practical way to meet data protection and residency requirements under regulations such as GDPR and HIPAA.
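A minimal sketch of the federated pattern: each site computes a small summary locally, and the coordinator combines the summaries without ever seeing a raw record. The site names, the lab values, and both function names are hypothetical, and the example leaves out the authentication, auditing, and output checks a real deployment would need.

```python
def local_summary(site_records):
    """Runs inside each site's firewall and returns only aggregates."""
    return {"n": len(site_records), "total": sum(site_records)}


def federated_mean(summaries):
    """Runs at the coordinator, which sees summaries but never raw records."""
    n = sum(s["n"] for s in summaries)
    total = sum(s["total"] for s in summaries)
    return total / n


# Made-up lab values held by three hypothetical hospitals.
sites = {
    "hospital_uk": [5.1, 6.3, 5.8],
    "hospital_us": [6.0, 6.4],
    "hospital_sg": [5.5, 5.9, 6.1, 5.7],
}

summaries = [local_summary(records) for records in sites.values()]
pooled_mean = federated_mean(summaries)
print(f"mean across all sites: {pooled_mean:.3f}")
```

The result is identical to the mean of the pooled data, yet only two numbers per site cross the firewall. Aggregates from very small groups can still reveal information, which is one reason federated platforms pair this pattern with output checks.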

Can small businesses benefit from distributed architectures?

Absolutely. A decade ago, you needed a team of PhDs and a multi-million dollar server room to run a distributed system. Today, thanks to the cloud and open-source software, it is accessible to everyone.

- Serverless Computing: Small businesses can use services like AWS Lambda or Google Cloud Functions to run distributed tasks without managing any servers at all.

- Dask and Ray: These are Python libraries that allow developers to scale their existing code from a single laptop to a massive cluster with very little extra effort. If your Excel sheet is getting too slow, moving to a distributed Python framework is the logical next step. Small businesses can use these tools for everything from optimizing delivery routes to analyzing customer sentiment on social media.

Scale Your Research Now: Why Distributed Data Analysis is Non-Negotiable

The era of waiting 20 hours for a report to run is over. Distributed data analysis has transformed from a niche academic concept into the backbone of the modern economy and scientific research. By breaking down massive datasets and processing them in parallel, we’ve unlocked the ability to solve problems that were once considered impossible.

At Lifebit, we believe that data should work for you, not the other way around. Our federated AI platform is designed to take these complex distributed concepts and make them simple, secure, and actionable for researchers around the globe. Whether it’s finding the next breakthrough in precision medicine or optimizing global supply chains, the “secret sauce” is clear: don’t work harder, work together—across a distributed network.

Ready to dive deeper into the future of research? Check out our Federated analytics ultimate guide to see how we’re putting these principles into practice every day.