From Labs to Lives: A Guide to AI in UK Healthcare

NHS Crisis: Can AI Cut the 250,000 Staff Gap and Save Billions?

AI is changing how the NHS diagnoses disease, treats patients, and manages care. Here’s what you need to know about AI in UK healthcare:

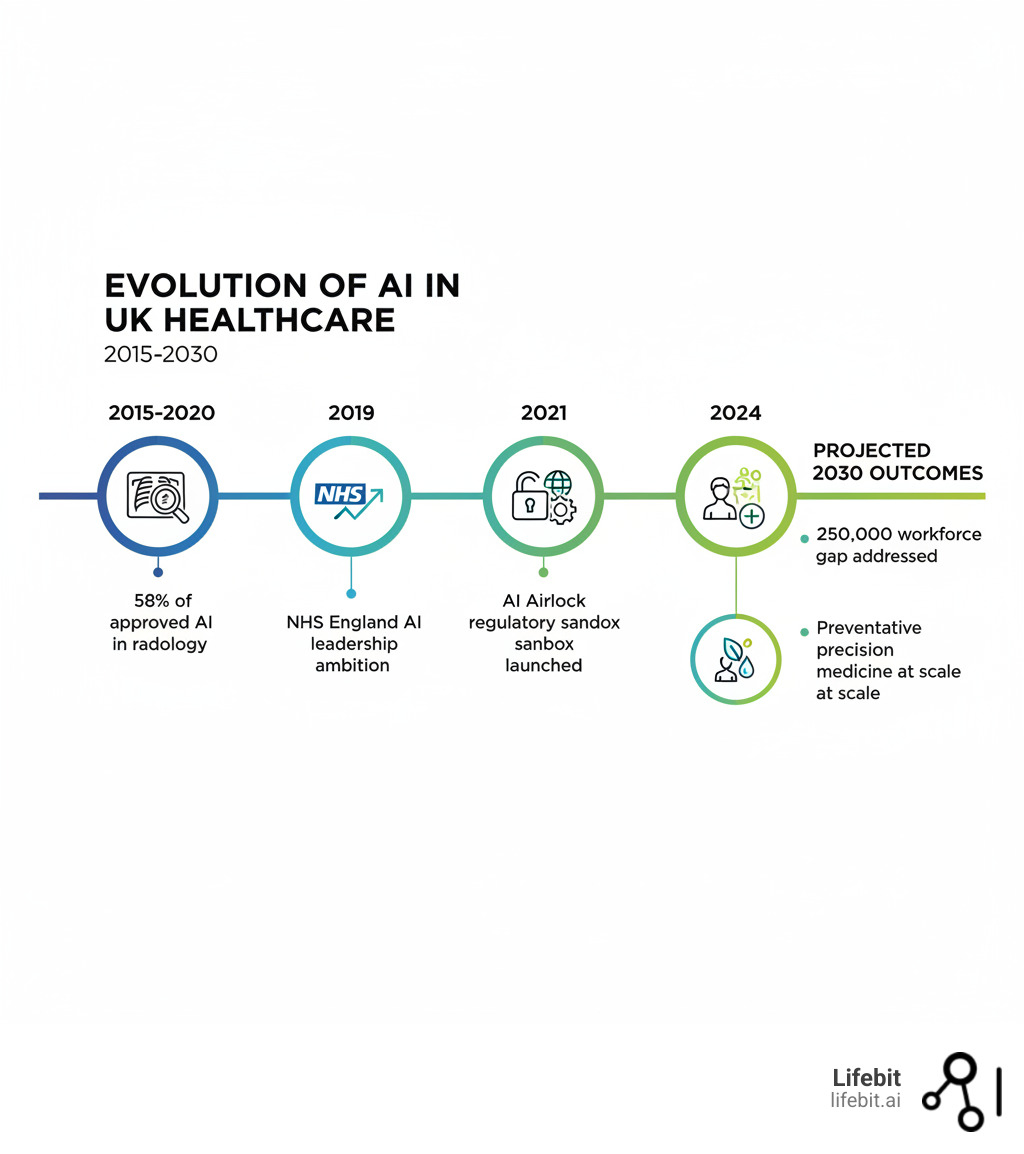

- Current Role: The UK is the first country to join the global HealthAI regulatory network, positioning itself as a leader in safe AI adoption

- Key Applications: AI is already used in radiology (58% of approved AI devices), diabetic retinopathy screening, cancer treatment planning, and predicting patient risks

- Major Benefits: Up to 90% faster radiotherapy planning, 73% reduction in patient waiting times, and potential £12.5 billion annual savings for NHS England

- Challenges: Workforce shortages (250,000 gap by 2030), algorithmic bias, data governance, and ensuring patient trust

- Regulation: The MHRA leads oversight, including through the AI Airlock, a regulatory sandbox for testing new tools before NHS-wide rollout

The NHS is under unprecedented pressure. By 2030, the staff shortage could hit 250,000 posts amid rising costs, longer waiting times, and an ageing population needing more care.

Artificial Intelligence (AI) offers a lifeline. It involves machines performing human-like tasks: learning from data, recognising patterns, and making predictions. In healthcare, this includes Machine Learning (algorithms that improve with experience) and Deep Learning (multi-layered neural networks that learn patterns from vast datasets).

The UK government aims to make the NHS a world leader in AI adoption. The goal is to save lives, cut waiting times, and free clinicians to focus on patient care.

AI can spot lung conditions earlier, personalise cancer treatment, and predict which patients need urgent intervention, and it could automate up to 70% of some routine administrative tasks. Some systems have even learned to outperform 99.8% of intensive care doctors in specific survival scenarios.

But realising this potential requires robust regulation, ethical data use, and tools that clinicians trust and understand.

As Maria Chatzou Dunford, CEO of Lifebit, I’ve spent over 15 years at the intersection of genomics, AI, and healthcare data. This guide explains how AI is reshaping UK healthcare—from regulation to real-world use—and what it means for patients, clinicians, and leaders.

UK’s AI Gamble: How “Pro-Innovation” Rules Aim to Keep Patients Safe Without Killing Progress

When it comes to regulating AI in healthcare, the UK has chosen a path that’s both bold and pragmatic. The UK isn’t rushing into AI without guardrails, nor is it drowning innovation in red tape. Instead, it’s pioneering a “pro-innovation” approach—balancing technological breakthroughs with patient safety.

On June 24th, the UK became the first country to join the HealthAI Global Regulatory Network, a collaboration of health regulators focused on the safe, effective use of AI. The network acts as an early warning system and knowledge-sharing platform: if an AI tool shows unexpected behaviour in one country, regulators elsewhere are alerted, so they can tackle the shared challenges of adaptive AI together.

This pioneer status matters for AI in UK healthcare because it positions us at the table where international standards are being shaped. We’re not following; we’re leading.

How does our approach compare globally? While the European Union pursues its landmark AI Act—a comprehensive, risk-based framework that categorises AI systems from ‘minimal’ to ‘unacceptable’ risk—and the United States adapts existing sectoral regulations like those from the FDA, the UK has chosen a third way. This ‘pro-innovation’ approach avoids a single, monolithic law in favour of empowering individual regulators like the MHRA to create bespoke rules for their domains. The philosophy is that healthcare AI evolves too rapidly for slow-moving, one-size-fits-all legislation. This agility allows the UK to respond to new technologies like generative AI without being constrained by a rigid legal text, but it also places a significant burden on sectoral regulators to keep pace.

The goal is to create an ecosystem for developing, testing, and safely deploying AI tools to benefit patients and ease the crushing pressure on our NHS.

MHRA’s AI Airlock: A “Sandbox” to Test AI Before It Touches a Patient

The Medicines and Healthcare products Regulatory Agency (MHRA) is actively helping innovation happen safely. Its flagship initiative, the AI Airlock, is a regulatory sandbox allowing companies to test new AI medical devices with the regulator providing feedback in real time, all before a wider NHS rollout.

The sandbox provides a controlled environment where developers can test their AI medical devices on real (but anonymised) NHS data with direct oversight from the regulator. The process is collaborative; instead of a final submission for approval, developers receive iterative feedback. For example, a company developing an algorithm to predict sepsis risk could test its model’s performance against historical patient data within the Airlock. The MHRA would help assess its accuracy, identify potential biases, and ensure its decision-making process is transparent before it is ever considered for a live clinical trial. This de-risks innovation for startups and ensures that by the time a tool reaches patients, it’s been battle-tested with regulatory rigour built in from the ground up.
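The retrospective check described above can be sketched in a few lines. This is a minimal illustration, not the MHRA’s method: it assumes the model outputs a sepsis risk score between 0 and 1 and that historical outcomes are already labelled, and the function names, thresholds and toy cohort are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    sensitivity: float  # share of true sepsis cases the model flagged
    specificity: float  # share of non-sepsis cases the model did not flag
    ppv: float          # share of flags that turned out to be real sepsis cases


def _ratio(numerator: int, denominator: int) -> float:
    # Avoid dividing by zero when a category is empty in a small sample.
    return numerator / denominator if denominator else 0.0


def evaluate_at_threshold(scores, outcomes, threshold):
    """Compare risk scores (0-1) with recorded outcomes (1 = sepsis, 0 = no sepsis)."""
    tp = fp = tn = fn = 0
    for score, outcome in zip(scores, outcomes):
        flagged = score >= threshold
        if flagged and outcome == 1:
            tp += 1
        elif flagged:
            fp += 1
        elif outcome == 0:
            tn += 1
        else:
            fn += 1
    return Metrics(
        sensitivity=_ratio(tp, tp + fn),
        specificity=_ratio(tn, tn + fp),
        ppv=_ratio(tp, tp + fp),
    )


# Hypothetical retrospective cohort: model scores and the outcomes recorded at the time.
scores = [0.91, 0.15, 0.62, 0.08, 0.77, 0.30, 0.55, 0.05]
outcomes = [1, 0, 1, 0, 0, 0, 1, 0]

for threshold in (0.5, 0.8):
    print(threshold, evaluate_at_threshold(scores, outcomes, threshold))
```

Raising the threshold from 0.5 to 0.8 removes the false alarm but misses two of the three real cases; surfacing trade-offs like this before live use is the point of testing in a sandbox.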

The MHRA is also pioneering the use of real-world evidence for AI tools. Unlike static medical devices, AI systems learn and evolve. The MHRA continuously monitors these tools in practice, gathering data on actual performance to inform regulatory decisions.

This is especially critical for adaptive and generative AI—technologies that change their behaviour based on new data. The MHRA is building frameworks that can evolve alongside the technology, ensuring safety and performance standards keep pace with innovation.
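One simple form of the ongoing monitoring described above is to track a deployed model’s accuracy over a rolling window of confirmed outcomes and raise an alert when it drifts below its pre-deployment level. The sketch below assumes each prediction is eventually matched to a confirmed outcome. The class name, window size and 5-point tolerance are hypothetical choices, and real post-market surveillance would look at far more than one metric.

```python
from collections import deque


class PerformanceMonitor:
    """Rolling check of a deployed model against confirmed outcomes."""

    def __init__(self, baseline_accuracy: float, window_size: int = 100, tolerance: float = 0.05):
        self.baseline = baseline_accuracy        # accuracy measured before deployment
        self.tolerance = tolerance               # allowed drop before raising an alert
        self.window = deque(maxlen=window_size)  # oldest results fall off automatically

    def record(self, prediction, actual) -> None:
        self.window.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.window:
            return None
        return sum(self.window) / len(self.window)

    def needs_review(self) -> bool:
        # Wait for a full window so a handful of early cases cannot trigger an alert.
        if len(self.window) < self.window.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


# Hypothetical stream: the model was 90% accurate at approval, but 3 of the
# last 10 confirmed cases were wrong.
monitor = PerformanceMonitor(baseline_accuracy=0.90, window_size=10)
for prediction, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(prediction, actual)
print(monitor.rolling_accuracy(), monitor.needs_review())
```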

For those wanting to dive deeper, the UK’s regulatory approach to AI medical devices provides comprehensive guidance.

The £3.4B Upgrade: Can the NHS Build the Skills and Infrastructure for an AI Future?

The best AI in the world is useless if healthcare professionals don’t trust or understand it. The 2019 Topol Review highlighted the need for workforce preparedness for a digital future. With a projected 250,000 staff shortage looming by 2030, AI isn’t replacing clinicians—it’s augmenting them, freeing up time for complex decision-making and human connection.

That’s where initiatives like the Digital, Artificial Intelligence and Robotics Technologies in Education (DART-Ed) programme come in. Their aim is comprehensive upskilling so that NHS staff are digitally literate and confident using AI. A radiologist needs to understand how an algorithm flags suspicious lesions, when to trust it, and when to override it. A GP needs to interpret AI-generated risk scores in the context of their patient’s full clinical picture.

The infrastructure challenge is just as urgent. The NHS is not a single entity but a complex federation of trusts, each with its own legacy IT systems, patient record software, and data storage practices. This creates digital silos, where a patient’s primary care records from their GP are disconnected from their hospital’s specialist imaging data. AI thrives on large, integrated datasets, and this fragmentation is a major barrier. The £3.4 billion investment is targeted at breaking down these silos by promoting interoperability standards like HL7 FHIR (Fast Healthcare Interoperability Resources), which allows different systems to ‘speak the same language.’ Building a secure, cloud-based infrastructure that can unify these disparate data sources is the foundational plumbing required to make widespread AI deployment a reality. Without it, even the most brilliant algorithms will be starved of the data they need to function.
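To make “speak the same language” concrete, here is a sketch of a minimal HL7 FHIR R4 Observation for a heart-rate reading, built as plain JSON in Python. The LOINC code 8867-4 (heart rate) and the UCUM unit code `/min` are standard; the patient ID, timestamp and helper function names are hypothetical, and a real integration would validate resources against the full FHIR specification and the relevant profiles rather than hand-building dictionaries.

```python
import json

LOINC = "http://loinc.org"


def heart_rate_observation(patient_id: str, bpm: float, when: str) -> dict:
    """Build a minimal FHIR R4 Observation resource for one heart-rate reading."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "category": [{"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/observation-category",
            "code": "vital-signs",
        }]}],
        # LOINC 8867-4 = heart rate; any system that understands LOINC can recognise it.
        "code": {"coding": [{"system": LOINC, "code": "8867-4", "display": "Heart rate"}]},
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": when,
        # UCUM unit code "/min" (per minute), as used for FHIR vital signs.
        "valueQuantity": {"value": bpm, "unit": "beats/minute",
                          "system": "http://unitsofmeasure.org", "code": "/min"},
    }


def read_heart_rate(resource: dict) -> float:
    """Receiving side: accept any Observation coded with LOINC 8867-4."""
    codes = {c.get("code") for c in resource.get("code", {}).get("coding", [])
             if c.get("system") == LOINC}
    if resource.get("resourceType") != "Observation" or "8867-4" not in codes:
        raise ValueError("not a heart-rate Observation")
    return resource["valueQuantity"]["value"]


# One system serialises the reading; a different system parses it back.
payload = json.dumps(heart_rate_observation("example-123", 88, "2024-05-01T09:30:00Z"))
print(read_heart_rate(json.loads(payload)))
```

Because both sides rely on shared code systems (LOINC, UCUM) rather than each vendor’s internal field names, the receiving system does not need to know which software produced the reading.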

Getting the infrastructure and workforce right is what turns AI in UK healthcare from potential into reality, from proof of concept to genuine patient benefit.

AI in the Trenches: How Algorithms Are Slashing Wait Times and Finding Cancer Faster

The promise of AI in UK healthcare is moving from theoretical to tangible. AI isn’t just a futuristic concept; it’s already here, revolutionising how clinicians spot cancer, plan treatments, and keep patients out of hospital.

Radiologists process a huge volume of medical images daily, a task demanding time and precision—both of which are stretched thin. AI is stepping in to shoulder some of this burden, and the results are remarkable.

From Scans to Screens: AI That Spots Disease Earlier and Cuts Planning Time by Up to 90%

Radiology has become AI’s proving ground. Between 2015 and 2020, 58% of AI and machine learning-based medical devices approved in the USA and Europe were designed for radiological use. In mammogram analysis, several AI tools have been deployed across NHS trusts to act as a ‘second reader.’ The algorithm independently analyses the scan and flags suspicious areas, which are then prioritised for review by a human radiologist. Studies, including a major one published in The Lancet Oncology, have shown that this hybrid approach can maintain high accuracy while potentially reducing the workload of radiologists by nearly half, a critical gain in a specialty facing severe staff shortages.
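The “second reader” pattern above can be sketched as a worklist that reorders, rather than replaces, human review. This assumes a hypothetical model that assigns each scan a suspicion score between 0 and 1; the `Scan` class, the 0.7 flag threshold and the scan IDs are illustrative and not taken from any deployed NHS product.

```python
from dataclasses import dataclass


@dataclass
class Scan:
    scan_id: str
    ai_score: float  # hypothetical model output: 0 = no concern, 1 = highly suspicious


def build_worklist(scans, flag_threshold=0.7):
    """Order scans for radiologist review.

    Every scan still goes to a human reader; the AI only moves flagged
    cases to the top of the queue (highest score first) and marks them.
    """
    flagged = sorted(
        (s for s in scans if s.ai_score >= flag_threshold),
        key=lambda s: s.ai_score,
        reverse=True,
    )
    routine = [s for s in scans if s.ai_score < flag_threshold]  # keeps arrival order
    return [(s.scan_id, True) for s in flagged] + [(s.scan_id, False) for s in routine]


queue = [Scan("A", 0.20), Scan("B", 0.85), Scan("C", 0.72), Scan("D", 0.40)]
print(build_worklist(queue))
```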

In cancer care, the impact is profound. The InnerEye project, an open-source technology developed by Microsoft Research and now being implemented in NHS hospitals such as Addenbrooke’s in Cambridge, automates the painstaking process of tumour outlining (segmentation) on scans. This task, which can take a radiotherapist hours, is reduced to minutes. The AI’s proposed outline is then reviewed and refined by the clinician, slashing preparation time for head and neck and prostate cancer radiotherapy planning by up to 90%. For patients waiting anxiously to begin treatment, this means days or even weeks gained.
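A common way to measure how much a clinician had to change an AI-proposed outline is the Dice similarity coefficient, 2|A∩B| / (|A| + |B|), where 1 means the two outlines match exactly and 0 means they do not overlap. The sketch below uses tiny hypothetical 2D masks; it is not InnerEye’s code, and real contours are 3D volumes handled with dedicated imaging libraries.

```python
def dice(mask_a, mask_b):
    """Dice similarity between two same-shaped binary masks (lists of rows of 0/1)."""
    a = {(r, c) for r, row in enumerate(mask_a) for c, v in enumerate(row) if v}
    b = {(r, c) for r, row in enumerate(mask_b) for c, v in enumerate(row) if v}
    if not a and not b:
        return 1.0  # two empty outlines agree perfectly
    return 2 * len(a & b) / (len(a) + len(b))


# Hypothetical AI outline and the clinician's refined version of it.
ai_contour = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
clinician_edit = [
    [0, 1, 1, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]
print(round(dice(ai_contour, clinician_edit), 3))
```

Here the clinician added one pixel to the AI’s four-pixel outline, giving 2 × 4 / (4 + 5) ≈ 0.89; tracking this score over many cases shows how much editing the tool typically needs.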

Diabetic retinopathy screening in ophthalmology showcases AI’s ability to catch problems early. These algorithms have demonstrated robust performance, identifying sight-threatening changes in the retina before patients notice symptoms. Early detection means early intervention.

Symptom checkers and AI chatbots are being trialled in primary care to help with triage. These tools augment human judgment, ensuring urgent cases get immediate attention while handling routine queries efficiently.

Perhaps most transformative are virtual wards, which use AI-powered remote monitoring to allow patients to recover safely at home instead of in a hospital. A patient recovering from major surgery or managing a chronic condition like COPD is sent home with a kit of medical-grade sensors (e.g., a continuous glucose monitor, a smart blood pressure cuff, a pulse oximeter). Data from these devices is streamed to a central clinical hub. An AI platform analyses this real-time data, establishing a baseline for each patient and using predictive algorithms to spot subtle signs of deterioration long before a human might notice. For example, it could flag a gradual decrease in oxygen saturation or a slight but steady increase in heart rate, prompting a nurse to conduct a video consultation or a home visit. This model has been shown to reduce hospital readmissions, improve patient satisfaction, and free up thousands of hospital beds—a critical advantage for an overstretched NHS.
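The per-patient baseline and deterioration check described above might look roughly like this. It is a deliberately simple rule-based sketch, assuming regularly spaced SpO2 and heart-rate readings; the baseline length, the 3-point SpO2 drop and the 1 bpm-per-reading trend threshold are hypothetical placeholders rather than clinical values, and production platforms typically rely on validated predictive models.

```python
from statistics import mean


def slope(values):
    """Least-squares slope of readings against their position (change per reading)."""
    n = len(values)
    if n < 2:
        return 0.0
    x_bar, y_bar = (n - 1) / 2, mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


def check_patient(spo2, heart_rate, baseline_n=6, spo2_drop=3.0, hr_rise=1.0):
    """Return alert reasons for one patient's readings (oldest first).

    The personal baseline is the mean of the first `baseline_n` readings.
    All thresholds are illustrative placeholders, not clinical values.
    """
    alerts = []
    baseline_spo2 = mean(spo2[:baseline_n])
    recent_spo2 = mean(spo2[-3:])  # average of the last three readings
    if baseline_spo2 - recent_spo2 >= spo2_drop:
        alerts.append(f"SpO2 {baseline_spo2 - recent_spo2:.1f} points below baseline")
    hr_trend = slope(heart_rate[baseline_n:])  # trend since the baseline period
    if hr_trend >= hr_rise:
        alerts.append(f"heart rate rising about {hr_trend:.1f} bpm per reading")
    return alerts


# Hypothetical readings for one patient on a virtual ward.
spo2 = [96, 97, 96, 96, 97, 96, 95, 94, 93, 92, 92, 91]
heart_rate = [72, 74, 73, 72, 73, 74, 76, 78, 80, 82, 83, 85]
print(check_patient(spo2, heart_rate))
```

Comparing each patient with their own baseline, rather than with population norms, is what lets a gradual slide be noticed even when every individual reading still looks acceptable.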

Automating the Administrative Burden to Free Up Clinicians

Beyond direct clinical diagnosis, one of the most immediate impacts of AI is in tackling the immense administrative burden that consumes clinician time. It’s estimated that up to 70% of some routine healthcare tasks could be automated. For instance, AI-powered clinical coding tools can analyse doctors’ notes and patient records to automatically assign the correct billing and diagnostic codes, a process that is currently manual, time-consuming, and prone to error. Similarly, ambient clinical intelligence uses voice recognition and natural language processing to listen to a patient-doctor consultation and automatically generate clinical notes in the electronic health record. This allows the doctor to focus entirely on the patient rather than on a computer screen. Other applications being trialled include intelligent appointment scheduling to minimise ‘no-shows’ and optimise clinic flow, and AI-driven management of hospital bed allocation, which predicts discharge times to improve patient throughput and reduce A&E backlogs. While less glamorous than disease diagnosis, these operational efficiencies are vital for relieving pressure on the workforce and saving billions in costs.
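To show why automated clinical coding is harder than it looks, here is a toy phrase-matching sketch. It is a hypothetical stand-in for the natural language processing these tools actually use: the three-entry lookup table and the negation cues are assumptions, though the codes themselves (J44.1, E11.9, I10) are real ICD-10 codes.

```python
import re

# Toy lookup from phrases to ICD-10 codes; real coding tools use full
# classifications, trained language models and review by clinical coders.
PHRASE_TO_ICD10 = {
    "copd exacerbation": "J44.1",
    "type 2 diabetes": "E11.9",
    "hypertension": "I10",
}
NEGATION_CUES = ("no ", "denies ", "without ")


def suggest_codes(note: str) -> list[str]:
    """Suggest codes for phrases in a free-text note, skipping simply negated mentions."""
    text = note.lower()
    suggestions = []
    for phrase, code in PHRASE_TO_ICD10.items():
        for match in re.finditer(re.escape(phrase), text):
            # Check a short window of text before the phrase for a negation cue.
            window = text[max(0, match.start() - 20):match.start()]
            if not any(cue in window for cue in NEGATION_CUES):
                suggestions.append(code)
                break  # one suggestion per code is enough
    return suggestions


note = "Admitted with COPD exacerbation. Background of type 2 diabetes. No history of hypertension."
print(suggest_codes(note))
```

Without the negation check, this note would wrongly be coded for hypertension, which is exactly the kind of error that makes human review of AI-suggested codes important.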

Beyond the Lab: AI That Designs Drugs and Personalises Your Treatment

The traditional drug development path is slow and expensive, often taking over a decade and costing billions. AI is rewriting this timeline.

One breakthrough, AlphaFold, solved a 50-year-old problem in biology by accurately predicting the 3D structures of proteins, the molecular building blocks of our bodies. Understanding these structures is fundamental to designing drugs that work precisely.

This computational power extends to immunomics and synthetic biology, where AI helps researchers understand disease mechanisms at a molecular level, moving us toward targeted preventive strategies.

Clinical trials are also being optimised by AI. Algorithms can identify which patients are most likely to benefit from a treatment, design more efficient trials, and even predict outcomes. This saves money and gets effective treatments to patients faster.

The most exciting frontier is personalised treatment plans. AI can analyse a patient’s genetic profile, medical history, and lifestyle to create digital twins—virtual models of individuals. Clinicians can test interventions in this digital environment before prescribing a single pill. We’re moving from one-size-fits-all medicine to truly individualised care.

At Lifebit, we’ve seen how federated data platforms accelerate this revolution. By enabling secure access to global biomedical data, researchers can train AI models on diverse datasets without compromising patient privacy—a critical capability for developing equitable tools.

If you want to explore the broader implications, read about AI’s role in transforming medicine in this comprehensive review.

From catching diseases earlier to designing drugs faster, AI is fundamentally changing what’s possible. The question isn’t whether AI will reshape the NHS—it’s how quickly we can implement these tools safely and equitably.

The Power Players: Meet the UK’s AI Innovation Engine

The transformation of UK healthcare through AI isn’t happening in isolation. It’s the result of a vibrant ecosystem where private companies, academic powerhouses, and government initiatives work together to turn research into real solutions for patients.

Private companies, from startups to tech giants, provide entrepreneurial energy, technical expertise, and investment. Technology providers are actively seeking partnerships with healthcare organisations to drive medical innovation. Imperial College London’s research, for example, showcases collaborations with pharmaceutical companies for drug target identification and with med-tech firms for cardiac imaging. These partnerships bridge the gap between lab breakthroughs and bedside applications.

Research institutions form the backbone of this innovation. Universities like Imperial College London and the University of Leeds are home to world-class teams pushing the boundaries of AI in healthcare. The NIHR (National Institute for Health and Care Research) provides vital funding for health and social care research, including AI projects.

Government initiatives provide strategic direction. The NHS AI Lab stands at the centre, driving the responsible and ethical adoption of AI. Through programmes like the AI Award, the NHS identifies and scales promising AI technologies. AI Award winners have demonstrated tangible benefits in areas from retinal screening to antimicrobial stewardship.

Key innovation hubs include:

- NHS AI Lab: the central force for safe AI adoption

- UKRI Centre for Doctoral Training in AI for Healthcare at Imperial College

- Northern Pathology Imaging Co-operative (NPIC) at the University of Leeds

- MRC London Institute of Medical Sciences at Imperial

- Cancer Research UK Convergence Science Centre

What makes this ecosystem powerful is how it addresses secure data access. Federated platforms enable researchers to work with sensitive health data while maintaining strict privacy standards. This allows multiple organisations to collaborate on AI development without moving patient data—a crucial capability for advancing precision medicine at scale.

This collaborative spirit—where academic rigour meets commercial innovation and government support—is what positions the UK as a leader in healthcare AI. It’s about creating an environment where algorithms can be tested, refined, and deployed to genuinely improve patient care.

The Trust Deficit: Can We Stop Biased AI and Protect Patient Data?

The transformative power of AI in UK healthcare comes with profound responsibilities. As we integrate these technologies, we must confront uncomfortable truths about data quality, algorithmic fairness, and privacy. These aren’t abstract debates but decisions that directly impact patient outcomes and health equity.

Code Red for Bias: How Flawed Data Can Create Discriminatory AI—And How to Fix It

AI systems learn from data, and if that data is flawed, the AI will become a vehicle for propagating and even amplifying those flaws at an unprecedented scale. This algorithmic bias can manifest in several insidious ways:

- Selection Bias: Occurs when the data used to train the model is not representative of the population it will be used on. The well-documented failure of a melanoma detection algorithm trained predominantly on images of light skin to perform accurately on patients with darker skin is a stark example. If an AI is trained on data from only one hospital in an affluent area, it may not perform well for patients in a more diverse, less affluent region.

- Historical Bias: Arises when the data itself reflects past societal prejudices. For example, if a historical dataset shows that a certain demographic group received less pain medication for the same condition, an AI trained on this data might learn to recommend less medication for future patients from that group, thus perpetuating a historical inequity.

- Measurement Bias: Happens when the way data is collected or measured varies in quality across groups. A pulse oximeter that is less accurate on darker skin tones will feed flawed data into an AI system, leading to systematically worse predictions for Black patients.

Addressing this requires more than just good intentions. It demands a concerted effort to curate diverse and representative datasets, such as those being built by the UK Biobank. It also requires rigorous auditing of algorithms for fairness before and after deployment, using statistical metrics to check for performance disparities across different demographic groups. Without this, we risk building a two-tier system of AI-driven healthcare where the benefits are not shared equally.
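The fairness audit described above can start with something as simple as comparing a model’s sensitivity (true positive rate) across demographic groups, which is one of several fairness metrics in use. The group labels, records and 10-point tolerance below are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: (group, predicted_positive, actually_positive) triples.

    Returns each group's sensitivity (true positive rate): the share of
    real cases in that group that the model caught.
    """
    cases = defaultdict(int)
    caught = defaultdict(int)
    for group, predicted, actual in records:
        if actual:
            cases[group] += 1
            if predicted:
                caught[group] += 1
    return {group: caught[group] / cases[group] for group in cases}


def audit(records, max_gap=0.1):
    """Flag the model if sensitivity differs between groups by more than max_gap."""
    rates = sensitivity_by_group(records)
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap > max_gap


# Hypothetical validation results for two demographic groups, "A" and "B".
records = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, True),
    ("A", False, False), ("B", True, True), ("B", False, True),
    ("B", True, True), ("B", False, True), ("B", True, False),
]
rates, gap, flagged = audit(records)
print(rates, gap, flagged)
```

In this toy data the model catches every real case in group A but only half in group B, so the audit flags it; a check like this should run both before deployment and periodically afterwards.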

But representative data isn’t enough. Healthcare professionals need to understand how an AI reached its conclusion. This is where explainability (XAI) is critical. The “black box” problem, where an AI’s reasoning is hidden, is incompatible with clinical practice. Doctors can’t blindly trust an algorithm they don’t understand.
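For simple models, explainability can be exact. In a logistic regression, each input adds weight × value to the log-odds of the outcome, so a clinician can see which factors drove a given score. The sketch assumes a hypothetical sepsis-risk model with invented weights; for complex models, techniques such as SHAP approximate the same kind of per-feature breakdown.

```python
import math

# Hypothetical logistic-regression sepsis-risk model; the weights are invented.
WEIGHTS = {"age_over_65": 0.8, "resp_rate_per_min": 0.12, "lactate_mmol_per_l": 0.6}
INTERCEPT = -5.0


def explain(patient):
    """Return predicted risk plus each input's contribution to the log-odds, largest first."""
    contributions = {name: w * patient[name] for name, w in WEIGHTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    risk = 1 / (1 + math.exp(-log_odds))  # logistic function maps log-odds to 0-1
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return risk, ranked


risk, reasons = explain({"age_over_65": 1, "resp_rate_per_min": 24, "lactate_mmol_per_l": 3.0})
print(f"risk {risk:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f} to log-odds")
```

Here respiratory rate contributes most to this patient’s score, which a clinician can check against the bedside picture before acting on it.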

Initiatives like model cards are gaining traction. These act like nutrition labels for AI, displaying an algorithm’s capabilities, limitations, and training data. They help clinicians understand when to trust it and when to apply scrutiny. The ICO guidance on explaining AI decisions offers practical frameworks for making these systems comprehensible.
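A model card can be as simple as a structured record published alongside the model. The fields below are a hypothetical subset inspired by the sections model cards usually contain (intended use, training data, evaluation, limitations); every value is a placeholder for an imaginary model, not a real product or result.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """A hypothetical, minimal model card: a structured 'nutrition label' for a model."""
    model_name: str
    intended_use: str
    out_of_scope_uses: list[str]
    training_data: str
    evaluation: dict[str, float]  # metric name -> value on held-out test data
    known_limitations: list[str] = field(default_factory=list)


# All values are placeholders for an imaginary model, not real results.
card = ModelCard(
    model_name="example-wrist-fracture-detector",
    intended_use="Second reader for adult wrist X-rays; a radiologist makes the final call.",
    out_of_scope_uses=["Patients under 18", "Images from unvalidated scanners"],
    training_data="Retrospective X-rays from three hypothetical NHS sites",
    evaluation={"sensitivity": 0.90, "specificity": 0.85},
    known_limitations=["Not yet evaluated on images from portable X-ray units"],
)
print(json.dumps(asdict(card), indent=2))
```

Keeping the card machine-readable means it can be versioned with the model and checked automatically, for example to refuse deployment if the limitations field is empty.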

The Digital Fortress: Protecting Patient Privacy in the Age of AI

Patient health data is among the most sensitive information that exists. A breach can expose deeply personal health information. The trust patients place in the NHS to protect this data is sacred.

UK GDPR and the common law duty of confidentiality form the legal backbone of health data protection. Compliance means embedding privacy into AI architecture from the start—a principle known as data protection by design and default.

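Before an AI technology is deployed in the NHS, UK GDPR requires a Data Protection Impact Assessment (DPIA) wherever the processing is likely to pose a high risk to individuals, which will usually be the case when AI processes health data. DPIAs are a crucial checkpoint to identify risks and implement safeguards before patient data is used.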

Caldicott Guardians serve as the conscience of data governance within NHS organisations, ensuring confidential patient information is used ethically and only when justified.

To build powerful and equitable AI, researchers need access to vast and diverse datasets. However, moving highly sensitive patient data from multiple hospitals into a single, centralised database creates a massive privacy risk and a tempting target for cyberattacks. This is the central challenge that federated learning and Trusted Research Environments (TREs) are designed to solve. Instead of bringing the data to the algorithm, the algorithm is brought to the data.

Here’s how it works: a researcher develops an AI model and sends it to be trained inside the secure, firewalled environment of Hospital A. The model learns from the local data, and only the updated model parameters, never the patient records themselves, are sent back to be aggregated. The same process is repeated at Hospital B, Hospital C, and so on. The raw patient data never leaves the protection of its original source. This federated approach, which is the foundation of platforms like Lifebit’s, allows AI models to be trained collaboratively across multiple institutions, and even across international borders, without pooling patient records. It is a paradigm shift that enables the development of robust, generalisable AI while upholding the highest standards of data protection.
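A widely used concrete form of this pattern is federated averaging (FedAvg): each site trains the shared model on its own data, and a coordinator averages the returned parameters, weighted by how many records each site holds. The sketch below is a minimal, dependency-free illustration using a toy linear model and three hypothetical hospitals whose data were generated from y = 2x + 1. It is not Lifebit’s implementation, and production systems typically add safeguards such as secure aggregation on top of this basic loop.

```python
def local_train(weights, data, lr=0.01, epochs=50):
    """Runs inside one hospital: gradient descent for y ~ w*x + b on local data only."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum((w * x + b - y) * x for x, y in data) * 2 / n
        grad_b = sum((w * x + b - y) for x, y in data) * 2 / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n  # only two parameters and a record count leave the site


def federated_average(updates):
    """Coordinator: average site parameters weighted by each site's record count."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return (w, b)


# Hypothetical private datasets, generated from y = 2x + 1 so the answer is known.
sites = {
    "hospital_a": [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    "hospital_b": [(1.5, 4.0), (3.0, 7.0)],
    "hospital_c": [(0.5, 2.0), (2.5, 6.0), (3.5, 8.0), (4.0, 9.0)],
}

global_model = (0.0, 0.0)
for _ in range(30):  # each round: send model out, train locally, average the results
    updates = [local_train(global_model, data) for data in sites.values()]
    global_model = federated_average(updates)

w, b = global_model
print(f"w = {w:.3f}, b = {b:.3f}")
```

Because the toy data follow y = 2x + 1 exactly, the averaged model should end up close to w = 2 and b = 1, even though the coordinator never sees a single record.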

Crucially, UK GDPR Article 22 gives individuals the right not to be subject to a decision based solely on automated processing where that decision has legal or similarly significant effects on them. AI can support clinical decisions, but the final judgment must rest with a human clinician.

Ultimately, the success of AI in UK healthcare hinges on public trust. The memory of controversies like the care.data programme, paused in 2014 and scrapped in 2016 after public outcry over data sharing, looms large. To avoid repeating past mistakes, the NHS and its partners must engage in radical transparency. This means clearly communicating to the public how their data is being used, for what purpose, and with what safeguards. Initiatives like citizen assemblies and public consultations are crucial for building a social licence for AI in healthcare, ensuring that the development and deployment of these powerful tools align with public values and expectations. Trust is not a given; it must be earned and maintained through continuous dialogue, accountability, and a demonstrable commitment to putting patient safety and privacy first.

The £12.5B Question: Is Federated AI the Key to a Smarter, Healthier UK?

AI in UK healthcare is no longer a distant promise. It’s already working in hospitals and labs, slashing radiotherapy planning times and improving disease screening. We are at a pivotal moment in healthcare history.

The future is preventative care that predicts risk years in advance and precision medicine that personalises treatment to a patient’s molecular profile. This isn’t science fiction—it’s the direction we’re heading.

Yet technology alone won’t get us there. The UK’s proactive regulatory framework, investment in workforce training, and commitment to ethical data governance create the guardrails for safe innovation.

The key is unlocking siloed health data. Secure, federated access to the data that powers AI breakthroughs is essential to realise AI’s full potential while maintaining strict privacy.

This is where platforms like Lifebit’s make the difference. Our next-generation federated AI platform enables secure, real-time access to global biomedical and multi-omic data without compromising patient privacy. With our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer), researchers can collaborate across data ecosystems and generate real-time insights that accelerate drug discovery and drive precision medicine.

We’ve spent over 15 years building the infrastructure that makes federated AI work at scale, ensuring that AI innovations in UK healthcare are both transformative and trustworthy.

Building a smarter, healthier UK requires collaboration. The challenges of bias, data silos, and public trust are real. But so are the opportunities: shorter waiting times, personalised treatments, and a more sustainable healthcare system.

The change is underway. Discover how to accelerate your research with federated AI and be part of shaping this exciting new chapter in UK healthcare.