The Decentralized Approach to Data Governance Explained

Why Your Data Governance Model Is Broken

Centralized data governance was designed for a slower, simpler world. Today, it is the bottleneck between your teams and the insights they need.

A single team acting as gatekeeper for all data requests and policies simply can’t keep up with explosive data volumes and the need for real-time access for AI, pharmacovigilance, and cohort analysis. Researchers, clinicians, and analytics teams wait days or weeks for approvals while critical questions pile up.

The data explosion is overwhelming centralized models. With 180 zettabytes of data expected by 2025, no central team can hold the context for every dataset, and 77% of people surveyed believe centralized data teams lack domain expertise. When clinical research teams wait weeks for data access, the central model is the bottleneck.

Decentralized data governance offers a way out. Instead of concentrating decision-making and data ownership in a single central authority, it distributes them across teams and domains. This model boosts speed, leverages domain expertise, and increases agility, all while a central framework maintains consistent standards.

In practice, that means:

- Clinical research, genomics, or real-world evidence teams manage their own data products.

- A central council defines non-negotiable rules for security, privacy, and interoperability.

- Automation enforces those rules consistently across regions such as the UK, USA, Europe, Canada, Singapore, and Israel.

Key differences at a glance:

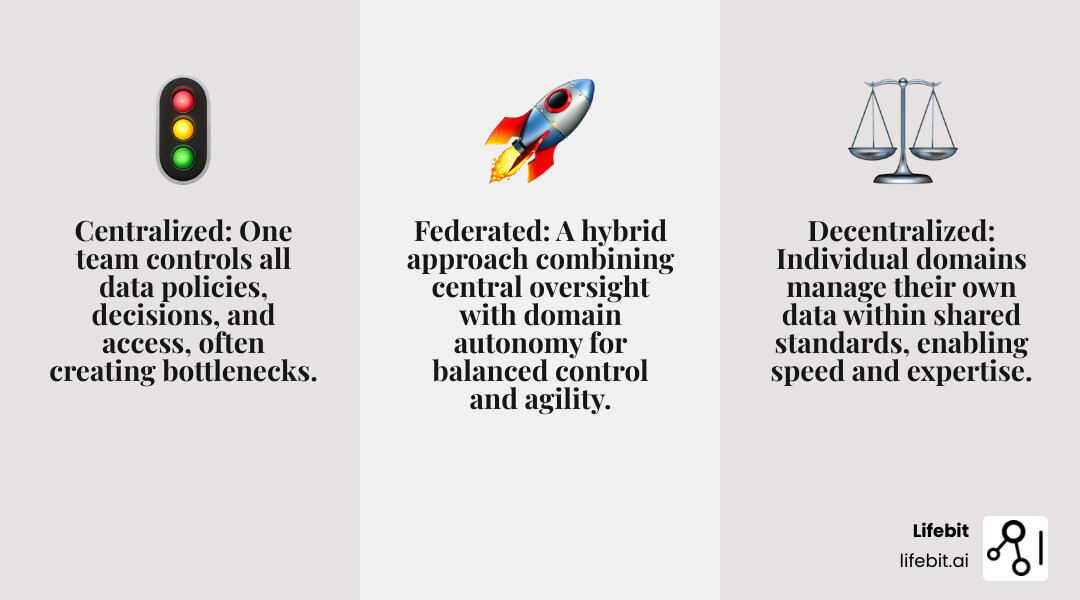

- Centralized: One team controls all data policies, decisions, and access

- Decentralized: Individual domains manage their own data within shared standards

- Federated: Hybrid approach combining central oversight with domain autonomy

Decentralized governance pushes ownership to the domain experts who understand the data’s context and quality needs. It turns governance from a slow, ticket-driven process into a scalable operating model.

I am Maria Chatzou Dunford, CEO and Co-founder of Lifebit. At Lifebit, we pioneered federated platforms for secure analytics across global biomedical data. Our next-generation federated AI platform brings this decentralized governance model to life, enabling secure, real-time analytics across Trusted Research Environments (TREs), Trusted Data Lakehouses (TDLs), and our Real-time Evidence & Analytics Layer (R.E.A.L.). I have seen how this approach transforms data from a compliance burden into a competitive advantage for pharma, governments, and public sector organizations.


Centralized vs. Decentralized: Which Data Governance Model Wins?

Data governance models define who makes decisions about data and how those decisions are enforced. The core question of modern data governance is whether those decisions are better made centrally or distributed across many teams. As data volumes grow, traditional top-down approaches are failing, and we need models that empower teams while maintaining control. That is where decentralized and federated governance come in. Most modern organizations, especially those operating across regions like the UK, USA, and Europe, find their sweet spot in a hybrid approach.

Let’s break down the main models:

| Attribute | Centralized Governance | Decentralized Governance | Federated Governance |
|---|---|---|---|
| Decision-Making | Single central authority | Distributed across individual domains/teams | Central guidance with local autonomy |
| Control | High, uniform | High within domains, low enterprise-wide | Balanced: global standards, local implementation |
| Speed | Slow (bottlenecks) | Fast (within domains) | Moderate to Fast (empowered domains, clear guidelines) |
| Consistency | High (but often rigid) | Low (risk of fragmentation) | High (through shared standards and tools) |
| Scalability | Poor (struggles with growth) | Good (new domains can easily join) | Excellent (designed for growth and complexity) |
| Domain Expertise | Low (central team distant from data context) | High (teams closest to data) | High (domain expertise with central support) |
| Accountability | Central team | Individual domains | Shared (central for standards, domains for execution) |
| Complexity | Simple to define, complex to scale | Simple to implement locally, complex to coordinate globally | Moderate to define, simpler to scale and operate |
| Risk of Silos | High (central team owns all, others are consumers) | High (if not coordinated) | Low (interoperability built into design) |

Centralized Governance: The Traditional Fortress

Centralized data governance works like a fortress. A single authority dictates all data policies and acts as the gatekeeper for data quality, security, and compliance. This model emerged from the need for control and standardization, particularly in the era of on-premises data warehouses.

Benefits of this approach include high consistency and clear accountability, which are crucial for meeting strict regulatory requirements in industries like banking or healthcare, where audit and security norms (e.g., GDPR, HIPAA) demand strong central oversight. For example, Georgia-Pacific uses a centralized model to ensure uniform product data standards across its manufacturing operations.

However, this model creates significant drawbacks in the modern data landscape. Centralized teams become bottlenecks, slowing innovation as every data request must pass through a single queue. They often lack domain expertise, leading to one-size-fits-all policies that are either too restrictive for some teams or too lenient for others. This inflexibility frustrates business users, who may bypass official channels and create “shadow IT” systems, ironically increasing security and compliance risks.

Decentralized Governance: Empowering the Domains

Decentralized data governance is like a marketplace where each domain manages its own data, tailoring policies to its specific needs. This model flips the centralized approach on its head, prioritizing speed and autonomy.

The primary benefits are agility, speed, and domain expertise. Teams can act swiftly without waiting for central approval, drawing on their deep understanding of the data’s context, quality, and use cases. This fosters a strong sense of ownership and accountability at the domain level. The finding that 77% of people surveyed believe centralized teams lack domain expertise helps explain why organizations are turning to decentralized governance. For instance, GE Aviation’s self-service framework empowered employees to access data independently, leading to thousands of data products created by users worldwide.

However, going fully decentralized can introduce challenges. Without a coordinating mechanism, it risks creating a “data swamp” or a “wild west” scenario. Different domains might define the same metric (e.g., “customer lifetime value”) in conflicting ways, leading to inconsistent reporting and a lack of enterprise-wide trust. This fragmentation makes interoperability difficult and can duplicate costs for technology and compliance efforts.

Federated Governance: The Best of Both Worlds

Recognizing the limits of both extremes, many forward-thinking organizations are adopting a federated data governance model. This hybrid approach balances centralized standards with decentralized execution, creating a scalable and agile framework.

A central governance council, composed of representatives from business domains and central functions (like IT, security, and legal), defines global, non-negotiable policies for security, privacy, and interoperability. It also provides shared tools and platforms to ensure consistency. The individual domains, however, are empowered to manage their own data products, define their own quality rules, and make decisions within these centrally defined guardrails. This delivers enterprise-level consistency with operational flexibility, making it a popular choice for large, global organizations.

For example, the Scottish Environment Protection Agency (SEPA)’s federated center of excellence embeds data experts in business teams, enabling data access for over 1,000 employees while maintaining governance. Similarly, Panera’s ‘One Panera’ model encouraged data sharing by having managers maintain metadata under central standards. Both follow the principle of separating the decisions that should remain at the local level from those that must be made globally.

5 Principles to Unlock Speed, Quality, and Cost Savings with Your Data

Moving from a centralized bottleneck to a dynamic, data-driven organization requires a new mindset. When implemented thoughtfully, decentralized data governance can transform data from a compliance burden into a business accelerator, empowering teams to make faster, more informed decisions.

Core Principles of a Decentralized Data Governance Model

Successful decentralized data governance models are built on these foundational principles:

- Federated Ownership: Responsibility is distributed to domain experts who know the data best. Each data domain (e.g., clinical research, marketing) has a designated Data Product Owner who is accountable for the data’s strategic value, quality, and usability. They are supported by Data Stewards who handle day-to-day management, ensuring data is properly documented, secured, and compliant. This fosters deep ownership and allows for custom policies that fit the data’s context. SEPA’s model, for instance, embeds data experts in business teams for effective daily data management.

- Data as a Product: Data is treated not as a technical byproduct but as a valuable product with a lifecycle, owners, and consumers. To be effective, data products must be designed for consumption. They must be Findable (via a data catalog), Accessible (with clear access request protocols), Interoperable (using standard formats and APIs), and Reusable (well documented for broad use). They must also be Trustworthy (with clear lineage and quality metrics) and Secure (with built-in controls).

- Self-Service Access with Guardrails: To unlock agility, teams need self-service tools to discover, understand, and access data without friction, often through a central data marketplace or portal. This freedom operates within a framework of automated guardrails: policies and controls that enforce security, privacy, and compliance without requiring manual intervention for every request. Users gain autonomy while the organization’s rules are applied consistently.

- Policy Automation (Policy-as-Code): Manual policy enforcement is slow, inconsistent, and error-prone. With policy-as-code, governance rules (e.g., “mask all patient identifiers for non-clinical users”) are written as code and integrated into data platforms and pipelines. Policies are then applied automatically and consistently across the entire data estate, reducing errors and speeding up compliance. Businesses using automation such as generative AI for decentralized compliance report 50% fewer non-compliance incidents.

- Interoperability and Standardization: To prevent the decentralized model from creating new silos, a central authority must define and enforce global standards for metadata, APIs, and data formats. This ensures that data products from different domains can be easily discovered, combined, and understood. The central team provides the “glue” by separating decisions that should remain local from those that must be made globally, fostering a cohesive data ecosystem rather than a collection of isolated assets.
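To make the “data as a product” and interoperability principles more concrete, here is a minimal Python sketch. It shows how a central team might publish a required-metadata standard and check each domain’s data product descriptor against it before the product is listed in the catalog. The field names, the `DataProduct` structure, and the list of allowed formats are hypothetical illustrations. They are not the API of any particular catalog tool.

```python
from dataclasses import dataclass, field

# Hypothetical global metadata standard published by the central council.
# Each required field is mapped to the data-product principle it supports.
REQUIRED_FIELDS = {
    "owner": "accountable Data Product Owner",
    "steward": "Data Steward for day-to-day management",
    "description": "Reusable: documentation for consumers",
    "schema": "Interoperable: declared columns and types",
    "access_policy": "Accessible/Secure: how access is requested and controlled",
    "lineage": "Trustworthy: upstream sources",
}

# Hypothetical list of shared, interoperable formats.
ALLOWED_FORMATS = {"parquet", "csv", "fhir-json"}


@dataclass
class DataProduct:
    name: str
    domain: str
    format: str
    metadata: dict = field(default_factory=dict)


def validate_product(product: DataProduct) -> list[str]:
    """Return a list of problems; an empty list means the product can be published."""
    problems = []
    for key, purpose in REQUIRED_FIELDS.items():
        if not product.metadata.get(key):
            problems.append(f"missing '{key}' ({purpose})")
    if product.format not in ALLOWED_FORMATS:
        problems.append(f"format '{product.format}' is not a shared standard format")
    return problems


if __name__ == "__main__":
    adverse_events = DataProduct(
        name="adverse_events_v2",
        domain="pharmacovigilance",
        format="parquet",
        metadata={
            "owner": "pv-product-owner",
            "steward": "pv-steward",
            "description": "De-identified adverse event reports",
            "schema": {"event_id": "string", "onset_date": "date"},
            "access_policy": "approved-researchers-only",
        },
    )
    for issue in validate_product(adverse_events):
        print(issue)  # missing 'lineage' (Trustworthy: upstream sources)
```

The central team owns `REQUIRED_FIELDS` and `ALLOWED_FORMATS`, while each domain owns the contents of its own descriptors. This mirrors the split between global standards and local execution.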

Key Benefits for Your Organization

Adopting decentralized data governance offers tangible business benefits:

- Increased Agility and Speed: By pushing decisions to the data’s edge, teams respond faster to business needs, accelerating time-to-insight and innovation.

- Scalability for Growth: A federated approach is inherently scalable. New domains can be onboarded with clear guidelines, allowing the governance framework to grow with the organization without overwhelming a central team.

- Improved Data Quality through Domain Expertise: Empowering domain experts who understand the data’s nuances leads to more accurate, reliable, and trustworthy information, which is the foundation for all analytics and AI.

- Fostering a Data-Driven Culture: When teams have ownership and easy access to data, they become more invested in its value and governance. This creates a culture where data is a shared asset used to drive decisions at all levels.

- Cost Efficiency by Reducing Central Team Workload: Automation and domain empowerment shift the central team’s focus from manual, repetitive tasks like access approvals to high-value strategic enablement, such as building better tools and coaching domain teams. Porto, an insurance company, became 40% more efficient with a federated model and automation.

Overcoming the Challenges: Mitigation Strategies

While compelling, a decentralized approach has potential pitfalls. Acknowledge and mitigate them:

- Potential for Data Silos:
  - Mitigation: Enforce interoperability through a mandatory, shared data catalog, universal metadata standards, and API-first data product design. Promote a culture of data sharing through incentives.

- Ensuring Consistency:
  - Mitigation: A central governance council must define and communicate clear, non-negotiable global policies. Use policy-as-code to ensure these rules are enforced uniformly and automatically.

- Managing Security and Compliance Risks:
  - Mitigation: Implement a zero-trust security posture with strong encryption, granular access controls (ABAC/RBAC), and continuous monitoring. Automate compliance checks within data pipelines.

- Conflict Resolution Between Domains:
  - Mitigation: Establish clear escalation paths and a governance council to mediate cross-domain issues. Implement data contracts to formalize expectations between data producers and consumers.

- Need for a Strong Central Platform and Clear Communication:
  - Mitigation: Invest in a robust, user-friendly shared platform that makes governance easy. Maintain continuous communication through regular meetings, newsletters, and dedicated support channels.
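A data contract between a producing domain and its consumers can be as simple as a versioned schema that the producer promises to honor, checked automatically on every delivery. The sketch below assumes the contract is a Python dict that maps column names to an expected type and a nullability flag. This is an illustrative format, not a specific data-contract standard, and the dataset and column names are hypothetical.

```python
from datetime import date

# Hypothetical contract published by a producing domain (Genomics)
# and relied on by a consuming domain (Clinical Trials).
CONTRACT = {
    "name": "variant_calls",
    "version": "1.2.0",
    "columns": {
        "sample_id": {"type": str, "nullable": False},
        "gene": {"type": str, "nullable": False},
        "call_date": {"type": date, "nullable": False},
        "allele_frequency": {"type": float, "nullable": True},
    },
}


def check_record(record: dict, contract: dict) -> list[str]:
    """Compare one record with the contract and list every violation."""
    violations = []
    columns = contract["columns"]
    for col, rule in columns.items():
        value = record.get(col)
        if value is None:
            if not rule["nullable"]:
                violations.append(f"{col}: required value is missing")
            continue
        if not isinstance(value, rule["type"]):
            violations.append(
                f"{col}: expected {rule['type'].__name__}, got {type(value).__name__}"
            )
    # Columns the producer added without updating the contract.
    for extra in sorted(record.keys() - columns.keys()):
        violations.append(f"{extra}: column not in contract v{contract['version']}")
    return violations


if __name__ == "__main__":
    good = {"sample_id": "S1", "gene": "BRCA1", "call_date": date(2024, 5, 1)}
    bad = {"sample_id": "S2", "gene": None, "call_date": "2024-05-01", "batch": 7}
    print(check_record(good, CONTRACT))  # []
    print(check_record(bad, CONTRACT))
    # ['gene: required value is missing', 'call_date: expected date, got str', 'batch: column not in contract v1.2.0']
```

When a check like this fails, the dispute has concrete evidence attached, so the escalation path can start from a specific violation rather than a general complaint.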

The 5-Step Playbook to Launch Your Decentralized Governance Model

Transitioning to an agile, decentralized data governance approach is a strategic journey that requires careful planning, executive buy-in, and cultural change. It’s not just a technology project but a shift in how the organization operates. Here’s a practical playbook to guide your organization through this transformation.

Step 1: Establish a Federated Governance Charter & Council

First, secure executive sponsorship and form a cross-functional governance council. This council should include leaders from key business domains, IT, data architecture, legal, and security. Its first task is to create a federated governance charter. This document is the constitution for your data governance program and should clearly define:

- Vision and Mission: What are you trying to achieve with data governance?

- Principles: The core beliefs guiding your approach (e.g., “data is a shared asset”).

- Scope: Which data, domains, and processes are in scope initially.

- Roles and Responsibilities: High-level definitions for the council, data owners, etc.

- Decision Rights: A clear framework (like RACI or DACI) for how global vs. local decisions are made.

- Global Policies: The initial set of non-negotiable rules for security, privacy, and interoperability across all operations (UK, USA, Europe, Canada, Singapore, Israel).

Step 2: Map Business Domains and Assign Ownership

Next, map your data landscape into logical business domains. A domain should represent a distinct area of the business with clear expertise, such as ‘Clinical Trials Data,’ ‘Pharmacovigilance Data,’ ‘Genomics Data,’ ‘Marketing Analytics,’ or ‘Supply Chain Logistics.’ Avoid defining domains based on technology systems. For each domain, assign a Data Product Owner (accountable for the data’s value) and one or more Data Stewards (responsible for daily management). Use a RACI (Responsible, Accountable, Consulted, Informed) matrix to clarify these roles for critical data assets and processes, ensuring there are no gaps or overlaps in ownership.
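The “no gaps or overlaps” rule for a RACI matrix lends itself to an automated check. This minimal sketch assumes the matrix is stored as a mapping from each critical data asset to the people or teams assigned to it. The asset and team names are hypothetical. The check flags any asset that does not have exactly one Accountable party (the Data Product Owner) and any asset without at least one Responsible party (a Data Steward).

```python
from collections import Counter

# Hypothetical RACI matrix: asset -> {person or team: RACI letter}
RACI = {
    "clinical_trials.enrolment": {"ct_owner": "A", "ct_steward": "R", "legal": "C", "council": "I"},
    "genomics.variant_calls": {"gx_owner": "A", "gx_owner_2": "A", "gx_steward": "R"},
    "pv.adverse_events": {"pv_steward": "R", "security": "C"},
}


def find_raci_gaps(matrix: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Return {asset: [problems]} for assets that break the ownership rules."""
    report = {}
    for asset, assignments in matrix.items():
        counts = Counter(assignments.values())  # missing letters count as 0
        problems = []
        if counts["A"] == 0:
            problems.append("no Accountable owner (gap)")
        elif counts["A"] > 1:
            problems.append(f"{counts['A']} Accountable owners (overlap)")
        if counts["R"] == 0:
            problems.append("no Responsible steward (gap)")
        if problems:
            report[asset] = problems
    return report


if __name__ == "__main__":
    for asset, problems in find_raci_gaps(RACI).items():
        print(asset, "->", "; ".join(problems))
    # genomics.variant_calls -> 2 Accountable owners (overlap)
    # pv.adverse_events -> no Accountable owner (gap)
```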

Step 3: Implement a Shared Technology Platform

Technology is the enabler of federated governance. Implement a shared platform that makes it easy for domains to govern their data while adhering to central standards. This platform is the backbone of your decentralized data governance and should include:

- Data Catalog & Metadata Management: A central, searchable inventory of all data products, so users can find what they need. Commercial tools like Collibra and Alation, or open-source solutions like Amundsen, are common choices.

- Access Control Solutions: A robust engine to manage and enforce access policies (e.g., RBAC and ABAC), integrating with identity management systems.

- Data Quality Monitoring: Tools for domain owners to define and monitor quality rules for their data products.

- Policy Automation Engine: A system to execute policy-as-code for consistent enforcement.
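For the data quality monitoring component, domain owners declare their own rules while the shared platform evaluates every domain’s rules the same way. Here is a minimal sketch, assuming that rules are named Python predicates applied to each record and that each domain sets its own pass-rate threshold. The rule names, fields, and 95% default are illustrative and not taken from any specific tool.

```python
from typing import Callable

Record = dict
Rule = Callable[[Record], bool]

# Hypothetical quality rules owned by a Clinical Trials domain.
CLINICAL_RULES: dict[str, Rule] = {
    "patient_id_present": lambda r: bool(r.get("patient_id")),
    "age_in_range": lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
    "visit_after_enrolment": lambda r: isinstance(r.get("visit_day"), int) and r["visit_day"] >= 0,
}


def quality_report(records: list[Record], rules: dict[str, Rule], threshold: float = 0.95) -> dict:
    """Compute each rule's pass rate and flag rules below the domain's threshold."""
    report = {}
    for name, rule in rules.items():
        passed = sum(1 for r in records if rule(r))
        rate = passed / len(records) if records else 1.0
        report[name] = {"pass_rate": rate, "ok": rate >= threshold}
    return report


if __name__ == "__main__":
    records = [
        {"patient_id": "P1", "age": 54, "visit_day": 3},
        {"patient_id": "P2", "age": 130, "visit_day": 10},
        {"patient_id": "", "age": 61, "visit_day": 5},
        {"patient_id": "P4", "age": 47, "visit_day": 0},
    ]
    for name, result in quality_report(records, CLINICAL_RULES).items():
        status = "OK" if result["ok"] else "BELOW THRESHOLD"
        print(f"{name}: {result['pass_rate']:.0%} {status}")
    # patient_id_present: 75% BELOW THRESHOLD
    # age_in_range: 75% BELOW THRESHOLD
    # visit_after_enrolment: 100% OK
```

The pass rates this produces can feed directly into the quality metrics tracked in Step 5.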

Step 4: Enable Self-Service with Automated Guardrails

With the platform in place, empower your teams. Enable self-service access through a data marketplace or portal where owners can publish their certified data products. This is where policy-as-code comes to life. Implement automated guardrails to enforce security and privacy rules without manual review. For example, a policy could automatically mask sensitive data columns for any user whose role is not ‘Approved Researcher’ or block queries that attempt to join datasets in a non-compliant way. Technologies like generative AI can further automate the classification of sensitive data and suggest appropriate policies, reducing incidents by up to 50% compared to traditional systems.
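The masking guardrail described above can be expressed as policy-as-code. The following minimal Python sketch is not a production policy engine. It assumes that each column carries a sensitivity tag in the data catalog and that the user’s role comes from the identity provider. The tag names and the masking string are hypothetical; the `Approved Researcher` role name comes from the example above.

```python
# Hypothetical sensitivity tags maintained in the data catalog.
COLUMN_TAGS = {
    "patient_id": "direct_identifier",
    "date_of_birth": "direct_identifier",
    "postcode": "quasi_identifier",
    "diagnosis_code": "clinical",
    "outcome": "clinical",
}

SENSITIVE_TAGS = {"direct_identifier", "quasi_identifier"}
PRIVILEGED_ROLE = "Approved Researcher"  # role name from the example policy
MASK = "***"


def is_sensitive(column: str) -> bool:
    """Untagged columns are treated as sensitive, so the policy fails closed."""
    tag = COLUMN_TAGS.get(column)
    return tag is None or tag in SENSITIVE_TAGS


def apply_masking_policy(rows: list[dict], user_role: str) -> list[dict]:
    """Return copies of the rows, masking sensitive columns for non-privileged roles."""
    if user_role == PRIVILEGED_ROLE:
        return [dict(row) for row in rows]
    return [
        {col: (MASK if is_sensitive(col) else value) for col, value in row.items()}
        for row in rows
    ]


if __name__ == "__main__":
    rows = [{"patient_id": "P001", "postcode": "EH1 1AA",
             "diagnosis_code": "C50.9", "outcome": "remission"}]
    print(apply_masking_policy(rows, "Data Analyst"))
    # [{'patient_id': '***', 'postcode': '***', 'diagnosis_code': 'C50.9', 'outcome': 'remission'}]
```

Treating untagged columns as sensitive is a deliberate design choice. If a domain adds a new column and forgets to classify it, the guardrail masks the column rather than exposing it.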

Step 5: Measure, Monitor, and Iterate

Finally, treat governance as an ongoing, agile process, not a one-time project. Define Key Performance Indicators (KPIs) to measure the success and health of your program. These should include:

- Operational Metrics: Time-to-data access, number of data products published, percentage of automated policy decisions.

- Quality Metrics: Data quality scores, number of data quality issues resolved.

- Business Value Metrics: User satisfaction scores (NPS), number of analytics projects enabled, adoption rate of the data platform.

- Compliance Metrics: Number of compliance incidents, audit success rates.

Conduct regular audits and use feedback loops from domain teams and data consumers to continuously refine and improve the framework, policies, and platform.
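Several of the operational metrics above can be computed directly from the platform’s access-request log. This sketch assumes a hypothetical log format in which each request records when it was submitted and resolved, and whether an automated policy or a human made the decision. It computes the median time-to-data access and the percentage of automated policy decisions.

```python
from datetime import datetime
from statistics import median

# Hypothetical access-request log exported from the governance platform.
ACCESS_LOG = [
    {"submitted": "2024-03-01T09:00", "resolved": "2024-03-01T09:30", "decided_by": "policy"},
    {"submitted": "2024-03-01T10:00", "resolved": "2024-03-03T10:00", "decided_by": "human"},
    {"submitted": "2024-03-02T08:00", "resolved": "2024-03-02T08:30", "decided_by": "policy"},
    {"submitted": "2024-03-04T12:00", "resolved": "2024-03-04T18:00", "decided_by": "human"},
]


def access_kpis(log: list[dict]) -> dict:
    """Median time-to-access in hours and percentage of automated policy decisions."""
    if not log:
        return {"median_time_to_access_h": None, "pct_automated_decisions": None}
    hours = []
    for entry in log:
        delta = datetime.fromisoformat(entry["resolved"]) - datetime.fromisoformat(entry["submitted"])
        hours.append(delta.total_seconds() / 3600)
    automated = sum(1 for entry in log if entry["decided_by"] == "policy")
    return {
        "median_time_to_access_h": median(hours),
        "pct_automated_decisions": 100 * automated / len(log),
    }


if __name__ == "__main__":
    print(access_kpis(ACCESS_LOG))
    # {'median_time_to_access_h': 3.25, 'pct_automated_decisions': 50.0}
```

The median is used rather than the mean so that one slow manual review does not dominate the metric. Tracking both numbers over time shows whether automation is actually taking load off the central team.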

How Decentralized Governance Unlocks Data Mesh, AI, and Bulletproof Compliance

In today’s technological landscape, decentralized data governance is not just an organizational choice; it’s a strategic imperative. It is the essential backbone that allows modern data architectures to thrive and enables the responsible, scalable use of AI.

Aligning with Data Mesh and Data Fabric

Modern architectures like Data Mesh and Data Fabric are fundamentally incompatible with centralized, top-down governance. They require a decentralized data governance model to function effectively.

- How Decentralized Governance is a Core Pillar of Data Mesh: Data Mesh is a sociotechnical paradigm that decentralizes analytics based on four principles: 1) domain-oriented ownership, 2) data as a product, 3) self-serve data platform, and 4) federated computational governance. The federated governance model described in this article is the practical implementation of this fourth principle. It provides the standards, policies, and automation needed to ensure that a distributed network of data products remains interoperable, secure, and trustworthy. Without it, a data mesh quickly devolves into a “distributed data swamp,” as explored in Concepts and Approaches for Data Mesh Platforms.

- The Role of a Data Fabric in Unifying Metadata and Enforcement: A Data Fabric is a technology layer that complements the Data Mesh architecture. It provides a unified layer that connects disparate data sources and automates metadata management, data integration, and policy enforcement. A data fabric acts as the technical enabler for a decentralized data governance model, providing a single pane of glass for monitoring and enforcing policies across a distributed landscape.
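One way to picture “federated computational governance” in code is as follows. The central council publishes global policies that every request must pass. Each domain may add stricter rules of its own, but it cannot relax the global ones. This is a minimal sketch under that assumption; the policy names, request fields, and domains are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Request:
    user_region: str
    data_region: str
    purpose: str
    moves_raw_data: bool


Policy = Callable[[Request], bool]

# Global, non-negotiable policies defined by the central council.
GLOBAL_POLICIES: dict[str, Policy] = {
    "purpose_declared": lambda r: bool(r.purpose),
    "no_cross_border_raw_data": lambda r: not (r.moves_raw_data and r.user_region != r.data_region),
}

# Domain-specific rules. They are evaluated in addition to the global ones,
# so a domain can tighten governance but never relax it.
DOMAIN_POLICIES: dict[str, dict[str, Policy]] = {
    "genomics": {"research_purposes_only": lambda r: r.purpose in {"research", "pharmacovigilance"}},
    "marketing": {},
}


def evaluate(request: Request, domain: str) -> tuple[bool, list[str]]:
    """Allow only if every global and every domain policy passes; report failures."""
    checks = [(f"global:{name}", p) for name, p in GLOBAL_POLICIES.items()]
    checks += [(f"{domain}:{name}", p) for name, p in DOMAIN_POLICIES.get(domain, {}).items()]
    failed = [name for name, policy in checks if not policy(request)]
    return (not failed, failed)


if __name__ == "__main__":
    print(evaluate(Request("UK", "DE", "research", moves_raw_data=True), "genomics"))
    # (False, ['global:no_cross_border_raw_data'])
    print(evaluate(Request("UK", "DE", "research", moves_raw_data=False), "genomics"))
    # (True, [])
```

Global and domain policies are namespaced and evaluated separately. A domain rule that reuses a global rule’s name therefore cannot silently replace it.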

Implications for Security, Privacy, and Compliance

For regulated sectors like biopharma and public health operating in regions with strict laws like the UK, USA, and Europe, decentralized data governance is critical for achieving bulletproof security and compliance.

- Automated Policy Enforcement for Regulations: It enables automated enforcement of complex regulations like GDPR, HIPAA, and the California Consumer Privacy Act (CCPA). Systems can automatically apply data masking, enforce purpose-based access controls, or manage data retention policies, reducing human error and ensuring continuous adherence.

- Reducing the Risk of Fines: This automation can substantially reduce the risk of non-compliance fines, which under GDPR can reach 20 million euros or 4% of a company’s total worldwide annual turnover, whichever is higher.

- Enabling a Zero-Trust Security Posture: This model aligns perfectly with a zero-trust security approach, where every access request is explicitly verified, regardless of whether it originates inside or outside the network. This is critical for protecting distributed data assets.

- Solving for Data Sovereignty in Global Operations: For global organizations, it helps manage complex data sovereignty laws. Data can remain in its local jurisdiction (e.g., a hospital in Germany) to comply with national laws, while federated analytics allows secure, compliant analysis across borders without moving the raw data. This offers a technical way to address legal challenges such as the Schrems II ruling in Europe.
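The data-sovereignty point rests on a simple pattern. Each site computes aggregate statistics locally, and only those aggregates cross borders. The sketch below assumes three hypothetical hospital sites and uses a simple mean as the analysis. Real federated platforms add authentication, richer disclosure controls, and more complex analyses. The minimum cell size of 5 is an illustrative suppression threshold, not a regulatory figure.

```python
from dataclasses import dataclass
from typing import Optional

MIN_CELL_SIZE = 5  # illustrative small-count suppression threshold


@dataclass(frozen=True)
class SiteSummary:
    """The only information that leaves a site: aggregates, never raw rows."""
    site: str
    count: int
    total: float


def local_summary(site: str, values: list[float]) -> Optional[SiteSummary]:
    """Runs inside each site's own environment, next to the raw data."""
    if len(values) < MIN_CELL_SIZE:
        return None  # too few records to release safely
    return SiteSummary(site=site, count=len(values), total=sum(values))


def federated_mean(summaries: list[Optional[SiteSummary]]) -> float:
    """Runs at the coordinator and combines site aggregates into a global mean."""
    released = [s for s in summaries if s is not None]
    n = sum(s.count for s in released)
    if n == 0:
        raise ValueError("no site released enough data")
    return sum(s.total for s in released) / n


if __name__ == "__main__":
    summaries = [
        local_summary("london", [62.0, 70.0, 55.0, 58.0, 65.0]),
        local_summary("berlin", [48.0, 52.0, 60.0, 71.0, 69.0]),
        local_summary("singapore", [66.0, 59.0]),  # suppressed: fewer than 5 records
    ]
    print(federated_mean(summaries))  # 61.0
```

Sums and counts combine exactly. The pooled result is therefore the same mean that would have been computed over all released records in one place, even though no raw value ever left its jurisdiction.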

Fueling Responsible AI and Machine Learning

Decentralized data governance is the foundation for building responsible, ethical, and effective AI, as the quality of any AI system is dictated by the quality of its data.

- Ensuring High-Quality, Well-Governed Data for AI Models: By empowering domain experts to own data quality, organizations ensure that AI models are trained on accurate, complete, and trustworthy data. This matters: 61% of organizations are evolving their operating models because of AI technologies, and 60% of organizations fail to realize AI value without solid data governance.

- Data Lineage for Model Explainability (XAI): A federated model ensures detailed data lineage is tracked at the domain level. This lineage is vital for AI model explainability, allowing teams to trace a model’s prediction back to the exact data it was trained on, which is a requirement for debugging and for regulatory scrutiny in critical applications like clinical diagnostics.

- Mitigating Bias and Managing Ethical Risk: Effective governance extends beyond data to the AI models themselves. By defining fairness policies within each data domain and treating AI models as governed products within an MLOps framework, organizations can proactively test for and mitigate algorithmic bias. This ensures that AI systems are not only powerful but also fair and ethical.
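Lineage for explainability is, at its core, a graph traversal. Starting from a model, you walk the recorded “derived from” links back to the raw source datasets. This minimal sketch assumes lineage is stored as a simple adjacency mapping with hypothetical asset names. Catalog and lineage tools store much richer metadata, but the traversal idea is the same.

```python
from collections import deque

# Hypothetical lineage graph: asset -> list of assets it was derived from.
LINEAGE = {
    "model:adverse_event_risk_v3": ["features:pv_training_set"],
    "features:pv_training_set": ["clean:adverse_events", "clean:prescriptions"],
    "clean:adverse_events": ["raw:site_reports_uk", "raw:site_reports_de"],
    "clean:prescriptions": ["raw:ehr_prescriptions"],
}


def upstream_sources(asset: str, graph: dict[str, list[str]]) -> set[str]:
    """Return every root dataset (one with no recorded parents) that feeds `asset`."""
    roots, seen = set(), {asset}
    queue = deque([asset])
    while queue:  # breadth-first walk from the asset towards its sources
        current = queue.popleft()
        parents = graph.get(current, [])
        if not parents and current != asset:
            roots.add(current)
        for parent in parents:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return roots


if __name__ == "__main__":
    print(sorted(upstream_sources("model:adverse_event_risk_v3", LINEAGE)))
    # ['raw:ehr_prescriptions', 'raw:site_reports_de', 'raw:site_reports_uk']
```

Because each domain records its own lineage edges, the model owner can trace a model all the way back to source data owned by other domains without a central team maintaining the full graph by hand.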

Your Top 3 Questions About Decentralized Governance, Answered

We often encounter common questions when discussing the shift to decentralized data governance. Let’s address them head-on.

Is decentralized governance less secure than a centralized model?

No. When implemented correctly, decentralized data governance is often more secure than a traditional model. Here’s why:

- Automated Policy Enforcement: Policies are automated (policy-as-code), reducing the human error common in manual checks.

- Granular Access Controls: Fine-grained access controls (combining RBAC and ABAC) grant access based not just on a user’s role, but also on context like their project or the data’s sensitivity.

- Clear Audit Trails: Every data access is logged and auditable, providing transparency and accountability for any security investigations.

- Least Privilege: The principle of least privilege, granting users only the minimum access they need, is easier to enforce in a domain-centric model with automated guardrails.
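Role-based and attribute-based checks, default-deny least privilege, and audit logging can be combined in a few lines. This is an illustrative Python model, not any specific product’s policy language. The roles, attributes, and sensitivity levels are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

AUDIT_LOG: list[dict] = []

# RBAC layer: which actions each role may perform at all.
ROLE_PERMISSIONS = {
    "researcher": {"read"},
    "data_steward": {"read", "update"},
}

# Sensitivity ordering used by the ABAC layer.
SENSITIVITY_RANK = {"public": 0, "internal": 1, "restricted": 2}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    projects: frozenset
    clearance: str


@dataclass(frozen=True)
class Dataset:
    name: str
    project: str
    sensitivity: str


def is_allowed(user: User, dataset: Dataset, action: str) -> bool:
    """Default-deny: every check must pass, and every decision is logged."""
    role_ok = action in ROLE_PERMISSIONS.get(user.role, set())  # RBAC
    project_ok = dataset.project in user.projects  # ABAC: project context
    # ABAC: unknown clearance or sensitivity values fail closed.
    clearance_ok = (SENSITIVITY_RANK.get(user.clearance, -1)
                    >= SENSITIVITY_RANK.get(dataset.sensitivity, 99))
    allowed = role_ok and project_ok and clearance_ok
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user.name, "dataset": dataset.name,
        "action": action, "allowed": allowed,
    })
    return allowed


if __name__ == "__main__":
    alice = User("alice", "researcher", frozenset({"oncology"}), "restricted")
    tumour = Dataset("tumour_samples", "oncology", "restricted")
    cardio = Dataset("cardio_cohort", "cardiology", "internal")
    print(is_allowed(alice, tumour, "read"))    # True
    print(is_allowed(alice, tumour, "update"))  # False: role lacks the action
    print(is_allowed(alice, cardio, "read"))    # False: not on that project
```

Every decision, including each denial, lands in the audit log. That log provides the trail needed for security investigations.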

What is the role of the central team in a decentralized model?

The central team’s role shifts from gatekeeper to enabler. Their key responsibilities are:

- Provide the Platform and Tools: Building and maintaining the shared technology platform (data catalog, access controls).

- Set Global Standards and Principles: Defining overarching, non-negotiable policies for security, privacy, and interoperability.

- Coach Domain Teams: Providing training and support to help domain owners implement governance effectively.

- Resolve Cross-Domain Conflicts: Acting as a mediator for issues that span multiple domains.

- Measure and Monitor: Tracking KPIs to audit the framework’s overall effectiveness and compliance.

Can this model work in highly regulated industries like healthcare or finance?

Yes, it’s ideal for them, especially through a federated approach. Here’s how decentralized data governance works for industries with strict rules like GDPR and HIPAA in the UK, USA, and Europe:

- Central Team Defines Strict Compliance Rules: The central governance council sets non-negotiable compliance rules, often automated as policy-as-code and embedded in the shared platform.

- Domain Teams Implement Locally: Individual domain teams (e.g., a clinical trials unit) are then responsible for implementing these rules within their specific data context.

- Enables Secure Research on Sensitive Data: At Lifebit, our federated platform allows analysis to run on data where it resides, addressing data sovereignty while ensuring compliance. This balances scientific progress with the imperative of patient data protection.

Conclusion: The Future of Data Is Federated

Traditional, centralized data governance is broken. It creates bottlenecks and lacks the domain expertise needed in today’s data-driven world.

The shift towards decentralized data governance, particularly in its federated form, is a fundamental evolution. It moves governance from a gatekeeper to an enabler, balancing central standards with domain agility. This approach empowers teams, accelerates innovation, and ensures compliance.

For organizations in biomedical research, clinical trials, and pharmacovigilance, this federated approach is essential. It unlocks the full potential of data for life-saving R&D while upholding the highest security, privacy, and compliance standards.

At Lifebit, we are at the forefront of this change. Our next-generation federated AI platform enables secure, real-time access to global biomedical and multi-omic data, empowering biopharma, governments, and public health agencies to conduct large-scale, compliant research with built-in federated governance and advanced AI/ML analytics.

Learn how a federated biomedical data platform can transform your research.