The Definitive Guide to Centralized vs. Decentralized Data Governance

Drowning in 180 Zettabytes? Here’s How to Regain Control Before It’s Too Late

The choice between centralized and decentralized data governance will determine whether you move fast or stall, secure your data or invite risk, and innovate or fall behind as global data races toward 180 zettabytes by 2025.

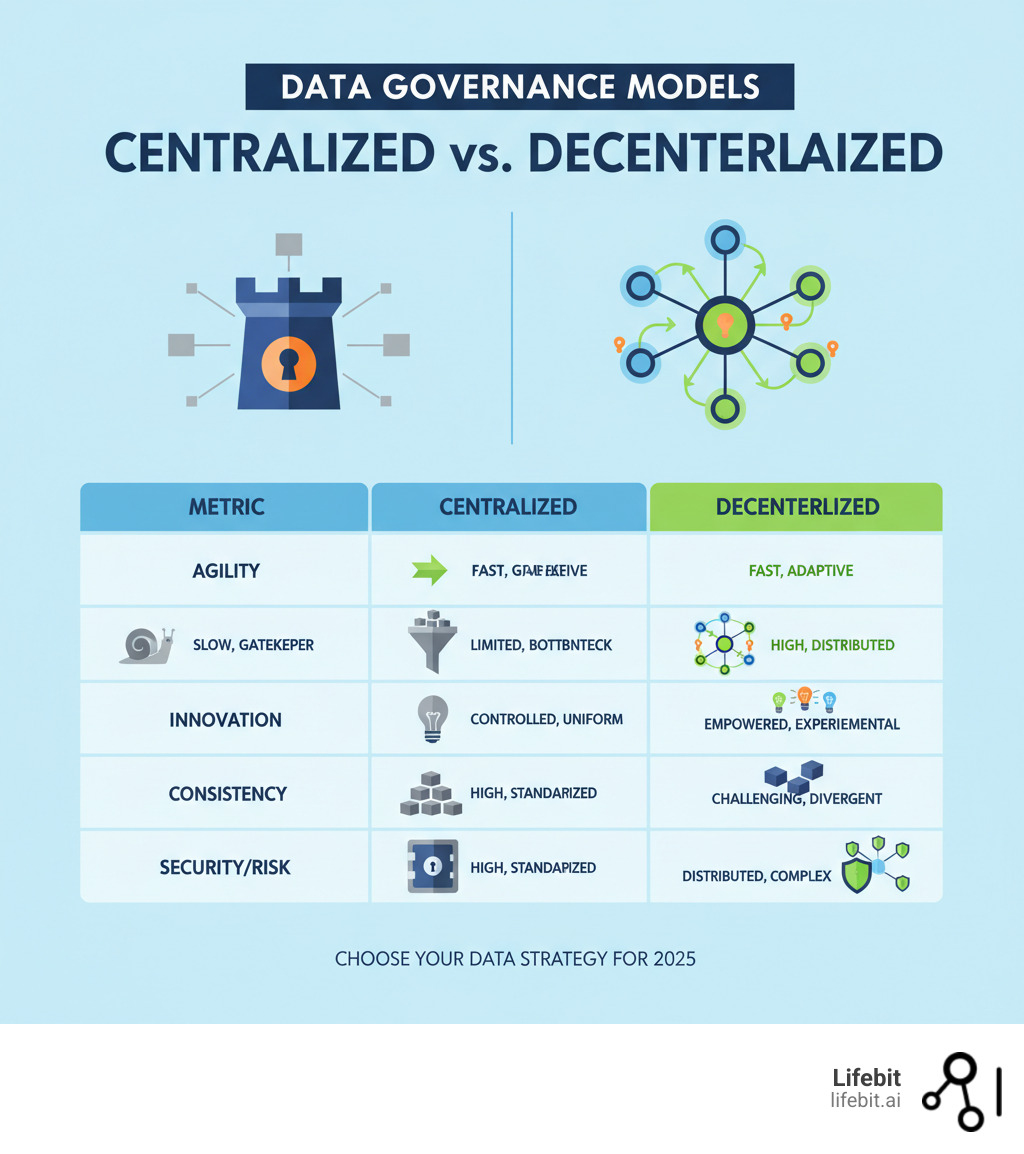

Quick Answer: Centralized vs Decentralized Data Governance

| Dimension | Centralized Governance | Decentralized Governance |
|---|---|---|
| Control | Single authority manages all data policies | Teams manage their own data domains |
| Speed | Slower decisions, potential bottlenecks | Faster decisions, more agility |
| Consistency | High standardization across the organization | Risk of inconsistent policies |
| Security | Easier to enforce uniform security | Distributed attack surface |
| Innovation | May stifle innovation with bureaucracy | Encourages experimentation |
| Best for | Highly regulated industries, large enterprises | Fast-moving organizations, diverse business units |

Both approaches can work, and both can fail. Centralization reduces risk but can bottleneck research and AI initiatives. Decentralization unlocks speed but can create silos and compliance gaps. In pharma, healthcare, and government, the wrong call can slow discoveries, jeopardize patient safety, and rack up fines.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. After 15+ years in genomics and healthcare, I’ve seen how the right model accelerates science while preserving security and compliance.

Centralized Fortress Governance: Stop Expensive Errors Before They Spread

Picture your most valuable data in a fortified command center. That’s centralized data governance: one accountable team sets policies, grants access, and enforces a single source of truth across the organization. It’s clear who’s in charge and where to go when you need data.

Benefits: Consistency, Compliance, and Risk Reduction

Streamlined oversight delivers standardized policies across every team and system.

Easier compliance with regulations like GDPR and HIPAA: one team updates controls once, everywhere.

Higher data quality from experts dedicated to standards, lineage, and trust.

Less duplication by consolidating sources and tooling, cutting cost and confusion.

Georgia-Pacific is a real-world example: with centralized governance, they unified quality measurement and optimized supply chains across countries and business lines.

Drawbacks: Bottlenecks, Bureaucracy, and Missed Opportunities

Slow decisions can delay AI projects and research timelines.

Bureaucratic drag discourages experimentation when approvals take weeks.

The risk of data silos rises when teams hoard data to avoid red tape.

A single point of failure can halt research and reporting if the central platform goes down.

Limited domain context can produce policies that look right but hinder real clinical or scientific workflows.

Decentralized Frontier Governance: Ship Faster, Innovate More

Swap the fortress for a network of expert outposts. Decentralized data governance lets domains own their data, make local decisions, and adapt fast. Research, clinical, and product teams govern differently, and that’s the point.

Benefits: Agility, Scale, and True Ownership

Faster decisions without a central queue.

Innovation thrives when teams can test tools and methods on their timeline.

Scales naturally as data and teams grow; no single bottleneck.

Clear ownership and accountability improve stewardship and quality.

Resilience increases: one domain’s outage doesn’t take down the whole org.

Drawbacks: Chaos, Complexity, and Security Gaps

Data chaos from conflicting definitions and metrics undermines trust.

Worse data silos and incompatible formats block collaboration.

Security gaps multiply when controls vary by team, expanding the attack surface.

Integration headaches slow company-wide analytics and inflate costs.

Duplicated work is common without visibility. Brainly learned this the hard way: early decentralization bred chaos until clearer ownership and coordination were introduced.

Centralized vs. Decentralized: The Showdown That Impacts Your Bottom Line

Here’s where the rubber meets the road. The choice between centralized vs decentralized data governance isn’t just about IT architecture—it’s about whether your organization thrives or struggles in the next five years. Every dimension we examine reveals trade-offs that directly impact your operations, security posture, and ultimately, your success.

Scalability, Efficiency, and Speed

Think of centralized systems like a single highway toll booth during rush hour. When traffic is light, cars zip through quickly. But as volume increases, that single checkpoint becomes a nightmare bottleneck.

Centralized governance works beautifully for smaller datasets and simpler operations. You get clean decision-making and streamlined processes. But remember that 180 zettabytes heading our way by 2025? That’s when centralized systems start buckling under pressure. The central team becomes the gatekeeper for everything—data access requests, new tool approvals, schema changes. What once took hours now takes weeks. Innovation grinds to a halt because every experiment needs approval from the data governance council. Imagine a retail company wanting to launch a personalized marketing campaign. The marketing team must submit a formal request to the central data team, which joins a long queue, delaying a time-sensitive initiative by weeks or even months.

Decentralized systems handle growth like a sprawling network of side streets. Coordination gets trickier, but traffic keeps flowing. Teams can process data in parallel, make local decisions quickly, and adapt to changing needs without waiting for central approval. The marketing domain’s data team can start prototyping its campaign immediately. The logic is simple: each new domain team brings its own decision-making capacity as data grows, while a fixed-size central team only watches its queue lengthen.
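
The toll-booth analogy can be given rough numbers. The sketch below models the central governance team as a single M/M/1 queue. That model is an assumption made for illustration: requests arrive at random, there is one shared queue, and the team works at a fixed average rate. The capacity figure is hypothetical. Under this model, the average time a request spends waiting plus being served is 1 / (capacity - demand).

```python
# Illustrative M/M/1 queue model of a single central governance team.
# Assumptions: requests arrive randomly (Poisson) and are cleared from one
# queue at a fixed average rate. All figures below are hypothetical.


def mean_time_in_system(demand_per_week: float, capacity_per_week: float) -> float:
    """Average weeks a request spends queued plus being served (M/M/1: 1 / (mu - lambda))."""
    if demand_per_week >= capacity_per_week:
        # Demand at or above capacity: the backlog grows without bound.
        return float("inf")
    return 1.0 / (capacity_per_week - demand_per_week)


CAPACITY = 10.0  # hypothetical: requests the central team clears per week

for demand in (5.0, 8.0, 9.0, 9.5, 9.9):
    utilization = demand / CAPACITY
    weeks = mean_time_in_system(demand, CAPACITY)
    print(f"utilization {utilization:.0%}: ~{weeks:.1f} weeks per request")
```

What matters is the shape of the curve, not the specific numbers. At 50% utilization a request clears in about a fifth of a week. At 95% it takes about two weeks, and at 99% about ten. In this model, what relieves the queue is added capacity. Distributing decisions to domain teams helps because each team brings its own capacity, not because splitting a queue is inherently faster.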

Security, Compliance, and Privacy

Here’s where things get interesting. Both models have compelling security arguments—and both have scary vulnerabilities.

Centralized governance makes compliance officers sleep better at night. You maintain one set of policies, one audit trail, and one security framework. GDPR fines can reach €20 million or 4% of global annual turnover, whichever is higher, so this uniformity feels like a security blanket. But that same centralization creates what security experts call a “honey pot”: a single, incredibly attractive target for cybercriminals. When hackers breach your central repository, they don’t just get some data; they get all your data. A successful ransomware attack could encrypt the entire data warehouse and halt operations across the whole organization.

Decentralized systems flip this equation. A breach in one node doesn’t expose your entire data ecosystem. It’s like the difference between losing one safe versus losing the master vault. This distributed approach aligns perfectly with data sovereignty principles, where organizations maintain control over their data regardless of location. For global companies, this is a massive advantage, as it allows them to comply with laws requiring citizen data to remain within national borders without building costly, fully duplicated infrastructures.
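
The sovereignty advantage rests on moving the computation to the data rather than the data to the computation. Below is a minimal sketch under stated assumptions: the `RegionalNode` class, the age field, and the minimum-count threshold are all hypothetical. Each node returns only a count and a sum. The coordinator combines these into a global mean, so no row-level record crosses a border.

```python
from dataclasses import dataclass


@dataclass
class RegionalNode:
    """Hypothetical data node whose row-level records never leave its region."""

    region: str
    patient_ages: list[int]

    def local_summary(self) -> tuple[int, int]:
        # Only aggregates (count, sum) are returned; individual records stay in place.
        return len(self.patient_ages), sum(self.patient_ages)


def federated_mean_age(nodes: list[RegionalNode], min_count: int = 5) -> float:
    """Combine per-region aggregates into a global mean without pooling raw data."""
    total_count = 0
    total_sum = 0
    for node in nodes:
        count, subtotal = node.local_summary()
        if count < min_count:
            # Suppress small groups to reduce re-identification risk (threshold is illustrative).
            continue
        total_count += count
        total_sum += subtotal
    if total_count == 0:
        raise ValueError("no region met the minimum count threshold")
    return total_sum / total_count


nodes = [
    RegionalNode("EU", [34, 51, 47, 62, 29]),
    RegionalNode("US", [40, 45, 38, 55, 60, 49]),
    RegionalNode("UK", [70, 33]),  # below the threshold, so it is suppressed
]
print(f"federated mean age: {federated_mean_age(nodes):.1f}")
```

Production deployments typically add stronger protections on top of this. The sketch only shows the basic division of labor between local nodes and a coordinator.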

However, ensuring consistent security across dozens of autonomous teams is where many organizations stumble. One team might lag in applying critical security patches or misconfigure cloud storage permissions, creating a weak link that can compromise the entire network if teams aren’t properly coordinated through a federated framework.

For sensitive applications requiring Clinical Data Governance or a Secure Research Environment, the distributed nature of decentralized systems can actually improve security—if implemented correctly with strong federated oversight.

Data Consistency, Quality, and Integration

This is where centralized vs decentralized data governance becomes a battle between perfection and pragmatism.

Centralized systems excel at maintaining pristine data quality. When everyone follows the same rules, uses the same definitions, and updates data on the same schedule, you get beautiful consistency. Integration becomes straightforward because all data speaks the same language.

Decentralized systems face the “active user problem”—different teams defining the same metric differently, leading to conflicting reports and confused executives. Marketing says you have 100,000 active users, while Product claims 150,000, and Finance reports 75,000. Same data, different definitions, total chaos. Consider a multinational manufacturer where one plant measures ‘efficiency’ by units per hour and another by ‘uptime percentage.’ The resulting reports are incomparable, rendering enterprise-wide analytics useless without a massive, manual reconciliation effort.
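
One common remedy is to publish a single, governed definition that every team computes from, instead of letting each team hard-code its own. The sketch below uses a hypothetical event log and an `ActiveUserDefinition` class invented for illustration. The three team definitions show how the same data yields different counts. The `GLOSSARY` entry stands in for what a shared business glossary or semantic layer would hold.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical event log: (user_id, event_type, event_date).
EVENTS = [
    ("u1", "login", date(2024, 5, 30)),
    ("u2", "purchase", date(2024, 5, 29)),
    ("u3", "login", date(2024, 5, 10)),
    ("u4", "page_view", date(2024, 5, 31)),
]


@dataclass(frozen=True)
class ActiveUserDefinition:
    """One way to define 'active user'; the fields are illustrative."""

    window_days: int
    qualifying_events: frozenset[str]

    def count(self, events, as_of: date) -> int:
        # Distinct users with a qualifying event inside the look-back window.
        start = as_of - timedelta(days=self.window_days)
        return len({
            user for user, event_type, day in events
            if event_type in self.qualifying_events and start < day <= as_of
        })


# Three teams, three private definitions of the "same" metric.
marketing = ActiveUserDefinition(30, frozenset({"login", "purchase", "page_view"}))
product = ActiveUserDefinition(7, frozenset({"login", "page_view"}))
finance = ActiveUserDefinition(30, frozenset({"purchase"}))

as_of = date(2024, 5, 31)
print(marketing.count(EVENTS, as_of), product.count(EVENTS, as_of), finance.count(EVENTS, as_of))

# A shared glossary publishes ONE definition that every domain imports.
GLOSSARY = {"active_users": ActiveUserDefinition(30, frozenset({"login", "purchase"}))}
print(GLOSSARY["active_users"].count(EVENTS, as_of))
```

With the sample data, Marketing counts 4 active users, Product 2, and Finance 1, all from the same four users. Pinning the definition in one shared place, and having domains import it rather than copy it, turns three competing numbers into one.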

Achieving unified views across decentralized systems requires sophisticated metadata management and strong coordination protocols. Teams must invest heavily in shared definitions, synchronization, and data harmonization processes. The good news? Modern architectures such as the data lakehouse (see Data Lakehouse: Best Practices) can bridge these gaps. They offer unified platforms that handle both structured and unstructured data across distributed environments.

The reality is that most organizations need elements of both approaches. Pure centralization kills agility. Pure decentralization creates chaos. The winning strategy? Finding the right balance for your specific context.

The Hybrid Solution: Federated Governance and Data Mesh—Get the Best of Both Worlds

The truth is, centralized vs decentralized data governance doesn’t have to be an either-or battle. Most organizations find they need something smarter—a hybrid approach that combines the best of both worlds. This is where federated governance and Data Mesh shine, offering the control you need with the speed your teams crave.

Think of it like running a successful restaurant chain. You want consistent quality and brand standards across all locations (centralized principles), but you also need each restaurant to adapt to local tastes and operate efficiently day-to-day (decentralized execution). The magic happens when you get this balance right.

Federated Data Governance: Balance Speed and Control

Federated Data Governance is like having a wise constitutional government. A central authority sets the big-picture rules—the “constitution” of your data world—while individual teams and business units handle the day-to-day governance within those guidelines.

Here’s how it works: Your central team establishes the overarching principles, policies, and standards that everyone must follow. This “constitution” isn’t just a document; it’s a set of automated policies and shared resources. It typically includes a master business glossary, enterprise-wide security and privacy policies, approved technology standards, and clear protocols for data sharing. But the actual implementation and daily management gets distributed to the teams who know their data best.

This approach delivers remarkable results. Porto, Brazil’s major insurance and banking leader, made the switch to federated governance and boosted their data governance team’s efficiency by 40%. They empowered individual data domains to build their own pipelines while maintaining central oversight—getting faster results without sacrificing control.

Brainly, the world’s most popular education app, tells a similar story. They started with pure decentralization but hit chaos—data silos everywhere, conflicting definitions, and teams stepping on each other’s toes. Their federated approach solved these headaches by assigning clear ownership while maintaining cross-domain collaboration.

The beauty of federated governance, and of related approaches like Federated Data Analysis, is that teams can move fast without breaking things. They have the autonomy to innovate within proven guardrails, eliminating bottlenecks while maintaining the consistency and compliance your organization needs.

Data Mesh: The New Standard for Scalable, Secure Data

Data Mesh takes federated thinking even further. It’s not just a governance model—it’s a complete paradigm shift in how we think about data ownership and management.

Instead of treating data as something that gets managed by a central IT team, Data Mesh treats data as a product owned by the people who understand it best. Your sales team owns sales data, your research team owns research data, and so on. Each domain becomes a mini-business, responsible for their data’s quality, security, and usability.

Domain-oriented decentralization means each business area takes full responsibility for its analytical data. Domain teams know their data better than anyone else, so they’re best positioned to govern it effectively. Data-as-a-product thinking is a core principle. Each domain must create data assets that are discoverable, addressable, trustworthy, and interoperable, complete with clear documentation and quality metrics.
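
To make those four attributes concrete, here is a minimal sketch of the descriptor a domain team might publish with a data product. The `DataProduct` class, its fields, the address scheme, and the pass/fail rules are illustrative assumptions, not a standard Data Mesh schema.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor a domain publishes alongside its data product."""

    name: str
    domain: str  # owning domain team, accountable for quality
    owner: str
    address: str  # addressable: stable location consumers use
    schema: dict[str, str]  # interoperable: column name -> declared type
    description: str = ""  # discoverable: what the catalog shows searchers
    quality_metrics: dict[str, float] = field(default_factory=dict)  # trustworthy

    def unmet_attributes(self) -> list[str]:
        """List which product attributes are not yet satisfied (simplified rules)."""
        gaps = []
        if not self.description:
            gaps.append("discoverable")
        if not self.address:
            gaps.append("addressable")
        if not self.quality_metrics:
            gaps.append("trustworthy")
        if not self.schema:
            gaps.append("interoperable")
        return gaps


enrollment = DataProduct(
    name="trial_enrollment",
    domain="clinical",
    owner="clinical-data-team",
    address="warehouse://clinical/trial_enrollment/v1",  # hypothetical address scheme
    schema={"trial_id": "string", "site": "string", "enrolled": "int"},
)
print(enrollment.unmet_attributes())
```

Here the clinical team's product fails two checks: it has no description and no published quality metrics. A catalog or platform can flag this kind of gap before consumers stumble on it.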

The magic ingredient is self-serve data platforms that give domain teams the infrastructure and tools they need to manage their data products independently. No more waiting weeks for the central IT team to provision resources or make changes.

Federated computational governance is the linchpin that prevents chaos. It establishes an operating model where a guild of data stewards from all domains collaborates to create global rules. Crucially, these rules (e.g., for security, privacy, interoperability) are then automated and embedded directly into the self-serve platform. This ensures compliance by design, allowing for decentralized execution without sacrificing enterprise-wide standards.
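
Here is a minimal sketch of what "automated and embedded" can look like. Global rules are written as small functions that the self-serve platform runs on every publish request. The specific rules (named owner, masked PII, declared retention), the request format, and the `publish` function are hypothetical. In practice, such rules may instead live in a dedicated policy engine.

```python
from typing import Callable, Optional

# Hypothetical publish request: metadata plus a tag set for each column.
Request = dict
Policy = Callable[[Request], Optional[str]]


def require_owner(req: Request) -> Optional[str]:
    return None if req.get("owner") else "a named owner is required"


def require_masked_pii(req: Request) -> Optional[str]:
    exposed = sorted(col for col, tags in req["columns"].items()
                     if "pii" in tags and "masked" not in tags)
    return f"unmasked PII columns: {exposed}" if exposed else None


def require_retention(req: Request) -> Optional[str]:
    return None if req.get("retention_days") else "a retention period must be declared"


# Global rules agreed by the cross-domain guild of data stewards (illustrative).
GLOBAL_POLICIES: list[Policy] = [require_owner, require_masked_pii, require_retention]


def publish(req: Request) -> list[str]:
    """Run every global policy; publish only when no policy reports a violation."""
    violations = [msg for policy in GLOBAL_POLICIES if (msg := policy(req)) is not None]
    if not violations:
        print(f"published {req['name']}")
    return violations


request = {
    "name": "genomic_variants",
    "owner": "genomics-team",
    "retention_days": 365,
    "columns": {"sample_id": {"pii"}, "gene": set(), "variant": set()},
}
print(publish(request))  # blocked: sample_id is tagged PII but not masked
```

Once the steward adds a `masked` tag to `sample_id`, the same request passes. The rules live in the platform rather than in each team's head. As a result, every domain gets the same enforcement without a central approval queue.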

This approach proves especially powerful in complex fields like genomics and biomedical research, where data diversity and scale demand highly distributed yet interoperable architectures. Our work on Federated Architecture in Genomics shows how this thinking transforms scientific collaboration while maintaining the highest security and compliance standards.

The result? Teams move faster, data quality improves because owners care more, and your organization scales without hitting the bottlenecks that plague traditional centralized systems. It’s the best of both worlds—the consistency and compliance you need with the speed and innovation your teams want.

How to Pick the Right Data Governance Model—And Avoid Expensive Mistakes

Here’s the truth: there’s no magic formula for choosing between centralized vs decentralized data governance. What works for a fast-growing biotech startup won’t work for a global pharmaceutical giant. The wrong choice can cost you millions in compliance fines, missed research opportunities, and frustrated teams.

I’ve seen organizations spend years undoing the wrong governance decision. A major healthcare network chose pure centralization and watched their research teams abandon innovative projects because approval took six months. Another biotech company went fully decentralized and faced a $2 million GDPR fine because different teams had conflicting privacy policies.

The key is understanding your unique context before you commit. Gartner’s research on adaptive governance shows that successful organizations tailor their approach to their specific business context rather than following a one-size-fits-all template.

Key Factors to Weigh Before You Choose

Your organizational size and structure matter more than you might think. If you’re a large enterprise with multiple subsidiaries across different countries, pure centralization becomes a bottleneck nightmare. Your London research team shouldn’t wait for approval from headquarters in San Francisco to access routine genomic datasets. But if you’re a smaller organization with 50 employees, the overhead of a complex federated model might crush your agility.

Industry regulations can make or break your choice. In highly regulated sectors like clinical research or financial services, centralized control over critical compliance elements isn’t optional—it’s survival. HIPAA, GDPR, and FDA requirements demand consistent policies that a purely decentralized approach struggles to maintain. Yet many successful life sciences companies use federated models where central policies guide local execution.

Your data complexity and maturity level reveal whether your teams can handle distributed ownership. Organizations with sophisticated data cultures and diverse, complex datasets often thrive with Data Mesh approaches. But if your teams are still learning basic data quality principles, jumping to decentralized ownership is like giving car keys to someone who just learned to walk.

Business goals should drive everything. Are you optimizing for rock-solid consistency and risk reduction? Centralized models excel here. Prioritizing innovation speed and market responsiveness? Decentralized approaches unleash your teams. Most successful organizations today land somewhere in between with federated governance.

Your organizational culture is a critical, often overlooked, factor. A traditional, top-down, command-and-control culture will naturally resist decentralized ownership. Conversely, a culture that already embraces cross-functional collaboration and autonomy is fertile ground for a federated or Data Mesh approach. Forcing a decentralized model on a siloed organization is a recipe for failure.

Your existing technology stack and resources determine what’s actually feasible. Implementing a Data Mesh requires significant investment in self-serve platform capabilities and skilled personnel. Don’t choose an approach your infrastructure can’t support.

The Critical Role of People and Platforms

Regardless of whether you choose centralized, decentralized, or federated governance, two components are absolutely essential for success: the right people and the right platforms.

Data Stewards are the human element of governance. These are not IT staff, but subject matter experts from within the business domains who are formally responsible for the quality, definition, and lifecycle of specific data assets. In a centralized model, they advise the central team. In a federated or Data Mesh model, they are the empowered owners, accountable for their domain’s data products. Identifying, training, and empowering these stewards is one of the most important steps in building a sustainable governance program.

Data catalogs act as your organization’s data GPS system. Think of them as Google for your data—a central metadata repository that helps everyone find, understand, and trust the information they need. Modern catalogs provide automated data lineage to show where data came from, a business glossary to link technical assets to plain-language definitions, and collaboration features to certify datasets. In centralized models, catalogs provide the definitive inventory. In decentralized environments, they prevent chaos by connecting disparate data products across teams.
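
Here is a toy illustration of two catalog features mentioned above: search and lineage. The `CATALOG` dictionary, the dataset names, and the `certified` flag are hypothetical stand-ins for the metadata a real catalog stores. Lineage here is a simple breadth-first walk over recorded upstream dependencies.

```python
from collections import deque

# Hypothetical catalog entries: owner, certification status, and direct upstream sources.
CATALOG = {
    "raw_ehr_extract": {"owner": "clinical", "certified": False, "upstream": []},
    "raw_lab_results": {"owner": "lab", "certified": False, "upstream": []},
    "patient_cohort": {"owner": "clinical", "certified": True,
                       "upstream": ["raw_ehr_extract", "raw_lab_results"]},
    "trial_dashboard": {"owner": "research", "certified": True,
                        "upstream": ["patient_cohort"]},
}


def search(term: str, certified_only: bool = False) -> list[str]:
    """Find datasets by name, optionally restricted to certified ones."""
    return sorted(
        name for name, meta in CATALOG.items()
        if term in name and (meta["certified"] or not certified_only)
    )


def lineage(dataset: str) -> list[str]:
    """Breadth-first walk of upstream sources: where did this data come from?"""
    seen: set[str] = set()
    order: list[str] = []
    queue = deque(CATALOG[dataset]["upstream"])
    while queue:
        parent = queue.popleft()
        if parent in seen:
            continue
        seen.add(parent)
        order.append(parent)
        queue.extend(CATALOG[parent]["upstream"])
    return order


print(search("raw"), search("raw", certified_only=True))
print(lineage("trial_dashboard"))
```

In a federated setting, each domain would register its own entries. The lineage walk then lets a consumer trace a certified dashboard back to the raw extracts it depends on.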

Trusted Research Environments become critical when you’re handling sensitive biomedical data. These secure, controlled spaces let researchers analyze patient data, genomic information, and clinical trial results without compromising privacy or security. They enforce strict access controls, track every interaction, and enable secure collaboration across organizations.
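
Here is a deliberately small sketch of the two TRE behaviors described above. Access is limited to approved researcher/dataset pairs, and every request, granted or not, leaves an audit record. The `TrustedResearchEnvironment` class and its approval model are illustrative assumptions. Production TREs typically add much more, such as isolated compute and checks on what results may leave the environment.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class TrustedResearchEnvironment:
    """Toy TRE: only approved researcher/dataset pairs, and every request is logged."""

    approvals: dict[str, set[str]]  # researcher -> datasets approved for their project
    audit_log: list[dict] = field(default_factory=list)

    def request_access(self, researcher: str, dataset: str, purpose: str) -> bool:
        granted = dataset in self.approvals.get(researcher, set())
        # Record denials as well as grants so auditors see every interaction.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "researcher": researcher,
            "dataset": dataset,
            "purpose": purpose,
            "granted": granted,
        })
        return granted


tre = TrustedResearchEnvironment(approvals={"dr_lee": {"cohort_a"}})
tre.request_access("dr_lee", "cohort_a", "survival analysis")
tre.request_access("dr_lee", "cohort_b", "exploratory query")
for entry in tre.audit_log:
    print(entry["researcher"], entry["dataset"], "granted" if entry["granted"] else "denied")
```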

In our work with pharmaceutical companies and government health agencies, we’ve seen how Trusted Research Environments unlock the value of sensitive data while maintaining the trust that regulators and patients demand. They’re not optional; they’re the infrastructure that makes modern biomedical research possible.

These tools and roles aren’t nice-to-have add-ons. They’re the non-negotiable foundation of any effective governance strategy. Without them, even the best-designed governance model will crumble under the weight of invisible data and security gaps. For a comprehensive view of how these pieces fit together, explore our complete guide to building a Data Governance Platform.

Conclusion: Future-Proof Your Data—Act Now or Pay Later

The choice between centralized vs decentralized data governance isn’t really a choice at all—it’s a false dilemma that’s been holding organizations back. The real question isn’t which extreme to pick, but how to blend the best of both worlds to create something more powerful.

Here’s what we’ve learned: centralized governance gives you control and consistency, but it can turn your data team into a bottleneck factory. Decentralized governance unleashes speed and innovation, but it can also unleash chaos if you’re not careful. Neither pure approach survives contact with the messy reality of modern data challenges.

The organizations winning today have figured out that balance is the secret sauce. They’re adopting federated approaches that set clear global standards while empowering domain experts to move fast within those guardrails. They’re treating data as a product, not just a resource. They’re building platforms that make self-service possible without sacrificing security.

This shift isn’t just trendy; it’s essential. When you’re dealing with sensitive biomedical data that could accelerate drug discovery or improve patient outcomes, you can’t afford to choose between speed and security. You need both. That’s why Federated Learning in Healthcare is becoming the gold standard for organizations that need to collaborate across boundaries without compromising privacy.

The data tsunami heading our way—180 zettabytes by 2025, remember—won’t wait for you to figure this out. Organizations that act now to build federated, secure, and scalable data governance will thrive. Those that don’t will drown in bottlenecks, compliance headaches, and missed opportunities.

At Lifebit, we’ve spent years solving exactly this problem for biomedical organizations worldwide. Our federated platform doesn’t force you to choose between control and agility—it gives you both. We help research teams access global datasets securely, enable real-time collaboration across institutions, and maintain the highest standards of governance throughout.

The time for half-measures is over. Your competitors are already moving to federated approaches. Your researchers are already frustrated with data silos. Your compliance team is already worried about the next audit. Every day you wait is a day of missed discoveries, slower time-to-market, and increased risk.

Don’t let the complexity paralyze you. Start with the right foundation, build incrementally, and let your data governance evolve with your needs. The future belongs to organizations that can move fast without breaking things—and that future is available today.