AI in Clinical Research: Opportunities, Limitations, and What Comes Next

Your Next Drug Costs $1B and Takes 10 Years. AI Can Fix That. Here’s How.

Clinical research AI is revolutionizing how we find, test, and approve new treatments—but it’s also raising critical questions about data quality, bias, and cost. Here’s the bottom line:

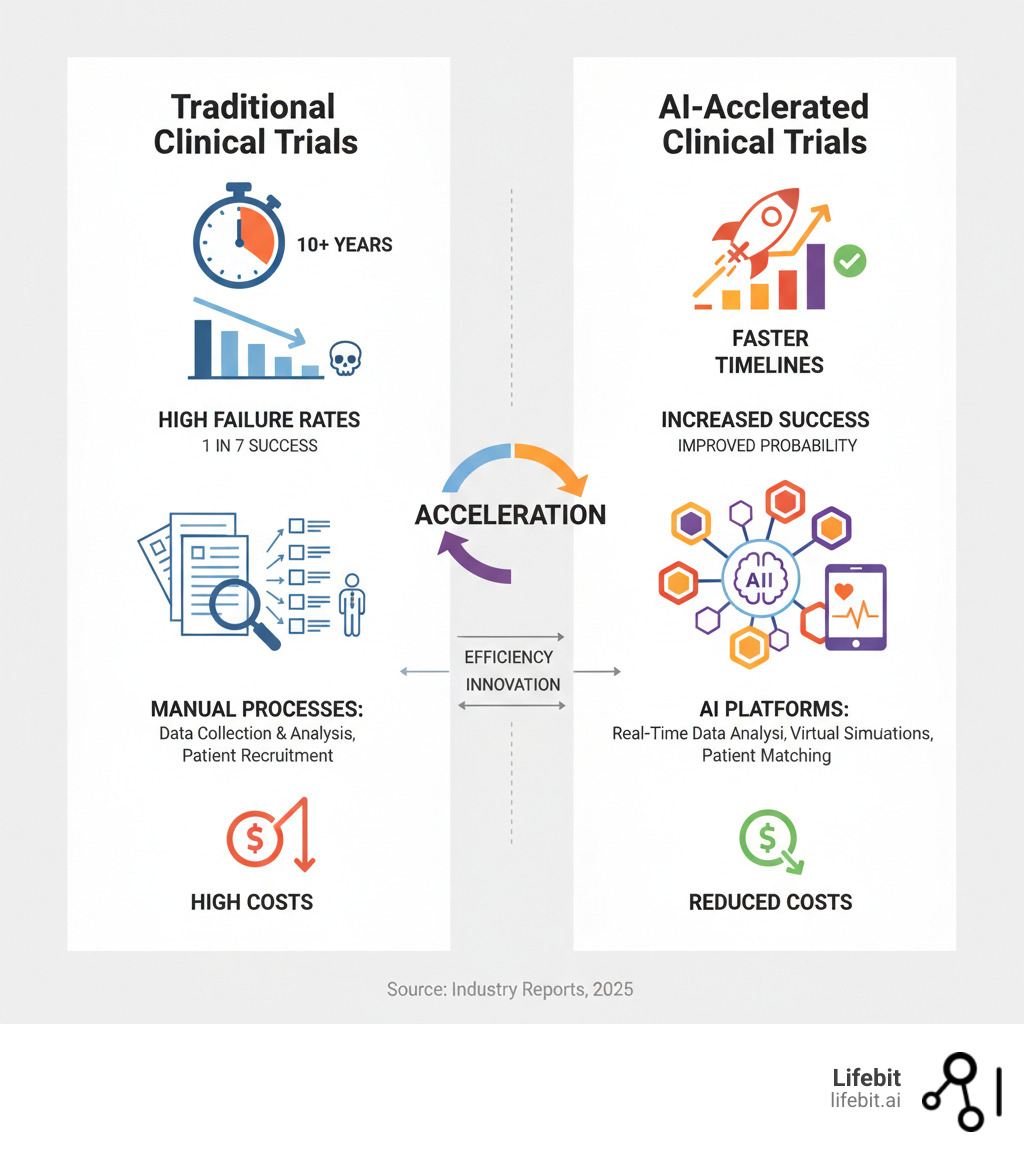

- The Gain: AI accelerates patient recruitment, optimizes trial design, and enables virtual patient simulations, potentially cutting development timelines by years.

- The Pain: Challenges include algorithmic bias, the “black box” problem, high integration costs, data privacy risks, and the need for high-quality training data.

- The Path Forward: Evolving regulations (like the EU AI Act), federated learning for secure data sharing, and industry collaboration are essential for AI’s safe and equitable deployment.

For decades, bringing a new drug to market has been painfully slow and expensive. It takes over a decade and more than a billion dollars to develop one medication, with half that time and money spent on clinical trials. Worse, only one in seven drugs entering Phase I trials ever gets approved.

This is where Clinical research AI steps in. By automating data analysis, identifying eligible patients faster, and simulating drug responses in virtual populations, AI is reshaping the entire research pipeline. But it’s not a magic bullet. The same algorithms that promise breakthroughs can amplify bias or become opaque “black boxes” that clinicians can’t trust. Success demands rigorous data governance, collaboration, and a commitment to transparency.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. We build secure, federated platforms that enable researchers to analyze sensitive clinical and genomic data at scale—without ever moving it. I’ve seen how Clinical research AI can open up breakthroughs in precision medicine, and I know what it takes to get the infrastructure, ethics, and workflows right.

From Decades to Days: How AI Is Fixing the Broken Drug Development Pipeline

Here’s a sobering reality: the number of drugs approved per billion R&D dollars has halved every nine years for the past six decades. Known as “Eroom’s Law”—Moore’s Law backwards—it captures just how broken our drug development process is.
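To make the compounding concrete, here is a minimal Python sketch of that arithmetic. It assumes a perfectly constant nine-year halving period, which is a simplification of the trend described above, and the function name is illustrative.

```python
def relative_rd_productivity(years_elapsed: float, halving_period: float = 9.0) -> float:
    """Fraction of the starting drugs-per-billion-dollars rate that remains
    after `years_elapsed`, assuming a constant halving period (illustrative)."""
    return 0.5 ** (years_elapsed / halving_period)


# After one halving period, half of the original productivity remains.
one_period = relative_rd_productivity(9)

# Six decades of halving every nine years compounds to roughly a 100-fold drop.
fold_decline_60y = 1 / relative_rd_productivity(60)
```

Under that assumption, sixty years of compounding leaves about 1% of the original output per R&D dollar.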

Clinical research AI is turning this around. By analyzing massive datasets that would take humans years to process, AI is optimizing every stage of the pipeline, from drug discovery to post-market surveillance.

AI can scan mountains of genomic data for target identification, spot disease patterns for biomarker discovery, and use predictive modeling with multi-modal data (genomics, proteomics, clinical records) to forecast a drug’s efficacy and side effects before a single dollar is spent on human trials. One of its most powerful applications is drug repurposing, where AI uncovers new uses for existing medications. For example, AI algorithms were instrumental in identifying baricitinib, an arthritis drug, as a potential treatment for COVID-19 by analyzing the biological pathways affected by the virus. This approach can save years of development time and make preclinical testing far more efficient.

Design Flawless Trials Before Day One

Designing a clinical trial is a massive puzzle. Clinical research AI acts as an expert puzzle-solver, optimizing protocols before you enroll a single patient.

AI refines eligibility criteria by analyzing historical data from thousands of past trials, which is critical when nearly a third of Phase III trials fail due to enrollment problems. It also transforms site selection by analyzing geographic, demographic, and performance data to identify centers most likely to successfully recruit and retain patients. By learning from past successes and failures, AI helps determine the right endpoints and trial duration, dramatically reducing the need for costly study amendments that delay timelines and burn budgets.

The science backs this up: research published in Nature explores this potential in detail.

Find Winning Drug Candidates in Hours, Not Years

Traditional drug discovery is a brutal slog of trial and error. Clinical research AI rewrites the rules. Through generative chemistry, AI designs novel molecules from scratch. Using models like Generative Adversarial Networks (GANs) or transformers, AI can build virtual compounds with specific desired properties, such as high binding affinity to a target protein and low predicted toxicity. It’s like having a crystal ball that shows you which compounds are worth pursuing. Companies like Insilico Medicine have already brought AI-designed drugs into human clinical trials, demonstrating a timeline from target discovery to clinical candidate in a fraction of the traditional time.

In high-throughput screening, AI analyzes vast molecular libraries at speeds no human team could match, identifying promising drug candidates in hours instead of months. This shift from physical to virtual R&D is crucial, given that 90% of new drug candidates fail in clinical development. By catching problems early and focusing resources on the most promising molecules, AI accelerates breakthroughs and saves billions.

Predict Trial Success in Real-Time

Once a trial begins, the data floods in. Clinical research AI turns this flood into clarity. Real-time data monitoring tracks trial progress, spotting trends as they emerge. Predictive analytics forecast whether a trial is likely to succeed, giving researchers the information they need to adapt, modify, or stop a study.

Most importantly, AI excels at identifying safety signals early, flagging potential adverse events before they become serious problems. The ability to analyze complex datasets from diverse sources—including genomics, imaging, and real-world data—means we can understand patient responses with unprecedented accuracy. At Lifebit, our platform provides these advanced AI/ML analytics capabilities, empowering researchers to extract meaningful insights from biomedical data. Learn more about our platform’s advanced analytics.

Stop Bleeding Money on Failed Trials: How AI Solves the 3 Biggest Bottlenecks

Clinical trials are bleeding time and money. The numbers tell a brutal story: 86% of trials miss their recruitment deadlines, and nearly a third of Phase III studies collapse because they can’t find enough patients. Even when patients enroll, poor adherence compromises study data. These aren’t abstract problems—they cost billions and delay life-saving treatments. Clinical research AI is finally offering real solutions to these long-standing bottlenecks.

Bottleneck #1: Can’t Find Patients? AI Finds Them in Hours.

Finding the right patients for a trial used to be like searching for a needle in a haystack. Clinical research AI changes everything. Instead of manually sifting through records, AI algorithms mine electronic health record (EHR) data in hours, identifying eligible patients who meet complex criteria. These systems use natural language processing (NLP) to read and understand unstructured data like clinical notes, pathology reports, and radiology findings, catching critical details that simple keyword searches miss. For an oncology trial requiring a specific genetic mutation (e.g., KRAS G12C) and a history of specific prior treatments, an AI can scan millions of records, interpreting pathology reports for the mutation and parsing clinical notes for treatment history—a task that would take a team of coordinators months to complete manually.
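As a rough illustration of that screening logic, the sketch below combines structured fields with a pattern search over free-text notes. Everything in it is a hypothetical stand-in: the `PatientRecord` fields, the ICD-10 prefix, the regular expressions, and the sample records are assumptions for the example, and production systems rely on clinical NLP models rather than regular expressions.

```python
import re
from dataclasses import dataclass


@dataclass
class PatientRecord:
    patient_id: str
    age: int
    diagnosis_codes: list[str]  # structured codes, e.g. ICD-10
    notes: str                  # free-text pathology and clinical notes


# Hypothetical patterns, chosen only for this example.
KRAS_G12C = re.compile(r"\bKRAS\b.{0,40}?\bG12C\b", re.IGNORECASE)
NEGATION = re.compile(r"\b(no|negative for|not detected|wild[- ]type)\b", re.IGNORECASE)
PRIOR_PLATINUM = re.compile(r"\b(carboplatin|cisplatin)\b", re.IGNORECASE)


def mentions_mutation(notes: str) -> bool:
    """True if any sentence mentions KRAS G12C without a crude negation cue."""
    for sentence in re.split(r"(?<=[.!?])\s+", notes):
        if KRAS_G12C.search(sentence) and not NEGATION.search(sentence):
            return True
    return False


def is_candidate(p: PatientRecord) -> bool:
    """Adult, lung cancer code (C34*), mutation mentioned, prior platinum therapy."""
    has_dx = any(code.startswith("C34") for code in p.diagnosis_codes)
    has_prior_tx = bool(PRIOR_PLATINUM.search(p.notes))
    return p.age >= 18 and has_dx and mentions_mutation(p.notes) and has_prior_tx


records = [
    PatientRecord("P001", 64, ["C34.1"],
                  "NGS panel: KRAS p.G12C mutation detected. "
                  "Completed four cycles of carboplatin in 2022."),
    PatientRecord("P002", 58, ["C34.9"],
                  "Negative for KRAS G12C. Prior cisplatin."),
    PatientRecord("P003", 71, ["C50.9"],
                  "KRAS G12C detected. Prior carboplatin."),
]

candidates = [p.patient_id for p in records if is_candidate(p)]
```

Even this toy version shows why keyword search alone falls short: the second record mentions KRAS G12C, but in a negated sentence, so it has to be excluded.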

The impact goes beyond speed. AI-powered patient-to-trial matching promotes diversity and inclusion by systematically scanning entire patient populations, ensuring treatments are developed for a broader, more representative group. By using predictive analytics to forecast enrollment rates and genomic data integration to match patients to precision medicine studies, AI delivers faster enrollment and trials that finish on schedule.

Bottleneck #2: Patients Dropping Out? AI Keeps Them Engaged.

Getting patients into a trial is only half the battle. Keeping them engaged is where many studies stumble. Clinical research AI improves adherence through digital health technologies that meet patients where they are. Digital biomarkers from wearable sensors (like smartwatches and rings) provide continuous, objective data on everything from heart rate and activity levels to sleep patterns and temperature. Smart pill bottles send reminders for missed doses, and AI-powered voice assistants or chatbots check in with patients, answering questions and flagging concerns for clinical staff before they lead to dropouts.

This technology is the backbone of Decentralized Clinical Trials (DCTs), where many trial activities happen in patients’ homes rather than a clinic. This flexibility is a game-changer, reducing the burden on participants and broadening access to trials. AI is essential for making sense of the massive, continuous data streams from these devices, detecting subtle changes in a patient’s condition that might not be caught during periodic clinic visits. The FDA’s guidance on biomarkers recognizes the growing importance of these digital tools. At Lifebit, our federated AI platform supports the integration and analysis of this complex data, creating a complete picture of patient health without compromising privacy.
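One simple way to picture this kind of change detection is a rolling baseline check, sketched below. The window length, threshold, and heart-rate values are illustrative assumptions, not clinical recommendations, and real digital-biomarker pipelines use far richer models.

```python
from statistics import mean, stdev


def flag_deviations(values: list[float], window: int = 7, z_threshold: float = 3.0) -> list[int]:
    """Return the indices whose value deviates from the preceding `window`
    readings by at least `z_threshold` sample standard deviations."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) / sigma >= z_threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily resting heart rate (beats per minute) from a wearable.
resting_hr = [62, 61, 63, 62, 60, 61, 62, 63, 61, 78, 62]
alerts = flag_deviations(resting_hr)
```

Here only the sudden jump to 78 bpm is flagged for review; the day after it is not, because the spike has already widened the rolling baseline.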

Bottleneck #3: Guessing at Efficacy? AI Tests Drugs on Virtual Patients First.

What if you could test a new drug on thousands of virtual patients before giving it to a single real person? This is the power of digital twins, one of the most exciting applications of Clinical research AI. These are virtual models of patients, built from real-world data (EHRs, genomics, imaging), that allow researchers to simulate how a drug will behave in different bodies through in-silico trials.

The implications are profound. Digital twins can create synthetic control arms, which are virtual placebo groups modeled on real-world patient data. This can dramatically accelerate trials and reduce the number of patients who need to receive a placebo. For rare diseases, where recruiting a large control group is nearly impossible, or in oncology, where giving a placebo can be ethically challenging, virtual populations can supplement or even replace traditional control arms. Researchers can test dosing strategies, identify patient subgroups most likely to benefit, and optimize trial designs—all in a risk-free virtual environment. At Lifebit, our Trusted Research Environment (TRE) provides the secure analytical infrastructure for these advanced simulations, ensuring sensitive patient data remains protected while enabling transformative research.
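One basic building block for an externally controlled arm is matching each trial participant to a similar real-world patient. The sketch below shows greedy nearest-neighbour matching on two covariates. It is not a digital twin model, and the identifiers, covariates, and scaling are assumptions made for the example.

```python
def squared_distance(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    """Squared Euclidean distance between two covariate vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def match_controls(treated: dict[str, tuple[float, ...]],
                   pool: dict[str, tuple[float, ...]]) -> dict[str, str]:
    """Greedy 1:1 nearest-neighbour matching without replacement."""
    available = dict(pool)  # copy, so the caller's pool is left untouched
    matches = {}
    for treated_id, covariates in treated.items():
        best = min(available, key=lambda cid: squared_distance(covariates, available[cid]))
        matches[treated_id] = best
        del available[best]  # each real-world patient is used at most once
    return matches


# Hypothetical covariates: (age in decades, performance status score).
treated = {"T1": (6.4, 1), "T2": (5.1, 0)}
real_world_pool = {"R1": (5.0, 0), "R2": (6.5, 1), "R3": (7.9, 2)}

matches = match_controls(treated, real_world_pool)
```

Greedy matching depends on the order in which participants are processed, which is one reason real studies tend to use propensity scores or optimal matching instead.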

The 3 AI Roadblocks Costing You Billions (And How to Smash Them)

Let’s be honest: Clinical research AI isn’t a silver bullet. For every breakthrough, there’s a sobering reality check. Data quality issues can derail algorithms, algorithmic bias can worsen health disparities, and integration costs can blow budgets wide open. These are fundamental obstacles that demand thoughtful solutions. Ignoring them means wasting billions and delaying treatments patients desperately need.

Roadblock #1: The “Black Box”—Why Won’t Clinicians Trust Your AI?

Imagine an AI system recommends excluding a patient from a trial, but the only reason is “because the algorithm said so.” This is the infamous “black box” problem. Many advanced AI models, particularly deep learning networks, are brilliant at finding patterns but terrible at explaining how they reached a conclusion. This opacity is a dealbreaker in clinical research, where every decision must be justifiable, auditable, and trustworthy.

Enter Explainable AI (XAI), a field focused on making AI models transparent. XAI techniques reveal how a model makes its decisions. For example, methods like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) can highlight which specific data points—such as a particular lab value, a phrase in a clinical note, or a region in an MRI scan—most heavily influenced the AI’s prediction. This allows a clinician to review the AI’s “reasoning” and either confirm or challenge its conclusion based on their own expertise. When patient lives are at stake, “trust the algorithm” is not good enough.
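To show what an attribution method computes, the sketch below calculates exact Shapley values for a tiny, hypothetical risk model against a single baseline patient. It does not use the `shap` library; it enumerates every feature subset directly, which is only feasible for a handful of features. The model weights and feature values are invented for illustration.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(predict: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> list[float]:
    """Exact Shapley attribution of predict(x) - predict(baseline) to each feature."""
    n = len(x)

    def value(subset: frozenset) -> float:
        # Features in `subset` take the patient's value, the rest the baseline's.
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def risk_model(features: Sequence[float]) -> float:
    """Hypothetical linear risk score: age, creatinine, prior events."""
    age, creatinine, prior_events = features
    return 0.03 * age + 0.5 * creatinine + 0.8 * prior_events


patient = [70, 1.8, 2]
reference = [55, 1.0, 0]
contributions = shapley_values(risk_model, patient, reference)
```

The contributions sum to the gap between the patient's score and the reference score, so a clinician can see how much of the prediction each input accounts for.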

Roadblock #2: Your Data Is Biased—And It’s Poisoning Your AI

There’s an uncomfortable truth in healthcare AI: our training data has a serious diversity problem. For example, over 80% of participants in genome-wide association studies (GWAS) are of European descent. When we train AI on these lopsided datasets, we teach them to be experts on one population while remaining ignorant about others. This is an ethical failure and a scientific one.

AI trained on non-representative data can produce less accurate results for underrepresented groups, reinforcing existing health disparities. The challenge of data privacy and security adds another layer of complexity. Clinical data is deeply personal and must be protected under regulations like GDPR and HIPAA. To combat bias, we need not only more diverse data collection but also algorithmic fairness techniques, such as re-weighting data or adversarial debiasing, to actively correct for imbalances during model training. We need ironclad data governance and secure access protocols that protect privacy without stifling research. Lifebit’s federated approach addresses this by enabling analysis without moving sensitive data from its source.
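Re-weighting can be as simple as giving each sample a weight inversely proportional to the size of its group, so that every group contributes equally to the training loss. The sketch below is a minimal version under that assumption; the group labels and counts are made up.

```python
from collections import Counter


def inverse_frequency_weights(groups: list[str]) -> list[float]:
    """Weight each sample by n_samples / (n_groups * group_size)."""
    counts = Counter(groups)
    n_samples, n_groups = len(groups), len(counts)
    return [n_samples / (n_groups * counts[g]) for g in groups]


# Hypothetical ancestry labels for a cohort that is 80% one group.
ancestry = ["EUR"] * 8 + ["AFR"] + ["EAS"]
weights = inverse_frequency_weights(ancestry)

# Total weight carried by each group after re-weighting.
weight_per_group: dict[str, float] = {}
for group, w in zip(ancestry, weights):
    weight_per_group[group] = weight_per_group.get(group, 0.0) + w
```

After re-weighting, each group carries the same total weight. That balances influence during training, but it cannot create information that the underrepresented samples do not contain, which is why more diverse data collection is still needed.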

Roadblock #3: The Sticker Shock—Why AI Implementation Fails on Day One

Let’s talk money. Implementing Clinical research AI is a massive, expensive undertaking. The high implementation costs include specialized hardware (like GPUs), software licensing, and cloud infrastructure. But the biggest hidden cost is often integrating new technology with legacy systems that weren’t built for AI.

A talent shortage of data scientists and bioinformaticians with deep healthcare expertise makes everything more expensive. Furthermore, data interoperability challenges present a huge obstacle. Clinical data often lives in dozens of incompatible formats and siloed systems (EHRs, LIMS, imaging archives) using different standards like HL7v2, CDA, and FHIR. The cost isn’t just buying software; it’s the massive data engineering effort to build pipelines that aggregate, clean, and harmonize this data into an analysis-ready format. Finally, there’s the question of scalability. A pilot project might work, but scaling to millions of patients requires infrastructure decisions that can lock you into expensive, inflexible solutions. Success requires strategic planning and scalable, flexible platforms designed to integrate with existing infrastructure and operate across data silos.
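As a small illustration of what harmonization involves, the sketch below maps the same patient from an HL7v2 PID segment and from a FHIR Patient resource into one common record. The target schema, the sample messages, and the function names are assumptions for the example; real pipelines handle repeated fields, escaping, and many more resource types.

```python
import json

# HL7v2 administrative sex codes mapped to FHIR gender codes.
HL7_SEX_TO_GENDER = {"F": "female", "M": "male", "O": "other", "U": "unknown"}


def from_hl7v2_pid(segment: str) -> dict:
    """Parse a pipe-delimited PID segment (PID-3 id, PID-5 name, PID-7 DOB, PID-8 sex)."""
    fields = segment.split("|")
    family, given = (fields[5].split("^") + [""])[:2]
    dob = fields[7]  # YYYYMMDD
    return {
        "patient_id": fields[3].split("^")[0],
        "family_name": family.title(),
        "given_name": given.title(),
        "birth_date": f"{dob[:4]}-{dob[4:6]}-{dob[6:8]}",
        "gender": HL7_SEX_TO_GENDER.get(fields[8], "unknown"),
    }


def from_fhir_patient(resource_json: str) -> dict:
    """Parse a FHIR Patient resource into the same common record."""
    resource = json.loads(resource_json)
    name = resource["name"][0]
    return {
        "patient_id": resource["identifier"][0]["value"],
        "family_name": name["family"],
        "given_name": name["given"][0],
        "birth_date": resource["birthDate"],
        "gender": resource.get("gender", "unknown"),
    }


# Hypothetical sample messages describing the same person.
pid_segment = "PID|1||12345^^^HOSP^MR||DOE^JANE||19800101|F"
fhir_patient = json.dumps({
    "resourceType": "Patient",
    "identifier": [{"system": "urn:example:mrn", "value": "12345"}],
    "name": [{"family": "Doe", "given": ["Jane"]}],
    "gender": "female",
    "birthDate": "1980-01-01",
})

record_a = from_hl7v2_pid(pid_segment)
record_b = from_fhir_patient(fhir_patient)
```

Both sources end up as identical records, which is the precondition for any analysis that spans systems.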

Don’t Get Fined into Oblivion: A 3-Step Framework for Trustworthy AI

The promise of Clinical research AI is extraordinary, but promise alone isn’t enough. We need guardrails. We’re moving faster than our regulatory and ethical frameworks can keep pace, which means we have a responsibility to build the policies and networks that ensure AI serves everyone safely. This isn’t about slowing progress; it’s about making it sustainable and trustworthy.

Step 1: Master the Regulatory Minefield (FDA & EU AI Act)

Regulators are catching up to Clinical research AI. The EU’s AI Act takes a risk-based approach, setting strict requirements for high-risk systems, which include many healthcare applications. It’s about ensuring any tool that influences clinical decisions is vetted for safety, transparency, and fairness. An overview of the EU AI Act is a good starting point for the details.

In the US, the FDA published its AI/ML-Based Software as a Medical Device Action Plan in 2021, recognizing that algorithms evolve over time. The framework emphasizes continuous monitoring and real-time oversight, acknowledging that an AI model approved today might behave differently tomorrow. This adaptive approach is crucial for balancing innovation with patient protection. Clear regulations give organizations the confidence to invest, knowing the rules of the game.

Step 2: Build an Ethical Moat Around Your Data

Regulations tell us what we must do; ethics tells us what we should do. For Clinical research AI, the ethical considerations are profound. Informed consent requires transparently explaining how AI is being used and what data it’s analyzing. Patients deserve to know when an algorithm is involved in their care.

Data privacy and security are non-negotiable. Protecting personal data under HIPAA and GDPR is a fundamental ethical obligation. Every data breach erodes public trust. Then there’s algorithmic accountability. If an AI system makes a recommendation, someone must be able to explain why. This requires proactive bias mitigation strategies, like training models on diverse datasets and involving ethicists and patient advocates in development. As highlighted in global health discussions, trust and data integrity are essential for responsible AI deployment in healthcare.

Step 3: Stop Working in a Silo—Collaborate or Die

No single company, university, or government can solve the challenges of Clinical research AI alone. We must work together. Industry-academia partnerships are critical for bridging the gap between research and practical application. Precompetitive consensus building is also vital, where companies agree on shared standards and best practices before competing on solutions.

Data-sharing initiatives are the most powerful catalyst for progress, but privacy is a major hurdle. This is where federated learning models come in, allowing researchers to analyze data across institutions without moving or exposing it. At Lifebit, this is exactly what our platform enables: secure, collaborative analysis of global biomedical data while maintaining strict privacy and governance. The future of clinical research will be built through knowledge sharing, open science, and a collective commitment to patients. Explore our Real-World Evidence solutions to see how we’re making this vision a reality.
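The core idea of federated learning can be sketched in a few lines: each site fits a model on its own data and shares only the fitted parameter and a sample count, and a coordinator averages the parameters weighted by sample size (the aggregation step of federated averaging). This is an illustrative toy, not a description of Lifebit’s platform; the one-parameter model and the site data are assumptions.

```python
def local_fit(xs: list[float], ys: list[float]) -> tuple[float, int]:
    """Runs inside a site. Fits y = w * x by least squares and returns
    only the slope and the number of samples, never the raw records."""
    w = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    return w, len(xs)


def federated_average(updates: list[tuple[float, int]]) -> float:
    """Runs at the coordinator. Averages site parameters weighted by sample count."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


# Hypothetical data held at two separate institutions.
site_a = local_fit([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
site_b = local_fit([1.0, 2.0], [3.0, 6.0])

global_w = federated_average([site_a, site_b])
```

The averaged parameter generally differs from what a pooled fit would produce, and practical systems run many rounds and add protections such as secure aggregation. The point here is only that the raw rows never leave their site.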

Your Top 3 Questions About Clinical Research AI, Answered

Let’s tackle some of the most common questions about Clinical research AI and how this technology is reshaping the future of medicine.

How does AI help find patients for clinical trials?

Clinical research AI transforms patient recruitment by analyzing electronic health records (EHRs), genomic data, and other sources to quickly identify individuals who meet complex eligibility criteria. Instead of taking months to manually review records, AI algorithms can scan vast datasets in hours. This not only speeds up recruitment—a major bottleneck where 86% of trials face delays—but also improves the diversity of trial populations by identifying eligible patients across different demographics and locations that might otherwise be overlooked.

What are ‘digital twins’ in clinical research?

A digital twin is a virtual copy of a patient or an entire patient population, built from real-world data. These sophisticated computational models mirror the biology, disease progression, and potential drug responses of actual individuals. Clinical research AI uses these digital twins to run virtual experiments, testing different doses and patient types to predict outcomes and optimize trial design before human testing even begins. This is especially valuable for rare diseases where patient recruitment is difficult and helps address the 90% failure rate of new drugs by identifying problems earlier in development.

Is AI regulated in medical research?

Yes, and the regulatory landscape is evolving rapidly. Both the FDA in the US and the European Union (with its AI Act) are establishing frameworks to govern the use of Clinical research AI. These regulations focus on safety, efficacy, and risk management. The EU’s AI Act categorizes AI systems by risk level, imposing strict requirements on high-risk tools used in clinical decision-making. The FDA’s AI/ML Action Plan uses a flexible, risk-based approach to promote innovation while ensuring patient safety through continuous monitoring. This oversight is essential for building trust and ensuring AI delivers on its promise to improve healthcare.

The Future Is Here: How to Deploy AI in Clinical Research, Responsibly

We stand at a remarkable inflection point. Clinical research AI is fundamentally reshaping how we find, test, and deliver treatments. We’ve seen how it slashes drug development timelines, solves recruitment bottlenecks, and enables virtual patient simulations that once seemed like science fiction.

The opportunities are staggering, but the challenges—the “black box” problem, data bias, and high implementation costs—are just as real. These aren’t reasons to stop; they’re reasons to be thoughtful and rigorous.

What gives me hope is the framework we’re building together. Regulatory bodies are establishing clear guidelines through the EU AI Act and the FDA’s AI/ML Action Plan. Researchers are developing explainable AI, and global health leaders are defining ethical standards for data use and accountability. The industry is recognizing that collaboration through open science and federated learning is the only way forward.

The missing piece? Secure, scalable infrastructure that makes responsible AI deployment possible. This is where Lifebit becomes essential. Our federated AI platform is built to solve these challenges, enabling researchers to analyze sensitive data without moving it, harmonize disparate datasets, and gain real-time insights.

- Our Trusted Research Environment (TRE) ensures genomic and clinical data remains protected.

- Our Trusted Data Lakehouse (TDL) breaks down data silos without compromising security.

- Our federated governance model allows biopharma, governments, and public health organizations to collaborate globally while maintaining full control over their data.

We are building the foundation for a future where Clinical research AI delivers on its promise safely and equitably. A future where trials are faster, breakthroughs cost less, and treatments reach more people. That future is within reach, but it requires a commitment to transparency, collaboration, and the right infrastructure.

Learn more about federated AI platforms and how they’re enabling this change, or explore our Real-World Evidence solutions, which are already powering research across continents. Let’s build this future together.