Don’t Get Lost in Data – Best Data Linking Software Picks

Data Linking Software: Unify Your Data in 2025

What is Data Linking Software?

Data linking software is a specialized tool designed to connect and combine related data from different sources. It helps identify and merge records that refer to the same person, object, or event, even when information is incomplete or inconsistent. Think of it as a sophisticated digital detective that solves a giant puzzle where the pieces are scattered across your entire organization. This software finds the true matches, turning messy, siloed information into a clear, connected picture.

Its main purpose is to:

- Resolve fragmented data: Bring together information scattered across various databases, applications, and cloud services.

- Eliminate duplicates: Identify and remove redundant records (deduplication) both within a single dataset and across multiple datasets.

- Create a single source of truth: Build a complete, unified, and reliable view of key business entities, often called a “golden record.”

- Improve data quality: Increase the accuracy, completeness, and consistency of data, making it trustworthy for critical operations and analytics.

The Hidden Costs of Disconnected Data

Fragmented data isn’t just an inconvenience; it carries significant and often hidden costs that impact every part of an organization. When data is siloed and inconsistent, businesses suffer from:

- Operational Inefficiency: Employees waste countless hours manually cross-referencing spreadsheets and searching for correct information, leading to lost productivity and increased operational overhead.

- Flawed Business Intelligence: Decisions based on incomplete or inaccurate data can lead to misguided strategies. A marketing team might target the same customer multiple times with different offers, wasting budget and creating a poor customer experience. A supply chain manager might miscalculate inventory needs due to duplicate supplier records.

- Compliance and Security Risks: In regulated industries like finance and healthcare, failing to identify all data related to a single individual can lead to severe compliance violations (e.g., being unable to fully honor a GDPR “right to be forgotten” request). It also makes it harder to detect fraud, as malicious actors often exploit fragmented systems.

- Poor Customer Experience: When a customer service agent doesn’t have a complete view of a customer’s history—including past purchases, support tickets, and interactions—it results in frustrating, repetitive conversations and erodes customer loyalty.

Data linking software directly addresses these issues by creating a complete, 360-degree view of customers, patients, or operations, enabling reliable business insights and better decisions.

The Power of a Single Source of Truth

The ultimate goal of data linking is to establish a single source of truth (SSoT). An SSoT is a trusted, consolidated data repository that provides a holistic view of each entity. This becomes the definitive, go-to source for all departments, ensuring everyone is working from the same information. The benefits are transformative:

- For Analytics and AI: Machine learning models and AI algorithms thrive on clean, integrated data. An SSoT provides the high-quality fuel needed for accurate predictions, effective personalization, and reliable insights.

- For Business Operations: It streamlines processes from sales and marketing to finance and support. For example, with a single customer view, a sales team can see every touchpoint and tailor their approach accordingly.

- For Strategic Decision-Making: Leadership can confidently make strategic decisions knowing that the underlying data is complete and trustworthy, eliminating guesswork and internal disputes over which numbers are “correct.”

I’m Dr. Maria Chatzou Dunford, CEO and Co-founder of Lifebit, and I’ve spent over 15 years building cutting-edge tools for precision medicine and biomedical data integration, directly tackling the complexities of data linking to empower data-driven discoveries. This article will guide you through the best data linking software options available to help you unify your fragmented data landscape.

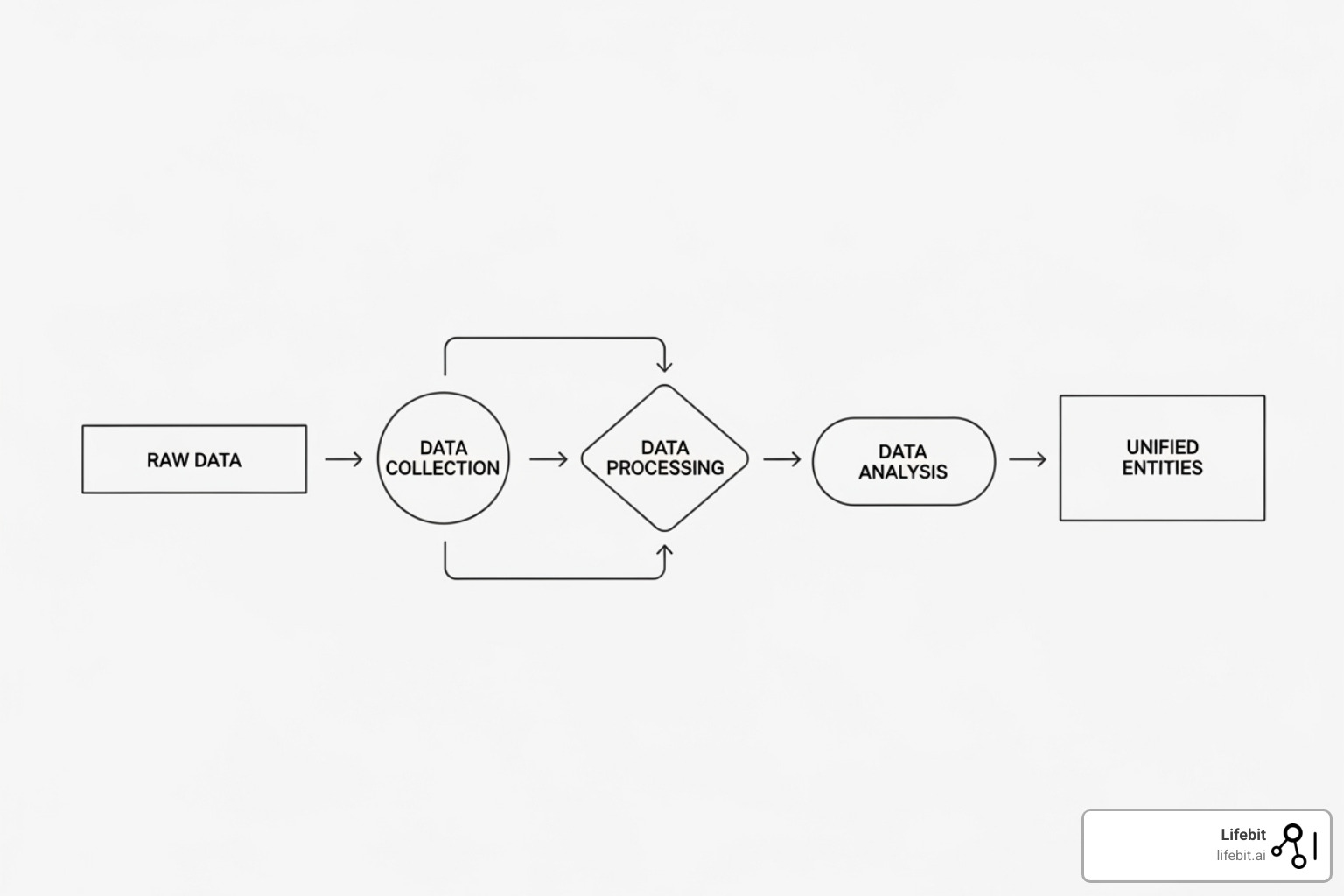

The Data Linking Process: From Raw Data to Unified Entities

The data linking process methodically organizes, cleans, and connects scattered pieces of data into a complete picture. This systematic approach is crucial, as siloed information creates real costs. Studies show 65% of organizations face linking issues during data migrations, and nearly 40% lose data from broken connections. The journey from messy data to a unified dataset involves five key steps, a data harmonization process (see Data Harmonization: Overcoming Challenges) that transforms chaos into clarity.

Step 1: Data Pre-processing and Standardization

This foundational step prepares raw, messy data for the linking engine. It’s the “clean room” where data is made consistent and usable. Key activities include:

- Data Cleansing: This involves identifying and correcting errors, inconsistencies, and inaccuracies. For example, an address field might contain “St.”, “St”, and “Street” for the same road; cleansing standardizes these to a single format. It also handles null values and corrects obvious typos.

- Formatting and Standardization: All data is converted into a uniform structure. This means ensuring all dates follow the same format (e.g., YYYY-MM-DD), phone numbers are stripped of special characters (e.g., (555) 123-4567 becomes 5551234567), and text is converted to a consistent case (e.g., lowercase).

- Parsing: Complex data fields are broken down into their constituent parts. A single “full name” field might be parsed into “first name,” “middle name,” and “last name.” Similarly, a full address string is parsed into street number, street name, city, state, and postal code. This allows for more granular and accurate field-level comparisons later.

- Data Enrichment: Missing information is filled in by leveraging external or internal data sources. For instance, a record with only a postal code could be enriched with city and state information. This adds valuable context and improves the chances of finding a correct match.
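The cleansing, standardization, and parsing steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the abbreviation table, the accepted date formats, and the naive name splitter are hypothetical simplifications (real systems handle titles, suffixes, locales, and far larger reference tables), and enrichment is left out.

```python
import re
from datetime import datetime
from typing import Optional

# Hypothetical suffix table; real systems use much larger, locale-specific lists.
STREET_SUFFIXES = {"st": "street", "st.": "street", "ave": "avenue",
                   "ave.": "avenue", "rd": "road", "rd.": "road"}

# Date formats assumed to appear in the source systems (tried in order).
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y")


def normalize_address(address: str) -> str:
    """Lowercase, collapse whitespace, and expand common street suffixes."""
    tokens = address.lower().split()
    return " ".join(STREET_SUFFIXES.get(tok, tok) for tok in tokens)


def normalize_phone(phone: str) -> str:
    """Keep digits only, so '(555) 123-4567' becomes '5551234567'."""
    return re.sub(r"\D", "", phone)


def normalize_date(value: str) -> Optional[str]:
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    return None


def parse_full_name(full_name: str) -> dict:
    """Naively split 'First [Middle ...] Last'; ignores titles and suffixes."""
    parts = full_name.lower().split()
    return {
        "first_name": parts[0] if parts else "",
        "middle_name": " ".join(parts[1:-1]),
        "last_name": parts[-1] if len(parts) > 1 else "",
    }


print(normalize_address("123 Main St."))   # 123 main street
print(normalize_phone("(555) 123-4567"))   # 5551234567
print(normalize_date("03/15/2024"))        # 2024-03-15
print(parse_full_name("Jane M. Smith"))
```

Because “St”, “St.”, and “Street” all normalize to the same token, later comparisons see identical values instead of near-misses.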

In healthcare, this standardization is vital. As explained in What is Health Data Standardisation?, frameworks like OMOP and HL7 ensure that clinical data from different electronic health record (EHR) systems can be reliably aggregated and linked.

Step 2: Indexing and Blocking

To avoid the immense computational complexity of comparing every single record with every other record (an O(n²) problem), this step intelligently narrows down the search space. Indexing and blocking group records into smaller, manageable buckets where matches are likely to occur. This improves efficiency by orders of magnitude.

- Blocking Keys: Logical groups, or “blocks,” are created based on one or more attributes. For example, a simple blocking key could be the first three letters of a last name and the five-digit zip code. Only records within the same block (e.g., “SMI-90210”) are compared against each other. The challenge is to create blocks that are small enough to be efficient but large enough to not miss potential matches that have minor variations in the blocking key.

- Phonetic Indexing: This technique groups records that sound similar, which is crucial for names that are often misspelled. Algorithms like Soundex or Metaphone convert a name like “Johnson” and its misspelling “Jonson” into the same phonetic code, ensuring they land in the same block for comparison.

This step can turn a task involving billions of potential comparisons into a far smaller set of meaningful ones, dramatically increasing speed while keeping the loss of true matches small, provided the blocking keys are well chosen.
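Here is a minimal sketch of phonetic blocking, assuming records are plain dictionaries with hypothetical `last_name` and `zip` fields. It implements American Soundex and combines it with the five-digit ZIP code as the blocking key (a variant of the key described above that swaps the first three letters of the last name for its Soundex code), then generates candidate pairs only within each block.

```python
from collections import defaultdict
from itertools import combinations

# American Soundex digit classes; vowels, y, h, and w have no code.
SOUNDEX_CODES = {ch: digit
                 for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"),
                                        ("dt", "3"), ("l", "4"),
                                        ("mn", "5"), ("r", "6"))
                 for ch in letters}


def soundex(name: str) -> str:
    """Return the 4-character American Soundex code, e.g. 'Johnson' -> 'J525'."""
    letters = [ch for ch in name.lower() if ch.isalpha()]
    if not letters:
        return ""
    code = letters[0].upper()
    prev = SOUNDEX_CODES.get(letters[0], "")
    for ch in letters[1:]:
        digit = SOUNDEX_CODES.get(ch, "")
        if digit and digit != prev:
            code += digit
        if ch not in "hw":  # h/w do not separate equal codes; vowels do
            prev = digit
        if len(code) == 4:
            break
    return code.ljust(4, "0")


def blocking_key(record: dict) -> str:
    """Hypothetical key: Soundex of the last name plus the 5-digit ZIP code."""
    return f"{soundex(record['last_name'])}-{record['zip'][:5]}"


def candidate_pairs(records: list) -> list:
    """Group record indices by blocking key and pair them only within a block."""
    blocks = defaultdict(list)
    for idx, record in enumerate(records):
        blocks[blocking_key(record)].append(idx)
    pairs = []
    for members in blocks.values():
        pairs.extend(combinations(members, 2))
    return pairs


records = [
    {"last_name": "Johnson", "zip": "90210"},
    {"last_name": "Jonson", "zip": "90210"},
    {"last_name": "Smith", "zip": "90210"},
    {"last_name": "Johnson", "zip": "10001"},
]
print(candidate_pairs(records))  # [(0, 1)]: 1 comparison instead of 6
```

Note the trade-off mentioned above: the two “Johnson” records in different ZIP codes never meet, which is exactly what a too-strict blocking key can cost. Running several complementary keys is a common mitigation.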

Step 3: Field and Record Comparison

Within each block, the software carefully compares candidate record pairs field by field to calculate a similarity score. Two main approaches are used:

- Deterministic Matching: This is a rules-based approach that requires records to match perfectly on a set of predefined unique identifiers. For example, a rule might state: “If Social Security Number and Date of Birth are identical, it is a match.” This method is fast and simple but brittle, as it fails if there are any errors or variations in the key fields.

- Probabilistic Matching: For messier, real-world data, probabilistic matching is far more powerful. Based on the statistical principles of the Fellegi-Sunter model, this approach calculates match weights and similarity scores to determine the likelihood of a match. It uses sophisticated matching algorithms like Jaro-Winkler or Levenshtein distance to compare strings, recognizing that “John Doe, 123 Main St” and “Jon Doe, 123 Main Street” are highly likely to be the same person despite the variations. It assesses the probability of two fields agreeing if the records are a true match (m-probability) versus the probability of them agreeing by chance (u-probability), providing a nuanced score that reflects real-world uncertainty. This advanced approach to record linkage is essential for handling the nuances of most datasets.
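To make the m- and u-probabilities concrete, here is a minimal Fellegi-Sunter scoring sketch. Each field contributes an agreement weight of log2(m/u) when the values agree and a disagreement weight of log2((1 - m)/(1 - u)) when they do not; the pair's score is the sum. The m/u values, the field names, and the 0.7 normalized-Levenshtein cutoff for “agreement” are illustrative assumptions. In practice m and u are estimated from labeled pairs or with the EM algorithm, and a production system would usually grade partial agreement rather than use a single cutoff.

```python
import math

# Hypothetical (m, u) probabilities per field:
# m = P(field agrees | records truly match), u = P(field agrees | non-match).
FIELD_PARAMS = {
    "first_name": (0.95, 0.01),
    "last_name": (0.95, 0.005),
    "street": (0.90, 0.001),
}


def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute
        prev = curr
    return prev[-1]


def similarity(a: str, b: str) -> float:
    """Normalize edit distance to [0, 1], where 1.0 means identical."""
    if not a and not b:
        return 1.0
    return 1 - levenshtein(a, b) / max(len(a), len(b))


def match_weight(rec_a: dict, rec_b: dict, agree_cutoff: float = 0.7) -> float:
    """Sum Fellegi-Sunter agreement/disagreement weights over all fields."""
    total = 0.0
    for field, (m, u) in FIELD_PARAMS.items():
        sim = similarity(rec_a[field].lower(), rec_b[field].lower())
        if sim >= agree_cutoff:
            total += math.log2(m / u)              # agreement weight
        else:
            total += math.log2((1 - m) / (1 - u))  # disagreement weight
    return total


a = {"first_name": "John", "last_name": "Doe", "street": "123 Main St"}
b = {"first_name": "Jon", "last_name": "Doe", "street": "123 Main Street"}
c = {"first_name": "Mary", "last_name": "Major", "street": "9 Elm Road"}
print(round(match_weight(a, b), 2))  # strongly positive: likely the same person
print(round(match_weight(a, c), 2))  # negative: likely different people
```

Rare agreements (a small u, as for street here) earn large positive weights, which is why an address match counts for more than a common first name.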

Step 4: Classification and Evaluation

Once a similarity score is calculated for a pair of records, the software classifies it based on predetermined match thresholds. Typically, there are three outcomes:

- Match: The score is above the upper threshold, indicating a high probability that the records refer to the same entity. These are automatically linked.

- Non-Match: The score is below the lower threshold, indicating the records are clearly different. They are discarded from further consideration.

- Potential Match: The score falls between the two thresholds. These are ambiguous cases that are flagged for clerical review, where a human data steward makes the final decision.

This step is a balancing act between accuracy, precision (minimizing false positives, i.e., incorrectly linking different entities), and recall (minimizing false negatives, i.e., failing to link true matches). For example, if a model identifies 100 pairs as matches, and 95 of them are actually correct, its precision is 95%. If there were 110 true matches in the dataset and the model found those 95, its recall is 86.4% (95/110). Good software allows users to tune these thresholds to meet specific business needs, such as prioritizing high precision for financial data or high recall for public health research.
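The three-way decision and the precision/recall arithmetic above fit in a short sketch. The threshold values are hypothetical (they would be tuned per use case), pairs are represented as tuples of record IDs, and the synthetic sets simply reproduce the 100-predicted / 95-correct / 110-true example.

```python
def classify(score: float, lower: float, upper: float) -> str:
    """Three-way decision using a lower and an upper threshold."""
    if score >= upper:
        return "match"            # link automatically
    if score < lower:
        return "non_match"        # discard
    return "potential_match"      # route to clerical review


def precision_recall(predicted: set, truth: set) -> tuple:
    """Precision = TP / predicted links; recall = TP / true links."""
    true_positives = len(predicted & truth)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(truth) if truth else 0.0
    return precision, recall


# Synthetic data matching the worked example: 110 true pairs, 100 predicted,
# of which 95 are correct and 5 are false positives.
truth = {(i, i + 1000) for i in range(110)}
predicted = {(i, i + 1000) for i in range(95)} | {(i, i + 2000) for i in range(5)}
p, r = precision_recall(predicted, truth)
print(f"precision={p:.1%}, recall={r:.1%}")  # precision=95.0%, recall=86.4%

LOWER, UPPER = 0.0, 10.0  # hypothetical thresholds on a match-weight scale
print([classify(s, LOWER, UPPER) for s in (23.9, 4.2, -11.9)])
# ['match', 'potential_match', 'non_match']
```

Raising `UPPER` trades recall for precision (fewer automatic links, more clerical review); lowering `LOWER` does the opposite for the non-match side.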

Step 5: Entity Resolution and Merging

In the final step, entity resolution takes all the pairs classified as a “match” and merges them into a single, consolidated, unified view. For example, records for “Jane Smith,” “J. Smith,” and “Jane M. Smith” are combined into one golden record. This process creates a single customer view or a definitive source of truth.

To handle conflicting information during the merge (e.g., two different addresses or phone numbers), merge-purge rules are applied. These rules can be configured based on business logic, such as:

- Most Recent: Keep the value with the latest timestamp.

- Most Frequent: Keep the value that appears most often across the source records.

- Source Precedence: Trust data from one source system (e.g., a core CRM) over another (e.g., a temporary web form).

This transforms fragmented information into valuable Real-World Data that can drive insights. The result is harmonized information, a product of good data stewardship and technology, ready to power analytics and confident decisions.
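A minimal sketch of this final step, under stated assumptions: matched pairs are grouped into entities with a union-find (disjoint-set) structure, so links like A-B and B-C land in one cluster, and each golden-record field is chosen by one of the merge-purge rules listed above. The record layout, the source ranking, and which rule applies to which field are all hypothetical.

```python
from collections import Counter
from datetime import date

# Hypothetical trust ranking for source precedence: lower number = more trusted.
SOURCE_PRIORITY = {"crm": 0, "billing": 1, "web_form": 2}


def cluster(matched_pairs):
    """Group record IDs into entities via union-find (transitive closure)."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in matched_pairs:
        parent[find(a)] = find(b)
    groups = {}
    for x in list(parent):
        groups.setdefault(find(x), []).append(x)
    return sorted(sorted(group) for group in groups.values())


def most_recent(records, field):
    return max(records, key=lambda r: r["updated"])[field]


def most_frequent(records, field):
    return Counter(r[field] for r in records).most_common(1)[0][0]


def source_precedence(records, field):
    return min(records, key=lambda r: SOURCE_PRIORITY[r["source"]])[field]


def golden_record(records):
    """Apply one survivorship rule per field (the mapping is illustrative)."""
    return {
        "name": most_frequent(records, "name"),
        "phone": most_recent(records, "phone"),
        "email": source_precedence(records, "email"),
    }


records = {
    1: {"name": "Jane Smith", "phone": "5551234567", "email": "jane@crm.example",
        "source": "crm", "updated": date(2023, 1, 5)},
    2: {"name": "J. Smith", "phone": "5559876543", "email": "js@web.example",
        "source": "web_form", "updated": date(2024, 6, 1)},
    3: {"name": "Jane Smith", "phone": "5551234567", "email": "jane@billing.example",
        "source": "billing", "updated": date(2022, 3, 9)},
}
clusters = cluster([(1, 2), (2, 3)])  # [[1, 2, 3]]
print(golden_record([records[i] for i in clusters[0]]))
# {'name': 'Jane Smith', 'phone': '5559876543', 'email': 'jane@crm.example'}
```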

Key Features to Evaluate in Data Linking Tools

Choosing the right data linking software depends on your specific needs, data volume, and linking challenges. Here are the key features to evaluate to ensure you select a tool that is powerful, scalable, and user-friendly.

Matching Algorithms and Accuracy

The core of any data linking software is its library of matching algorithms, which must be robust enough to handle messy and incomplete real-world data. Look for a comprehensive suite of algorithms, including:

- Fuzzy Matching: For handling typos, transpositions, and abbreviations in text fields (e.g., Jaro-Winkler, Levenshtein).

- Phonetic Algorithms: For matching sound-alike words, especially names (e.g., Soundex, Metaphone, NYSIIS).

- Numeric Matching: For comparing numbers with slight variations, such as phone numbers or the numeric parts of addresses (e.g., house numbers and postal codes).

- N-gram Analysis: For comparing strings based on shared substrings, which is effective for detecting partial matches.

- Geospatial Matching: For linking records based on geographic proximity (e.g., latitude/longitude coordinates).

- Domain-Specific Logic: The ability to create custom rules and logic tailored to your industry, such as understanding healthcare-specific identifiers or financial transaction patterns.

High accuracy is vital, as incorrect links (false positives) can lead to poor decisions and erode trust in the data. Advanced solutions can achieve 96% accuracy or higher, though actual results depend heavily on data quality and configuration. Learn more about these capabilities in our guide to Database Matching Software.
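Several of the algorithm families listed above are small enough to sketch directly. The following minimal Python versions of Jaro-Winkler (fuzzy matching), bigram Jaccard similarity (n-gram analysis), and haversine distance (geospatial matching) are illustrations only. The 0.1 prefix scale, the four-character prefix cap, the space padding for n-grams, and the spherical-Earth radius follow common conventions but are assumptions here; production libraries are typically faster and more thoroughly tested.

```python
import math


def jaro(s1: str, s2: str) -> float:
    """Jaro similarity: matching characters within a window, minus transpositions."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    window = max(max(len1, len2) // 2 - 1, 0)
    used1, used2 = [False] * len1, [False] * len2
    matches = 0
    for i, ch in enumerate(s1):
        for j in range(max(0, i - window), min(i + window + 1, len2)):
            if not used2[j] and s2[j] == ch:
                used1[i] = used2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Count matched characters that appear in a different order.
    out_of_order, k = 0, 0
    for i in range(len1):
        if used1[i]:
            while not used2[k]:
                k += 1
            out_of_order += s1[i] != s2[k]
            k += 1
    t = out_of_order // 2
    return (matches / len1 + matches / len2 + (matches - t) / matches) / 3


def jaro_winkler(s1: str, s2: str, p: float = 0.1, max_prefix: int = 4) -> float:
    """Boost the Jaro score for strings that share a common prefix."""
    sim = jaro(s1, s2)
    prefix = 0
    for a, b in zip(s1[:max_prefix], s2[:max_prefix]):
        if a != b:
            break
        prefix += 1
    return sim + prefix * p * (1 - sim)


def ngram_jaccard(a: str, b: str, n: int = 2) -> float:
    """Jaccard overlap of character n-grams (strings padded with spaces)."""
    def grams(s: str) -> set:
        padded = f" {s.lower()} "
        return {padded[i:i + n] for i in range(len(padded) - n + 1)}

    ga, gb = grams(a), grams(b)
    return len(ga & gb) / len(ga | gb) if ga | gb else 1.0


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km, assuming a spherical Earth (radius 6371 km)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi, dlmb = math.radians(lat2 - lat1), math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(h))


print(round(jaro_winkler("MARTHA", "MARHTA"), 3))  # 0.961
print(ngram_jaccard("Smith", "Smyth"))             # 0.5
print(round(haversine_km(0.0, 0.0, 0.0, 1.0), 1))  # 111.2 (one degree at the equator)
```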

Scalability and Performance

Your data linking software must be able to grow with your datasets. As data volumes explode, performance cannot become a bottleneck. Key considerations include:

- Processing Large Datasets: The software should be architected to efficiently process millions or even billions of records without a significant drop in performance.

- Big Data Integration: It must connect seamlessly to big data backends like Hadoop, Spark, and cloud data warehouses (e.g., Snowflake, BigQuery, Redshift).

- Parallel and Distributed Processing: Look for tools that can distribute the processing load across multiple servers or nodes (horizontal scaling). This is essential for handling massive linking jobs in a timely manner.

- Processing Speed: Performance benchmarks are critical. Some tools can link a million records in under a minute, while others are optimized to scale to 100 million records or more. The ability to handle large jobs quickly reduces manual work and accelerates time-to-insight, which highlights the importance of robust infrastructure such as data warehouses.
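Blocking is also what makes horizontal scaling straightforward: blocks share no state, so each one can be compared as an independent task. The sketch below illustrates that pattern with Python's standard `concurrent.futures`. The exact-email comparison and the record layout are hypothetical placeholders, and a thread pool is used only to keep the example portable; because of CPython's global interpreter lock, real CPU-bound speedups would need a process pool or a distributed engine such as Spark, where each block maps naturally onto a partition.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import combinations


def compare_block(block_records: list) -> list:
    """Compare all pairs inside one block; a placeholder exact-email rule decides."""
    return [
        (a["id"], b["id"])
        for a, b in combinations(block_records, 2)
        if a["email"] == b["email"]
    ]


def link_in_parallel(blocks: dict, max_workers: int = 4) -> list:
    """Fan blocks out to workers and gather the matched pairs."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        per_block = pool.map(compare_block, blocks.values())
        return sorted(pair for pairs in per_block for pair in pairs)


blocks = {
    "J525-90210": [{"id": 1, "email": "a@x.example"}, {"id": 2, "email": "a@x.example"},
                   {"id": 3, "email": "b@x.example"}],
    "S530-90210": [{"id": 4, "email": "c@x.example"}, {"id": 5, "email": "c@x.example"}],
}
print(link_in_parallel(blocks))  # [(1, 2), (4, 5)]
```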

Integration and Usability

Powerful software is useless if it’s too difficult to integrate into your existing data ecosystem or too complex for your team to use. Evaluate:

- Application Programming Interface (API): A well-documented, robust API (typically RESTful) is essential for programmatic access, allowing developers to embed linking capabilities directly into data pipelines and applications.

- Graphical User Interface (GUI): An intuitive, user-friendly GUI empowers non-technical users, such as data stewards and business analysts, to configure matching rules, manage workflows, and conduct clerical reviews without writing code.

- Workflow Automation: The ability to build, schedule, and monitor automated linking workflows saves significant time and reduces the risk of manual errors.

- Data Source Connectors: Pre-built connectors for common databases (SQL, NoSQL), cloud storage (S3, Azure Blob), and enterprise applications (Salesforce, SAP) drastically reduce integration time and effort.

At Lifebit, our onboarding process (see Lifebit Onboarding: Seamless Integration for Success) ensures that our platforms fit into your existing ecosystem without disruption.

Data Governance and Security

When dealing with sensitive data, especially PII or PHI, security and governance features are non-negotiable. A trustworthy platform must include:

- Role-Based Access Control (RBAC): Granular control over who can access, view, or modify data and linking configurations.

- Data Encryption: End-to-end encryption for data both at rest (in the database) and in transit (across the network).

- Audit Trails: Comprehensive logging of all user activities, rule changes, and data modifications to ensure accountability and support compliance audits.

- Compliance Certifications: Adherence to industry standards and regulations like GDPR, HIPAA, and SOC 2.
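As a rough illustration of how role-based access control and audit trails fit together, the sketch below checks each action against a role's permission set and records every attempt, whether allowed or denied. The role names, permission strings, and in-memory list are hypothetical; a real platform would persist audit events to tamper-evident, append-only storage and integrate with an identity provider.

```python
from datetime import datetime, timezone

# Hypothetical role -> permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"view_aggregates"},
    "data_steward": {"view_records", "review_matches"},
    "admin": {"view_records", "review_matches", "edit_rules"},
}

audit_log = []  # stand-in for an append-only audit store


def authorize(user: str, role: str, action: str, resource: str) -> bool:
    """Allow the action only if the role grants it, and log the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed


print(authorize("alice", "data_steward", "edit_rules", "match_rules/v3"))  # False
print(authorize("bob", "admin", "edit_rules", "match_rules/v3"))           # True
print(len(audit_log))                                                      # 2
```

Logging denied attempts as well as successful ones is what lets an auditor reconstruct who tried to change matching rules, not just who succeeded.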

Machine Learning Capabilities

Modern data linking platforms leverage AI and machine learning to improve accuracy and efficiency over time. Key capabilities include:

- Supervised Learning: The model learns from a set of pre-labeled examples of matches and non-matches to build a highly accurate matching model.

- Unsupervised Learning: The model can find patterns and clusters in the data on its own, which is useful for initial exploration when no labeled data is available.

- Active Learning: This is a powerful feature where the model identifies the most ambiguous or uncertain record pairs and presents them to a human expert for review. This feedback is then used to retrain and improve the model, creating a continuous learning loop that gets smarter with each review. This dramatically reduces the manual effort required for clerical review over time.
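Supervised learning and active learning can be illustrated together. The sketch below trains a tiny logistic-regression classifier (plain gradient descent, no libraries) on hypothetical comparison vectors, where each number is a per-field similarity score for a record pair, and then applies uncertainty sampling: the unlabeled pairs whose predicted match probability is closest to 0.5 are the ones sent to a reviewer. Uncertainty sampling is one common active-learning strategy; it is not necessarily the one any particular product uses, and the learning rate and epoch count are arbitrary choices.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    e = math.exp(z)
    return e / (1 + e)


def predict(model, x) -> float:
    """Predicted probability that a pair with comparison vector x is a match."""
    weights, bias = model
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def train_logistic(features, labels, lr=0.5, epochs=2000):
    """Full-batch gradient descent on the average log loss."""
    n = len(features)
    model = ([0.0] * len(features[0]), 0.0)
    for _ in range(epochs):
        weights, bias = model
        grad_w, grad_b = [0.0] * len(weights), 0.0
        for x, y in zip(features, labels):
            err = predict(model, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        model = ([w - lr * g / n for w, g in zip(weights, grad_w)], bias - lr * grad_b / n)
    return model


def most_uncertain(model, unlabeled, k=2):
    """Uncertainty sampling: pick the k pairs with probability closest to 0.5."""
    return sorted(unlabeled, key=lambda x: abs(predict(model, x) - 0.5))[:k]


# Hypothetical labeled pairs: [first_name_sim, last_name_sim, street_sim].
X = [[0.9, 1.0, 0.8], [1.0, 0.9, 0.9], [0.8, 0.9, 1.0],   # matches
     [0.1, 0.0, 0.2], [0.0, 0.1, 0.1], [0.2, 0.1, 0.0]]   # non-matches
y = [1, 1, 1, 0, 0, 0]
model = train_logistic(X, y)

unlabeled = [[0.95, 0.97, 0.9], [0.55, 0.5, 0.45], [0.05, 0.1, 0.0], [0.6, 0.4, 0.5]]
for pair in most_uncertain(model, unlabeled):
    print(pair, round(predict(model, pair), 3))  # candidates for a data steward
```

Each reviewed pair would be appended to `X` and `y` and the model retrained, closing the feedback loop described above.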

A Guide to Data Linking Software Approaches

Choosing data linking software means selecting an approach that aligns with your organization’s resources, technical expertise, and strategic goals. The choice is often framed as “build vs. buy”—do you have an engineering team ready to construct a custom solution from scratch, or do you need a ready-made platform that delivers results quickly? Let’s explore the main approaches to find the right path for you.

Flexible and Customizable Data Linking Solutions (The “Build” Approach)

For organizations with strong in-house technical teams and highly unique data challenges, building a custom solution using open-source libraries can be an attractive option. Popular tools include the Python recordlinkage and Dedupe libraries, Java frameworks like Duke, and even large-scale projects like Splink from the UK’s Ministry of Justice.

Advantages:

- Maximum Flexibility: You have complete control to tailor every aspect of the linking process, from pre-processing steps to the matching algorithms themselves.

- No Licensing Cost: The software itself is free, eliminating upfront licensing fees.

- Deep Customization: The solution can be perfectly integrated with your existing proprietary systems and workflows.

- Strong Community Support: A large developer community often provides support through forums and documentation.

Disadvantages and Hidden Costs:

- High Development and Maintenance Costs: While the software is free, the cost of hiring and retaining skilled data engineers and data scientists to build and maintain the system is substantial. This can easily exceed the cost of an enterprise license.

- Significant Personnel Risk: These systems often become dependent on the one or two experts who built them. With an estimated 10% annual turnover in tech, there’s a significant risk of losing that core expert, leaving you with a complex “black box” system that no one else understands. Maintenance costs can climb to more than $250,000 over time.

- Long Time-to-Value: Building a robust, scalable, and accurate linking solution from scratch is a long-term project, often taking many months or even years to deliver tangible business value.

- Lack of Enterprise Features: Open-source tools typically lack the user-friendly GUIs, advanced security features, audit trails, and dedicated support that businesses require.

Enterprise-Grade Data Linking Software (The “Buy” Approach)

For organizations seeking a faster, more reliable, and scalable path, commercial enterprise-grade data linking software is a compelling alternative. These platforms are purpose-built to solve data linking challenges at scale.

Key Benefits:

- Enterprise Support: You get access to dedicated 24/7 support teams with service-level agreements (SLAs) to resolve issues quickly and minimize downtime.

- Advanced Security and Compliance: These solutions come with built-in data encryption, role-based access control, and audit trails. They are often certified for compliance with regulations like GDPR, HIPAA, and SOC 2, significantly reducing your compliance burden.

- Pre-built Connectors: Save months of integration work with a wide array of out-of-the-box connectors for enterprise systems (SAP, Salesforce), databases (Oracle, SQL Server), and cloud platforms (AWS, Azure, GCP).

- User-Friendly GUI: An intuitive graphical interface empowers business users and data stewards to manage linking processes, review potential matches, and monitor data quality without needing to write a single line of code.

- Faster Time-to-Value: With pre-built components and streamlined deployment, enterprise solutions can be implemented in weeks, not months, allowing you to realize a return on investment much faster.

- Robust Data Governance Features: They include built-in tools to manage data quality, track data lineage, and enforce governance policies, aligning with modern approaches like Federated Data Governance.

While these solutions have licensing costs, they often provide a significantly higher ROI by drastically reducing development time, mitigating personnel risk, and lowering long-term operational overhead.

The Hybrid Approach: Best of Both Worlds?

A third option is emerging that combines the strengths of both approaches. Some modern enterprise platforms offer the reliability, security, and usability of a commercial product but also provide an SDK or API that allows data scientists to extend the platform’s functionality. This allows them to inject custom Python or R models, create bespoke matching logic, or build unique pre-processing steps within the governed, scalable framework of the enterprise solution. This hybrid model offers the best of both worlds: the speed and security of a commercial platform with the flexibility to tackle highly specialized problems.

Real-World Impact: Use Cases Across Industries

Data linking software is a transformational technology that creates tangible value across nearly every industry. By creating a complete, 360-degree view of data, it enables smarter decisions, improves efficiency, and mitigates risk. Let’s explore some real-world examples of its impact.

Healthcare and Life Sciences

In healthcare, where data is notoriously siloed across hospitals, clinics, labs, and payers, data linking software is critical for improving patient outcomes and accelerating research.

- Longitudinal Patient Records: It links patient records from disparate systems to create a complete, chronological patient health journey. This is a core function of a Clinical Data Integration Platform and gives clinicians a holistic view for better diagnoses, safer prescriptions, and more effective care.

- Pharmacovigilance and Drug Safety: It improves drug safety by identifying and deduplicating adverse event reports from multiple sources. By linking this data to patient outcomes, regulators and pharmaceutical companies can detect safety signals faster.

- Clinical Trial Recruitment: Finding eligible patients for clinical trials is a major bottleneck. By linking EMR data, genomic data, and trial eligibility criteria, researchers can identify and contact suitable patient cohorts in days instead of months, dramatically accelerating medical innovation.

- Multi-Omics Data Integration: It enables the combination of complex biological data (genomics, proteomics) with clinical records. This linkage is fundamental to advancing precision medicine and developing personalized treatments.

At Lifebit, we understand that linking sensitive health data requires more than just technology; it demands a robust privacy-preserving framework. We explore the profound potential and challenges of this vital work in our article, Health Data Linkage: Promise and Challenges.

Government and Public Sector

Governments use data linking software to improve the efficiency of public services, inform evidence-based policy, and increase transparency and accountability.

- Social Program Administration: It helps identify eligible families for benefits by linking data from tax, housing, and social welfare agencies. It can also create a single view of debt for local councils, allowing them to consolidate a citizen’s various debts (e.g., council tax, parking fines) and offer a more supportive, consolidated repayment plan.

- Law Enforcement and National Security: Connecting records across different agencies helps detect complex fraud, tax evasion, and criminal networks by uncovering hidden relationships and patterns in the data.

- National Statistics: It is essential for combining census records with other administrative data (e.g., health, education) to produce more accurate and timely national statistics for policymaking.

- Education: Linking student data from K-12 through higher education and into the workforce allows policymakers to track long-term outcomes, evaluate the effectiveness of educational programs, and identify areas for improvement.

For instance, a defense health agency used data linking software to deduplicate and link hospital records across 200 million data points, demonstrating its impact at very large scale.

Finance and Business

In the competitive business world, data linking is a cornerstone of customer-centricity, risk management, and operational excellence.

- Master Data Management (MDM): It is the core technology behind MDM initiatives, creating a single, authoritative source of truth for critical business entities like customers, products, suppliers, and assets.

- 360-Degree Customer View: It cleans and merges customer data from all touchpoints—CRM, e-commerce platforms, marketing automation tools, and customer support logs—to enable hyper-personalized marketing, proactive customer service, and increased customer lifetime value.

- Risk Assessment and Compliance: In finance, it is used to identify ultimate beneficial ownership across complex corporate structures and to link financial data for policy research. For Anti-Money Laundering (AML) and Know Your Customer (KYC) compliance, it connects transaction data, customer details, and global watchlists to flag suspicious activity and prevent financial crime.
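A heavily simplified illustration of the watchlist-screening idea: normalize a customer name and flag any watchlist entry whose similarity clears a threshold. The watchlist entries, the 0.85 threshold, and the use of Python's standard-library `difflib.SequenceMatcher` ratio (standing in for the specialized matching a real AML system would use, with aliases, transliteration, and dates of birth) are all assumptions.

```python
from difflib import SequenceMatcher

WATCHLIST = ["Ivan Petrov", "Acme Shell Holdings Ltd"]  # hypothetical entries


def normalize(name: str) -> str:
    """Lowercase, drop periods and commas, and collapse whitespace."""
    return " ".join(name.lower().replace(".", "").replace(",", "").split())


def screen(name: str, watchlist=WATCHLIST, threshold: float = 0.85) -> list:
    """Return (entry, score) pairs at or above the threshold, best first."""
    hits = []
    for entry in watchlist:
        score = SequenceMatcher(None, normalize(name), normalize(entry)).ratio()
        if score >= threshold:
            hits.append((entry, round(score, 3)))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


print(screen("Ivan Petrof"))  # [('Ivan Petrov', 0.909)]
print(screen("Maria Lopez"))  # []
```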

Retail and E-commerce

- Supply Chain Optimization: By linking data from disparate systems—including suppliers, logistics partners, and internal inventory—retailers can gain end-to-end visibility into their supply chain, allowing them to identify bottlenecks, predict disruptions, and improve resilience.

- Improved Personalization: To compete with digital giants, retailers link online browsing history, in-store purchase data, loyalty program activity, and social media sentiment to create a unified customer profile. This enables them to deliver highly relevant product recommendations and personalized offers that drive sales and loyalty.

Conclusion: Building a Connected and Secure Data Future

Data linking software is the unsung hero of the data-driven world, transforming messy, fragmented datasets into clear, golden records. It turns disconnected information into a powerful asset that drives real decisions and meaningful outcomes. The benefits are game-changing: improved data integrity, actionable insights, greater operational efficiency, and the confidence to make data-driven decisions. For any organization, from government agencies to businesses and healthcare innovators, this software is essential.

But here’s where things get really exciting. As we look toward tomorrow, the conversation isn’t just about linking data anymore. It’s about doing it securely, privately, and intelligently. The future belongs to privacy-preserving techniques that protect sensitive information while still unlocking powerful insights.

For complex biomedical research, where every data point could hold the key to saving lives, federated platforms offer something remarkable. They let you link and analyze sensitive data across different locations without actually moving it anywhere. It’s like having a conversation with someone in another room without leaving your chair – you get all the benefits of collaboration while maintaining complete control over your most precious assets.

This is exactly why we at Lifebit have poured our hearts into building something special. Our platform delivers real-time evidence and analytics with AI-driven safety surveillance, all wrapped up in a Secure Data Environment (SDE) that never compromises on security. Our approach to Federated Data Analysis shows how you can achieve groundbreaking research while maintaining the strictest data privacy and control.

The future of data isn’t just connected – it’s intelligently connected, securely connected, and designed to empower rather than overwhelm. We’re building bridges between data islands while keeping each island safe and sovereign.

Ready to see what this connected, secure future looks like for your organization? We’d love to show you how a next-generation federated AI platform can enable secure, real-time access to global biomedical and multi-omic data, empowering your journey toward data-driven discoveries. Explore Lifebit’s federated data platform and let’s build your connected and secure data future together.