Cloudy with a Chance of Data: Mastering Multi-Cloud Integration

Why Multi-Cloud Data Integration Matters in 2025

Multi-cloud data integration is the practice of connecting, transforming, and managing data across multiple public cloud providers, such as AWS, Azure, and Google Cloud, without centralizing everything in one vendor’s ecosystem. Here’s what you need to know:

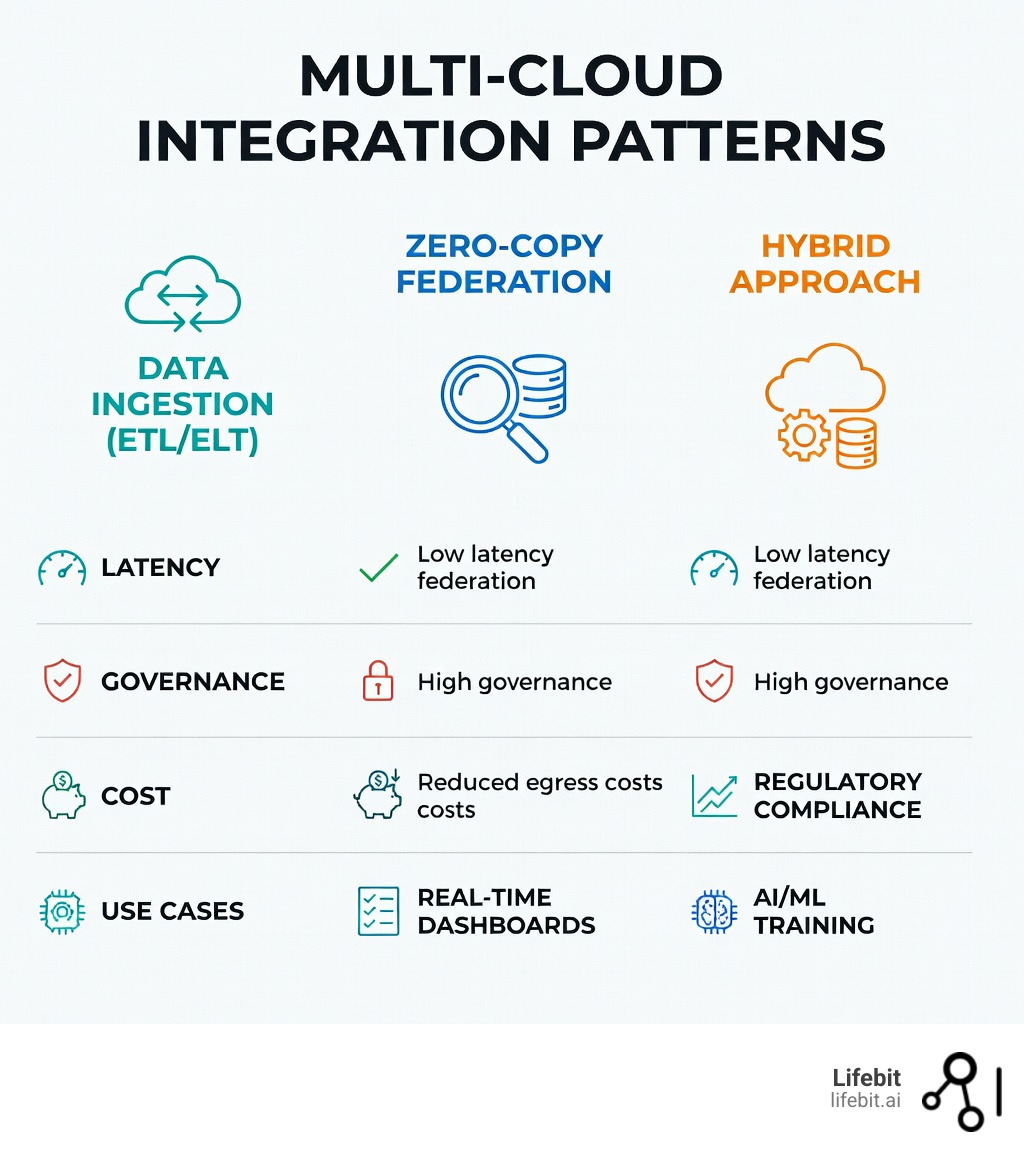

Key Multi-Cloud Data Integration Strategies:

- Data Ingestion (ETL/ELT) – Move data into a central lakehouse for governance and compliance

- Zero-Copy Federation – Query data in place without moving it, reducing egress costs

- Hybrid Approach – Combine ingestion for governed core data with federation for real-time freshness

- API-Driven Integration – Use standardized connectors to link SaaS, on-premises, and cloud systems

- Event-Driven Architecture – Stream data via CDC (Change Data Capture) for real-time insights

Why Organizations Adopt Multi-Cloud:

- 81% work with two or more cloud providers to avoid vendor lock-in

- 82% report better disaster recovery by distributing workloads across clouds

- 31% use four or more providers to optimize for best-of-breed services

The challenge? More than 70% of enterprises cite managing multi-cloud environments as a top struggle. Data silos, security gaps, and architectural complexity can quickly turn flexibility into chaos—especially in regulated industries like healthcare and pharma where compliance, data sovereignty, and real-time analytics are non-negotiable.

I’m Dr. Maria Chatzou Dunford, CEO and Co-founder of Lifebit, a federated genomics and biomedical data platform that enables secure multi-cloud data integration across public sector institutions and pharmaceutical organizations. Over the past 15 years, I’ve built tools that power precision medicine and multi-omic analytics in high-compliance, distributed environments, from Nextflow workflows to federated AI platforms that analyze data in situ without moving it.


Why Multi-Cloud Data Integration is the New Enterprise Standard

The days of being a “one-cloud shop” are rapidly fading into the rearview mirror. As we look toward 2025, the adoption of multi-cloud strategies has become more than a trend—it’s a survival mechanism. According to Gartner, 81% of organizations are already working with two or more providers. This shift is driven by the realization that no single cloud provider can be everything to everyone.

We are seeing a massive shift: more than 85% of organizations will embrace a cloud-first principle by 2025, and over 50% of those will rely specifically on multi-cloud strategies to drive innovation. This isn’t just about having options; it’s about building a resilient, high-performance architecture that can withstand vendor outages and regulatory shifts. Furthermore, the rise of the “Sovereign Cloud” (where data must reside within specific legal jurisdictions) has made multi-cloud an operational necessity for global enterprises.

Primary Business Drivers for Multi-Cloud Data Integration

Why are we all juggling so many clouds? It comes down to a few critical drivers that go beyond simple redundancy:

- Best-of-Breed Services: One provider might have the best AI/ML tools (like Google Cloud’s Vertex AI), while another offers superior enterprise integration (like Microsoft Azure’s Active Directory ecosystem) or massive-scale compute (like AWS). Multi-cloud lets you pick the “best tool for the job” without compromising on performance.

- Risk Mitigation & Disaster Recovery: Relying on one vendor is a single point of failure. 82% of companies use multi-cloud to ensure that if one provider goes down, their business doesn’t. This is particularly critical in the financial sector, where the Digital Operational Resilience Act (DORA) in the EU now mandates that firms manage third-party ICT risks, often necessitating a multi-vendor strategy.

- Cost Optimization & Arbitrage: Dynamic pricing models allow teams to shift workloads to where they are cheapest. 451 Research notes that 31% of organizations use four or more providers to maintain this financial flexibility. By leveraging spot instances or reserved capacity across different clouds, FinOps teams can significantly reduce the total cost of ownership (TCO).

- Data Sovereignty and Residency: Many countries now require health or financial data to stay within their borders. Multi-cloud lets us keep records in an Azure region inside the EU while running analytics in AWS North America on aggregated or de-identified outputs, so the raw data never leaves its jurisdiction. For more on this, check out our insights on cloud data management. This supports compliance with local privacy laws like the CCPA in California or the LGPD in Brazil without sacrificing global operational efficiency.

- Avoiding Vendor Lock-in: The fear of being “held hostage” by a single provider’s pricing or roadmap is a major motivator. A multi-cloud data integration strategy ensures that data remains portable, allowing organizations to pivot their infrastructure as the market evolves.
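To make the arbitrage point concrete, here is a minimal Python sketch that compares the cost of running one job in each cloud once egress is included. Every number is a hypothetical placeholder rather than a real price, and `placement_costs` and `cheapest` are illustrative helpers, not part of any provider’s SDK.

```python
from typing import Dict


def placement_costs(compute_costs: Dict[str, float], data_home: str,
                    dataset_gb: float, egress_usd_per_gb: float) -> Dict[str, float]:
    """Total cost of running one job in each cloud.

    Running anywhere other than `data_home` adds a one-off egress charge
    for copying the dataset out of the cloud where it currently lives.
    """
    costs = {}
    for cloud, compute in compute_costs.items():
        egress = 0.0 if cloud == data_home else dataset_gb * egress_usd_per_gb
        costs[cloud] = compute + egress
    return costs


def cheapest(costs: Dict[str, float]) -> str:
    """Name of the cloud with the lowest total cost."""
    return min(costs, key=costs.get)


if __name__ == "__main__":
    # Hypothetical compute quotes (USD) and egress rate, for illustration only.
    compute = {"aws": 120.0, "azure": 95.0, "gcp": 100.0}
    for size_gb in (2_000, 100):
        costs = placement_costs(compute, data_home="aws",
                                dataset_gb=size_gb, egress_usd_per_gb=0.09)
        print(size_gb, "GB ->", cheapest(costs), costs)
```

With a 2 TB dataset living in AWS, Azure’s cheaper compute is wiped out by the egress bill; shrink the dataset to 100 GB and Azure wins. The same arithmetic explains why arbitrage tends to pay off for compute-heavy, data-light workloads.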

Multi-Cloud vs. Hybrid Cloud: Key Differences

It’s common to hear these terms used interchangeably, but they represent very different philosophies. Multi-cloud is about using multiple public cloud vendors (AWS + Azure + GCP). Hybrid cloud is about bridging the gap between your private on-premises infrastructure and the public cloud. Understanding this distinction is vital for resource allocation and security planning.

| Feature | Multi-Cloud | Hybrid Cloud |
|---|---|---|
| Vendors | Multiple Public (AWS, Azure, GCP) | Single Public + On-Premises |
| Primary Goal | Best-of-breed services & no lock-in | Maintaining legacy systems & data control |
| Complexity | High (Different APIs and security models) | Moderate (Standardized bridge to one vendor) |
| Governance | Distributed across multiple providers | Centralized between cloud and local |
| Connectivity | Public Internet or Cloud Interconnects | Dedicated VPN or Direct Connect |
| Data Gravity | High (Data spread across regions) | Moderate (Data often anchored on-prem) |

Overcoming the 3 Biggest Multi-Cloud Integration Challenges

While the benefits are clear, the “organizational mess” that results from multi-cloud can be daunting. More than 70% of enterprises identify managing a multi-cloud environment as a top challenge, according to Flexera’s State of the Cloud Report. The complexity isn’t just technical; it’s operational and financial.

- Architectural Complexity and the Talent Gap: Every cloud has its own language, its own CLI, and its own security paradigms. Integrating them requires a diverse skill set that many small IT teams simply don’t have. Finding engineers who are experts in AWS, Azure, and GCP simultaneously is like finding a unicorn. This often leads to “shadow IT,” where different departments use different clouds without centralized oversight.

- Data Egress Costs and the “Hotel California” Effect: Moving data out of a cloud (egress) is expensive. Cloud providers make it free to bring data in but charge a premium to take it out. If you’re constantly shuffling petabytes between AWS and Azure for cross-cloud analytics, your “cost optimization” strategy will quickly backfire. This creates a “Hotel California” effect: you can check in your data, but you can never (affordably) leave.

- Data Gravity and Latency: Data is heavy. Once it settles in one cloud, the applications tend to follow it because of the latency involved in accessing data over a network. If your database is in AWS East and your AI model is in GCP West, the round-trip time for every query can cripple real-time performance. Managing this “gravity” requires sophisticated caching and edge computing strategies.
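A quick back-of-the-envelope calculation shows how egress and data gravity add up. This Python sketch assumes a flat per-GB egress rate (real price sheets are tiered and vary by region and destination) and counts only network round-trip time, ignoring query execution. All inputs are hypothetical.

```python
def egress_cost_usd(terabytes: float, usd_per_gb: float) -> float:
    """Flat-rate egress estimate, using decimal units (1 TB = 1,000 GB)."""
    return terabytes * 1_000 * usd_per_gb


def sequential_latency_s(round_trips: int, rtt_ms: float) -> float:
    """Time spent waiting on the network when cross-cloud calls run one after another."""
    return round_trips * rtt_ms / 1_000


if __name__ == "__main__":
    # Hypothetical inputs: 50 TB synced per month at an assumed $0.08/GB, and a
    # request that makes 500 sequential cross-cloud calls at an assumed 70 ms RTT.
    print(f"monthly egress: ${egress_cost_usd(50, 0.08):,.0f}")
    print(f"network wait per request: {sequential_latency_s(500, 70):.1f} s")
```

With these assumed inputs, 50 TB a month costs about $4,000 in egress alone, and 500 sequential 70 ms round trips add 35 seconds of pure waiting. Batching calls or moving compute next to the data attacks the second number; federation attacks the first.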

Solving Security and Governance in Multi-Cloud Data Integration

Security is the number one concern for us at Lifebit. In a multi-cloud world, your attack surface is much wider. Each cloud provider has a different Shared Responsibility Model, and a misconfiguration in one can expose your entire ecosystem. To stay safe, we recommend a “Zero Trust” architecture. This means never trusting a connection just because it’s “internal”—every request must be verified, authenticated, and authorized based on identity and context.
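The Zero Trust idea can be sketched in a few lines of Python: every request carries a signed token, and the decision depends on who is asking and from what context, never on whether the call came from “inside” the network. This is a simplified illustration under stated assumptions. The HMAC shared secret, the claim names (`sub`, `scopes`, `regions`, `exp`), and the device/region policy are all hypothetical; production systems would validate standards-based tokens issued by the identity provider using its published keys.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical shared secret for this demo only; real IdPs sign tokens with asymmetric keys.
SECRET = b"demo-only-secret"


def sign(claims: dict) -> str:
    """Issue a toy token: JSON claims followed by an HMAC-SHA256 signature."""
    body = json.dumps(claims, sort_keys=True)
    mac = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{mac}"


def verify(token: str) -> Optional[dict]:
    """Return the claims if the signature is valid and the token has not expired."""
    body, _, mac = token.rpartition(".")
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(mac.encode(), expected.encode()):
        return None
    claims = json.loads(body)
    if claims.get("exp", 0) < time.time():
        return None
    return claims


def authorize(token: str, resource: str, context: dict) -> bool:
    """Decide on every request; context['internal_network'] is deliberately ignored."""
    claims = verify(token)
    if claims is None or resource not in claims.get("scopes", []):
        return False
    # Hypothetical context policy: a managed device, in a region the user is cleared for.
    return context.get("device_managed") is True and context.get("region") in claims.get("regions", [])


if __name__ == "__main__":
    token = sign({"sub": "alice", "scopes": ["genomics:read"],
                  "regions": ["eu-west"], "exp": time.time() + 300})
    print(authorize(token, "genomics:read", {"device_managed": True, "region": "eu-west"}))
    # Being on the internal network does not compensate for an unmanaged device.
    print(authorize(token, "genomics:read",
                    {"device_managed": False, "internal_network": True, "region": "eu-west"}))
```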

Centralized Identity and Access Management (IAM) is vital. You don’t want to manage users in three different places. Using a unified identity provider (IdP) like Okta or Azure AD (now Microsoft Entra ID) across all clouds ensures that when an employee leaves, their access is revoked everywhere from a single place. Furthermore, staying compliant with GDPR or HIPAA requires rigorous healthcare data integration standards that ensure data residency and encryption are maintained across all regions. This includes managing encryption keys so that no single cloud provider has full control over your data.
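One simple way to keep any single provider from holding a complete key is to split the key into shares stored with different providers. The Python sketch below uses a 2-of-2 XOR split, the simplest form of secret sharing. It is an illustration, not a key-management design. In practice the shares would live in each provider’s key-management or HSM service, and losing either share makes the key (and any data encrypted under it) unrecoverable.

```python
import secrets
from typing import Tuple


def split_key(key: bytes) -> Tuple[bytes, bytes]:
    """Split a key into two shares; either share on its own is uniformly random."""
    share_a = secrets.token_bytes(len(key))
    share_b = bytes(k ^ a for k, a in zip(key, share_a))
    return share_a, share_b


def combine(share_a: bytes, share_b: bytes) -> bytes:
    """Recover the key; this requires both shares."""
    if len(share_a) != len(share_b):
        raise ValueError("shares must be the same length")
    return bytes(a ^ b for a, b in zip(share_a, share_b))


if __name__ == "__main__":
    data_key = secrets.token_bytes(32)  # 256-bit data-encryption key
    # Hypothetically, one share goes to a key service in cloud A, the other to cloud B.
    share_cloud_a, share_cloud_b = split_key(data_key)
    print(combine(share_cloud_a, share_cloud_b) == data_key)
```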

Managing Data Quality and Schema Drift

When data lives in different places, it tends to change. One system might update its format (schema drift), causing downstream analytics to break. For example, a Salesforce CRM update might change a date format that your AWS-based data warehouse expects. We use active metadata management to track these changes in real time. Following the practices in our biopharma data integration guide, we ensure that data remains semantically consistent, meaning a “patient ID” in one cloud means exactly the same thing in another. This requires a robust Data Catalog that acts as a single source of truth for data definitions across the entire multi-cloud landscape.
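A minimal version of this drift check compares each incoming record with an expected contract. In this Python sketch the field names and the `date:` rule syntax are hypothetical; a real deployment would usually pull the contract from the data catalog rather than hard-coding it.

```python
from datetime import datetime
from typing import Dict, List, Union

# Hypothetical contract for patient-visit records arriving from an upstream system.
EXPECTED_SCHEMA: Dict[str, Union[type, str]] = {
    "patient_id": str,
    "visit_date": "date:%Y-%m-%d",
    "age": int,
}


def detect_drift(record: dict, schema: Dict[str, Union[type, str]]) -> List[str]:
    """Return human-readable drift findings; an empty list means the record conforms."""
    problems: List[str] = []
    for field, rule in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
            continue
        value = record[field]
        if isinstance(rule, str) and rule.startswith("date:"):
            fmt = rule[len("date:"):]
            try:
                datetime.strptime(value, fmt)
            except (TypeError, ValueError):
                problems.append(f"{field}: {value!r} does not match {fmt}")
        elif isinstance(rule, type) and not isinstance(value, rule):
            problems.append(f"{field}: expected {rule.__name__}, got {type(value).__name__}")
    # Fields the contract does not know about are also drift (e.g. a renamed column).
    for field in sorted(record.keys() - schema.keys()):
        problems.append(f"unexpected field: {field}")
    return problems


if __name__ == "__main__":
    ok = {"patient_id": "P001", "visit_date": "2025-03-01", "age": 54}
    drifted = {"patient_id": "P001", "visit_date": "03/01/2025", "age_years": 54}
    print(detect_drift(ok, EXPECTED_SCHEMA))
    print(detect_drift(drifted, EXPECTED_SCHEMA))
```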

Core Patterns: ETL, Federation, and Zero-Copy Approaches

How do we actually move the data? There are three main patterns we see in the wild, and according to Forrester, these are the backbone of 2024-2025 cloud strategies. Each has its own trade-offs regarding cost, speed, and complexity.

- ETL/ELT (Extract, Transform, Load / Extract, Load, Transform): The classic approach. You move data into a central lakehouse (like Databricks or Snowflake). It’s great for deep historical analysis and complex transformations but can be slow and expensive due to egress fees and storage duplication. Modern ELT loads raw data first and transforms it inside the target warehouse using tools like dbt (data build tool).

- Real-time CDC (Change Data Capture): This streams changes as they happen by reading the source database’s transaction log. If a record is updated in your on-prem Oracle database, a tool like Debezium or Fivetran captures that change and streams it to your cloud analytics within seconds. This is essential for use cases like real-time inventory management or fraud detection, where every second counts.

- Data Federation (Zero-Copy): This is the “look but don’t touch” approach. You query the data where it lives using a virtualization layer. No movement, no egress fees, and no data duplication. It fits naturally with the “Data Mesh” philosophy, where data is treated as a product and managed by the teams that know it best, rather than being dumped into a central swamp.
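The CDC pattern boils down to replaying an ordered stream of change events onto a replica. The Python sketch below uses an event shape loosely modeled on Debezium’s change-event envelope (an `op` code of `c`, `u`, `d` or `r`, plus `before` and `after` row images). Real events carry more metadata, and a production consumer also needs ordering and delivery guarantees. The dictionary stands in for a table in a cloud warehouse.

```python
from typing import Any, Dict, Iterable


def apply_changes(replica: Dict[Any, dict], events: Iterable[dict], key: str = "id") -> Dict[Any, dict]:
    """Replay change events, in log order, onto a replica keyed by primary key."""
    for event in events:
        op = event["op"]
        if op in ("c", "r", "u"):
            # create, snapshot read, update: upsert the full new row image
            row = event["after"]
            replica[row[key]] = row
        elif op == "d":
            # delete: use the old row image to find the key
            replica.pop(event["before"][key], None)
        else:
            raise ValueError(f"unknown op code: {op!r}")
    return replica


if __name__ == "__main__":
    # Hypothetical inventory changes captured from an on-prem database.
    events = [
        {"op": "c", "before": None, "after": {"id": 1, "sku": "A", "stock": 10}},
        {"op": "u", "before": {"id": 1, "sku": "A", "stock": 10},
         "after": {"id": 1, "sku": "A", "stock": 7}},
        {"op": "c", "before": None, "after": {"id": 2, "sku": "B", "stock": 3}},
        {"op": "d", "before": {"id": 2, "sku": "B", "stock": 3}, "after": None},
    ]
    print(apply_changes({}, events))
```

Because each event carries the full new row image, replaying the same event twice leaves the replica unchanged, which makes retries after a network blip safe; reordering events, however, is not safe.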

When to Use Zero-Copy Federation vs. Data Ingestion

Choosing between moving data (ingestion) and querying it in place (federation) is a massive architectural decision. We’ve put together a complete guide to data integration platforms to help, but here is the rule of thumb:

- Use Data Ingestion when: You need a “single source of truth” for high-performance reporting, require heavy transformations that would be too slow to run on the fly, or need to comply with strict regulatory auditing where you must maintain a physical copy of the data for a specific period. It is also better for machine learning training, where the model needs to iterate over the same dataset many times, epoch after epoch.

- Use Zero-Copy Federation when: You have petabyte-scale data that is too expensive to move, want to avoid egress costs, or need real-time freshness for a live dashboard. Techniques like “predicate pushdown” allow the query to run at the source (e.g., the database in Azure), only sending the tiny result set back across the network to the requester in AWS. This is particularly powerful for cross-border research where data cannot legally leave a specific region.
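Predicate pushdown is easiest to see side by side with the naive alternative. In this Python sketch two in-memory SQLite databases stand in for stores in Azure and AWS (the table, columns, and variant names are hypothetical). The federated version sends the filter and the `COUNT(*)` to each source, while the naive version copies every row across the network first.

```python
import sqlite3
from typing import Dict, Iterable, Tuple


def make_source(rows: Iterable[Tuple[str, str, str]]) -> sqlite3.Connection:
    """In-memory SQLite database standing in for one cloud's data store."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE samples (sample_id TEXT, country TEXT, variant TEXT)")
    conn.executemany("INSERT INTO samples VALUES (?, ?, ?)", rows)
    return conn


def federated_count(sources: Dict[str, sqlite3.Connection], variant: str) -> Tuple[int, int]:
    """Push filter + aggregate to each source. Returns (count, rows shipped over the network)."""
    total, shipped = 0, 0
    for conn in sources.values():
        (count,) = conn.execute(
            "SELECT COUNT(*) FROM samples WHERE variant = ?", (variant,)
        ).fetchone()
        total += count
        shipped += 1  # a single aggregate row comes back from each source
    return total, shipped


def naive_count(sources: Dict[str, sqlite3.Connection], variant: str) -> Tuple[int, int]:
    """Copy every row to the requester, then filter locally."""
    rows = [row for conn in sources.values() for row in conn.execute("SELECT * FROM samples")]
    return sum(1 for row in rows if row[2] == variant), len(rows)


if __name__ == "__main__":
    sources = {
        "azure": make_source([("s1", "UK", "BRCA1"), ("s2", "UK", "TP53"), ("s3", "DE", "BRCA1")]),
        "aws": make_source([("s4", "US", "BRCA1"), ("s5", "US", "KRAS")]),
    }
    print("federated (count, rows shipped):", federated_count(sources, "BRCA1"))
    print("naive     (count, rows shipped):", naive_count(sources, "BRCA1"))
```

Both return the same count, but the federated version ships one row per source instead of every row. At petabyte scale, that is the difference between a rounding error and a large egress bill.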

The Role of AI and Automation in Multi-Cloud Data Integration

AI isn’t just a user of data; it’s now the mechanic fixing the pipes. Gartner research highlights that AI-orchestrated pipelines are becoming standard. These tools use machine learning to:

- Auto-Map Schemas: Automatically identifying that “CustNum” in one system is the same as “ClientID” in another, reducing manual mapping time by up to 80%.

- Anomaly Detection: Monitoring data flows to detect sudden spikes in volume or changes in data distribution that might indicate a system failure or a security breach.

- Self-Healing Pipelines: If a connection to an Azure API drops, the AI can automatically retry, switch to a backup route, or alert the team with a pre-filled root-cause analysis.

- Cost Optimization: AI can predict when egress costs will be lowest or suggest moving a workload to a different cloud provider based on current spot instance pricing. This automation is what allows lean data teams to manage massive multi-cloud ecosystems that would have previously required dozens of engineers.
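The retry-and-failover part of a self-healing pipeline does not itself require machine learning; an AI layer would typically decide when and where to apply it. This Python sketch shows only that mechanical core: exponential backoff with jitter on each route, failover to the next route, and an error report listing every attempt. The route functions are hypothetical placeholders for, say, a primary and a backup endpoint.

```python
import random
import time
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def call_with_healing(
    routes: Sequence[Callable[[], T]],
    attempts_per_route: int = 3,
    base_delay_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try each route with exponential backoff and jitter, failing over to the next route."""
    errors: List[str] = []
    for route in routes:
        name = getattr(route, "__name__", "route")
        for attempt in range(attempts_per_route):
            try:
                return route()
            except ConnectionError as exc:  # only transient network failures are retried
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt + 1 < attempts_per_route:
                    # Delay doubles each attempt; random jitter avoids synchronized retries.
                    sleep(base_delay_s * (2 ** attempt) * (1 + random.random()))
    # Every route is exhausted: raise with a pre-filled summary for the on-call team.
    raise RuntimeError("all routes failed:\n" + "\n".join(errors))


if __name__ == "__main__":
    def primary_azure_api():  # hypothetical endpoint that is currently down
        raise ConnectionError("connection reset")

    def backup_route():  # hypothetical secondary path
        return "rows fetched via backup"

    print(call_with_healing([primary_azure_api, backup_route], base_delay_s=0.01))
```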

Real-World Use Cases and 2025 Future Outlook

The future of multi-cloud data integration is already here in several sectors. CloudZero reports that 82% of resilient companies are using these architectures today to solve complex, real-world problems:

- Personalized Medicine & Genomics: This is where Lifebit excels. We combine genomic data stored in a secure government environment (such as the one operated by Genomics England) with clinical trial data from a pharmaceutical company’s private Azure instance. By using federated analytics, researchers can find the right drug for the right patient without the sensitive genomic data ever leaving its secure environment.

- Global Fraud Detection: Large banks use real-time CDC to stream transaction data from on-prem mainframes to cloud-based AI engines in GCP. By integrating data across multiple regions and clouds, they can stop fraudulent transactions in under 30 seconds, even if a transaction spans multiple currencies and jurisdictions.

- Supply Chain Resilience: Manufacturers are integrating IoT sensor data from factory floors (processed at the edge) with shipping data from AWS and market demand forecasts from Azure. This multi-cloud view allows them to predict disruptions—like a port strike or a weather event—and automatically reroute shipments before the delay impacts the customer.

- Retail Omnichannel Experience: Retailers integrate in-store point-of-sale data with online browsing behavior and social media sentiment. Often, these data sources live in different clouds due to acquisitions or legacy partnerships. A unified multi-cloud integration layer allows for a 360-degree view of the customer in real-time.

Best Practices for Scalable Multi-Cloud Implementation

If you want to succeed, you can’t wing it. We recommend these “gold standards” for any enterprise embarking on a multi-cloud journey:

- Infrastructure as Code (IaC): Use tools like Terraform or Pulumi to deploy your integration layers. If it’s not in code, it’s not reproducible. IaC ensures that your security groups, VPCs, and data pipelines are identical across AWS, Azure, and GCP.

- Containerization and Orchestration: Use Docker and Kubernetes. If your integration logic is containerized, it can run on AWS EKS just as easily as on Azure AKS. This “write once, run anywhere” approach is the secret to true cloud portability.

- FinOps and Cost Governance: Monitor your egress costs daily. Use tagging to track which projects are driving cloud spend. Don’t let a “rogue query” across clouds burn your entire monthly budget. Implement automated “kill switches” for queries that exceed a certain cost threshold.

- Metadata-Rich Architecture: Treat your metadata (the data about your data) as a first-class citizen. This is essential for clinical data integration software to ensure lineage and trust. You need to know not just what the data is, but where it came from, who has accessed it, and how it has been transformed.

- Standardize on Open Formats: Avoid proprietary data formats that lock you into a specific vendor’s tools. Use open file formats like Apache Parquet or Avro, and open table formats like Apache Iceberg. These formats are supported by almost every major cloud analytics tool, ensuring your data remains accessible regardless of which cloud you use for compute.
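An automated kill switch can be as simple as estimating a query’s cost before it runs and refusing it above a threshold. In the Python sketch below the scan and egress estimates are assumed inputs (some engines can produce such estimates from a dry run or query plan), and the prices and budget are hypothetical.

```python
class QueryBudgetExceeded(Exception):
    """Raised when a query's estimated cost is above the allowed budget."""


def guard_query(
    estimated_bytes_scanned: float,
    usd_per_tb_scanned: float,
    estimated_egress_gb: float,
    usd_per_gb_egress: float,
    max_usd: float,
) -> float:
    """Return the estimated cost, or raise before the query runs if it is over budget."""
    scan_cost = estimated_bytes_scanned / 1e12 * usd_per_tb_scanned  # decimal TB
    egress_cost = estimated_egress_gb * usd_per_gb_egress
    total = scan_cost + egress_cost
    if total > max_usd:
        raise QueryBudgetExceeded(f"estimated ${total:,.2f} exceeds the ${max_usd:,.2f} limit")
    return total


if __name__ == "__main__":
    # Hypothetical prices and estimates for illustration only.
    print(guard_query(2e12, 5.0, 100, 0.09, max_usd=50.0))
    try:
        guard_query(500e12, 5.0, 50_000, 0.09, max_usd=50.0)
    except QueryBudgetExceeded as err:
        print("blocked:", err)
```

Under these assumed prices, a 2 TB scan returning 100 GB of results comes to about $19 and runs; a 500 TB cross-cloud scan is stopped before it spends anything.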

Frequently Asked Questions about Multi-Cloud Data Integration

How does multi-cloud integration impact data egress costs?

Egress costs occur when you move data out of a cloud provider. Frequent synchronization between clouds can lead to “bill shock.” To minimize these fees, use Zero-Copy federation, keep your compute resources in the same region as your data, or use dedicated private interconnects (like AWS Direct Connect or Azure ExpressRoute) which often have lower egress rates than the public internet.

What is the difference between data federation and data virtualization?

They are cousins, but with different scopes. Data federation specifically refers to mapping multiple autonomous sources into a single virtual database. Data virtualization is a broader term that includes the abstraction, integration, and delivery of that data to users. Virtualization often includes additional features like data masking, caching, and performance optimization layers that go beyond simple federated querying.

How can businesses ensure compliance across different cloud regions?

By using a federated governance model. Instead of moving data to a central (and potentially non-compliant) location, use a platform that allows you to “bring the code to the data.” This ensures the data never leaves its regulated region (maintaining data sovereignty) while still providing the aggregated insights you need for global decision-making.
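“Bring the code to the data” can be illustrated with a pooled mean. In this Python sketch each site runs `local_summary` inside its own region and releases only a sum and a count; the coordinator combines them without ever seeing a row-level value. The minimum cohort size of 10 is a hypothetical disclosure-control threshold, and real federated platforms layer further protections (such as output checking) on top.

```python
from typing import Iterable, Tuple

MIN_COHORT = 10  # hypothetical disclosure-control threshold


def local_summary(values: Iterable[float]) -> Tuple[float, int]:
    """Runs inside one site's secure environment; only (sum, count) leaves the region."""
    vals = list(values)
    return sum(vals), len(vals)


def pooled_mean(summaries: Iterable[Tuple[float, int]], min_count: int = MIN_COHORT) -> float:
    """Combine per-site summaries into a global mean without seeing any row-level data."""
    total, n = 0.0, 0
    for site_sum, site_count in summaries:
        if site_count < min_count:
            raise ValueError("site cohort too small to release")
        total += site_sum
        n += site_count
    if n == 0:
        raise ValueError("no data released")
    return total / n


if __name__ == "__main__":
    # Hypothetical biomarker values held at two sites in different jurisdictions.
    uk_site = local_summary([2.0] * 10)
    eu_site = local_summary([4.0] * 10)
    print(pooled_mean([uk_site, eu_site]))
```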

What is a Data Mesh, and how does it relate to multi-cloud?

Data Mesh is an architectural paradigm that decentralizes data ownership. In a multi-cloud context, this means each business unit owns its data on whichever cloud best suits their needs, but they provide standardized “data products” to the rest of the organization. Multi-cloud integration tools act as the “connective tissue” that allows these distributed products to be discovered and used securely.

Can I use multi-cloud integration for legacy on-premises systems?

Yes, this is often referred to as a hybrid multi-cloud strategy. By using modern integration platforms, you can treat your on-premises data centers as just another “zone” in your multi-cloud ecosystem. This allows you to modernize your analytics in the cloud while keeping sensitive or legacy data on-site.

Conclusion

Mastering multi-cloud data integration is no longer a “nice-to-have”: it is the foundation of the modern, data-driven enterprise. Whether you are avoiding vendor lock-in or seeking the best AI tools on the market, the ability to weave data together across multiple clouds is what will define the winners of 2025.

At Lifebit, we’ve built our federated AI platform to solve these exact challenges for the world’s most sensitive biomedical data. Our Trusted Research Environment (TRE) and Trusted Data Lakehouse (TDL) allow you to run advanced multi-omic analytics across a distributed, multi-cloud ecosystem without ever compromising on security or sovereignty.

Ready to turn your multi-cloud chaos into a competitive advantage?

Master your multi-cloud strategy with Lifebit