Record Linkage 101 – How to Make Your Data Play Nice Together

What Is Data Linkage and Why Should You Care?

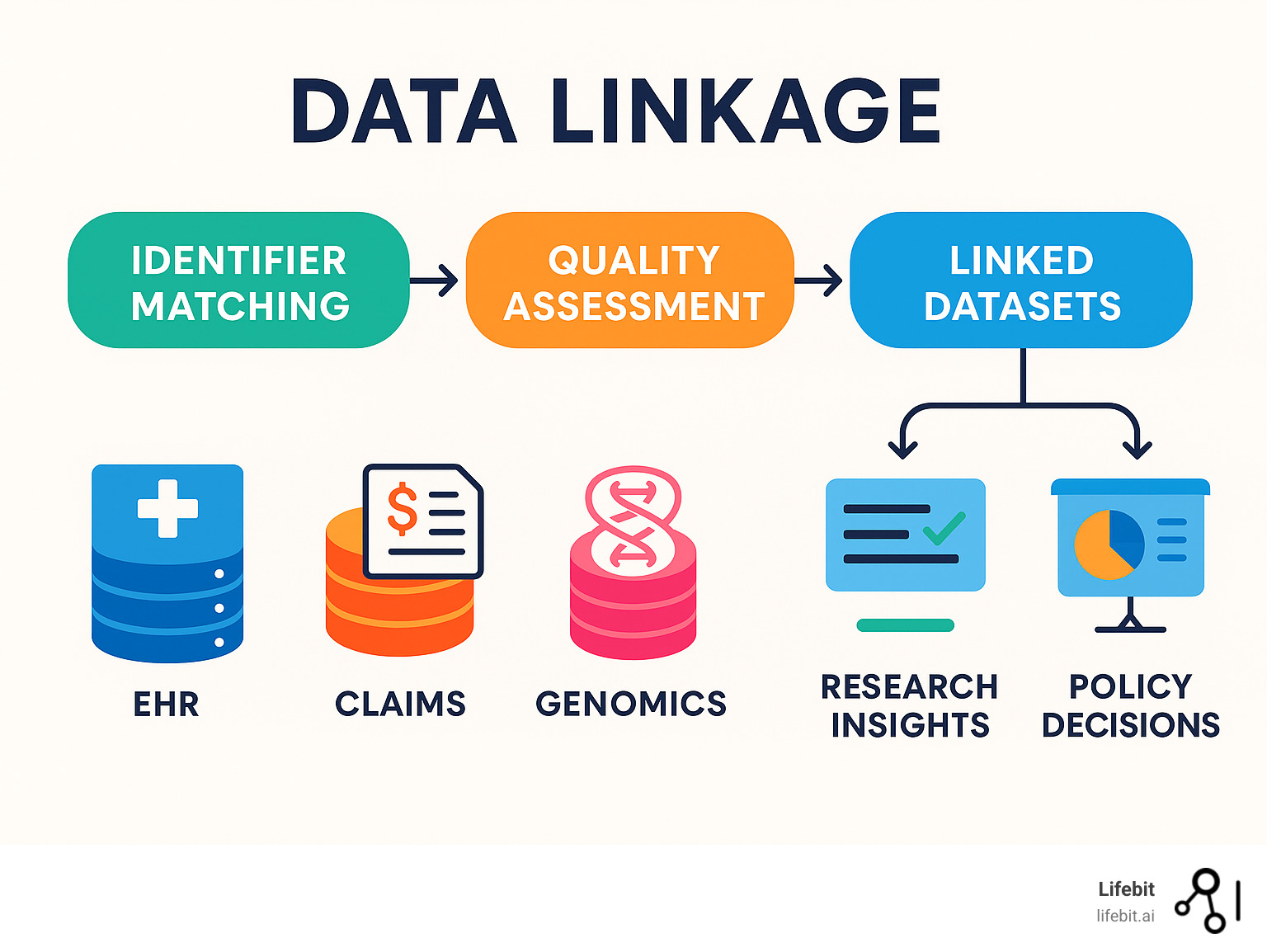

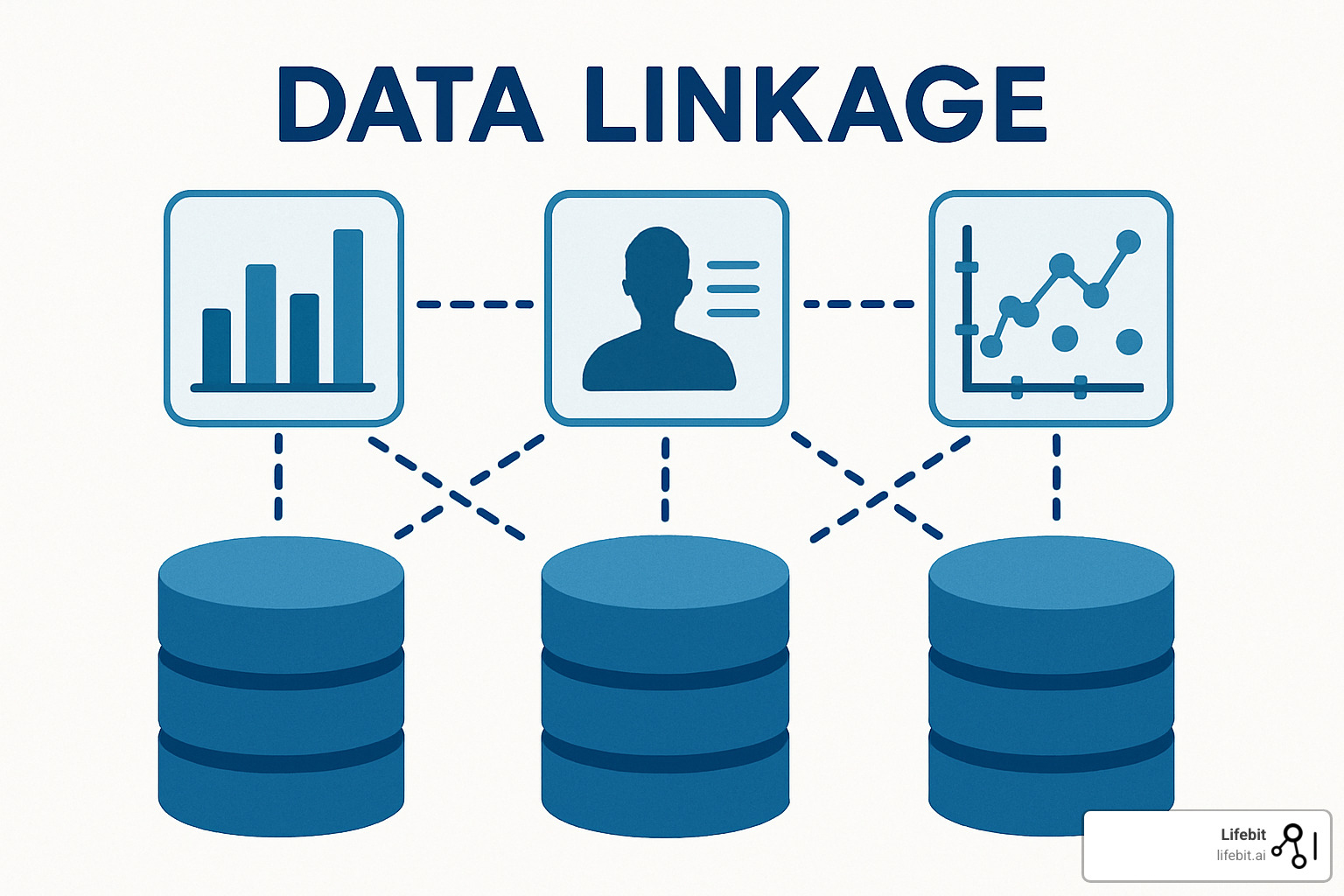

Data linkage is the process of bringing together information from different sources about the same person or entity to create a richer, more complete dataset. Instead of looking at fragmented pieces of health information scattered across hospitals, insurance claims, and research databases, data linkage lets you see the full picture.

Key aspects of data linkage:

- Purpose: Combine records from multiple databases without collecting new data

- Method: Match records using identifiers like names, dates of birth, or unique ID numbers

- Output: Improved datasets that reveal insights impossible to find in isolated sources

- Applications: Population health research, clinical trials, policy evaluation, and real-world evidence generation

The power of linked data becomes clear when you consider that health databases are typically built around specific services or conditions. For organizations managing massive, siloed datasets across EHRs, claims systems, and genomics platforms, data linkage transforms disconnected information into actionable intelligence.

The scale is impressive too. England’s linked electronic health records cover more than 54 million people, creating one of the world’s largest research resources. Population Data BC provides access to longitudinal data on 5.4 million residents, while the WA Data Linkage System has connected over 150 million records from more than 50 datasets.

As Dr. Maria Chatzou Dunford, CEO and Co-founder of Lifebit with over 15 years of expertise in computational biology and biomedical data integration, I’ve seen how data linkage opens up the potential of federated healthcare analytics. My work building cutting-edge tools for precision medicine has shown me that the real breakthroughs happen when we connect previously isolated data sources.

Data Linkage 101: Why It Matters

Organizations that share data externally generate three times more measurable economic benefit than those keeping everything locked in silos. But the real magic of data linkage goes far beyond the bottom line.

Your health story isn’t contained in just one place. It’s scattered across your GP’s records, hospital visits, prescription databases, and maybe even your fitness tracker. Data linkage brings these pieces together to reveal the complete picture of what keeps you healthy (or what doesn’t).

When we connect datasets across health, education, housing, and social services, we start seeing how a child’s school environment affects their long-term health outcomes, or how neighborhood air quality influences hospital admissions decades later. This cross-sector approach gives policymakers the evidence they need to make decisions that actually improve lives.

The longitudinal nature of linked data is particularly powerful. Even when your address changes or you switch insurance providers, data linkage can follow your health journey across decades. Population-level linked data also solves a practical problem: it’s incredibly cost-efficient. Instead of recruiting thousands of people for expensive cohort studies, researchers can use existing administrative records to answer the same questions.

Real-World Pay-offs

The COVID-19 pandemic gave us a masterclass in data linkage under pressure. Researchers in England linked primary care records with hospital admissions and death certificates to create a cohort of 17 million adults – almost overnight. This massive linked dataset revealed critical ethnic differences in COVID-19 outcomes that completely reshaped public health responses.

Beyond pandemic response, data linkage has transformed cancer research and treatment monitoring. The SEER-Medicare linked database in the United States connects cancer registry data with Medicare claims for over 5 million cancer patients, enabling researchers to track treatment patterns, costs, and outcomes across decades. This linkage revealed that certain chemotherapy regimens, while appearing effective in clinical trials, showed different real-world effectiveness patterns when examined across diverse populations.

Screening programmes rely on data linkage to work effectively. Cancer screening coordinators use sophisticated linkage methods that intentionally capture 90% of true matches, even if it means accepting some false positives. The NHS Cervical Screening Programme links screening records with cancer registries and death certificates to monitor programme effectiveness, identifying that organized screening reduces cervical cancer incidence by up to 80%.

Clinical trials are getting smarter about follow-up too. Instead of calling participants every few months, researchers increasingly link trial data with administrative records. The SWEDEHEART registry in Sweden demonstrates this approach, linking clinical trial participants with national health registers to provide complete long-term follow-up data on cardiovascular outcomes, reducing study costs by 60% while improving data completeness.

Pharmacovigilance represents another critical application. Drug safety monitoring systems like the FDA Sentinel Initiative link claims data, electronic health records, and adverse event reports across 100 million patients to detect safety signals that might take years to emerge in traditional reporting systems. This linked approach identified cardiovascular risks associated with certain diabetes medications months earlier than conventional methods.

Perhaps most powerfully, data linkage makes social determinants of health visible. When researchers connect health records with education, housing, and income data, they can pinpoint exactly where interventions will make the biggest difference. Studies linking housing quality data with emergency department visits have shown that children living in homes with lead hazards have 2.5 times higher rates of developmental delays, leading to targeted remediation programs.

Economic Impact and Healthcare Efficiency

The economic implications of data linkage extend far beyond research efficiency. Healthcare systems using linked data for population health management report 15-25% reductions in preventable hospitalizations. By identifying high-risk patients across multiple care settings, providers can intervene earlier and more effectively.

Fraud detection through data linkage saves healthcare systems billions annually. Medicare’s Fraud Prevention System uses sophisticated linkage algorithms to identify suspicious billing patterns across providers, saving an estimated $4 billion in fraudulent payments in 2022 alone.

Health technology assessment relies heavily on linked data to evaluate real-world effectiveness of new treatments and devices. The Swedish National Quality Registers link clinical data with health economic outcomes, providing evidence that influences reimbursement decisions across Europe. This approach has accelerated the adoption of cost-effective treatments while preventing the widespread use of interventions that show limited real-world benefit.

Key Concepts You’ll See Again

Records are individual rows of data, each describing one person or entity in a single database; the same person usually has records in several databases. Identifiers are the fields we use for matching – things like your name, date of birth, address, or unique ID numbers. Master keys are unique identifiers that group all records belonging to the same person.

Linkage error is inevitable. We make mistakes in two ways – false matches (incorrectly linking records from different people) and missed matches (failing to link records that actually belong to the same person). Every data linkage project involves balancing this trade-off.

Blocking is a preprocessing step that reduces computational burden by only comparing records that share certain characteristics, like the same birth year or first letter of surname. Clerical review involves human experts manually examining uncertain matches to establish ground truth for algorithm training and validation.

Data Linkage Methods Explained

Choosing the right data linkage method feels a bit like picking the right tool for a job. The three main approaches each have their sweet spot, and understanding when to use which one can make or break your linkage project.

Deterministic Data Linkage: The Exact-Match Route

Deterministic linkage demands exact agreement on specified identifiers before declaring a match. No maybes, no close enough – either the records match perfectly or they don’t.

England’s Hospital Episode Statistics showcases this approach with its three-step algorithm, which looks for exact agreement on NHS number, date of birth, postcode, and sex. When you have a unique identifier like the NHS number that follows every citizen throughout their healthcare journey, deterministic linkage becomes incredibly powerful.

The scalability of deterministic linkage is impressive. Because it uses simple comparison rules, it can process millions of records quickly without requiring massive computational resources. Privacy protection gets a boost through encryption and hashing techniques, enabling secure data linkage without exposing sensitive personal information.

Hierarchical deterministic matching represents a sophisticated evolution of basic exact-match approaches. The Canadian Institute for Health Information (CIHI) employs a seven-step deterministic algorithm that starts with the most reliable identifiers and progressively relaxes matching criteria. Step one requires exact matches on health card number, birth date, and sex. If that fails, step two allows for minor variations in health card numbers while maintaining exact matches on other fields. This cascading approach captures 95% of true matches while maintaining false match rates below 0.1%.
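The cascading idea can be sketched in a few lines. This is a minimal illustration, not CIHI’s algorithm: the three passes, the field names (`health_id`, `dob`, `sex`, `surname`, `postcode`), and the rule of accepting only unambiguous matches are all assumptions made for the example.

```python
from typing import Optional

# Hypothetical match passes, ordered from strictest to most relaxed.
PASSES = [
    ("health_id", "dob", "sex"),
    ("health_id", "dob"),
    ("surname", "dob", "sex", "postcode"),
]

def key(record: dict, fields: tuple) -> Optional[tuple]:
    """Build a comparison key, or None if any required field is missing."""
    values = tuple(record.get(f) for f in fields)
    return None if any(v in (None, "") for v in values) else values

def cascade_link(left: list, right: list) -> dict:
    """Return {left_index: (right_index, pass_number)} from the first pass that matches."""
    links, used_right = {}, set()
    for pass_no, fields in enumerate(PASSES, start=1):
        # Index the still-unlinked right-hand records by this pass's key.
        index = {}
        for j, rec in enumerate(right):
            k = key(rec, fields)
            if k is not None and j not in used_right:
                index.setdefault(k, []).append(j)
        for i, rec in enumerate(left):
            if i in links:
                continue
            k = key(rec, fields)
            candidates = [j for j in index.get(k, []) if j not in used_right]
            if len(candidates) == 1:  # accept only unambiguous matches
                links[i] = (candidates[0], pass_no)
                used_right.add(candidates[0])
    return links

left = [
    {"health_id": "A1", "dob": "1985-01-15", "sex": "M", "surname": "SMITH", "postcode": "V6T1Z4"},
    {"health_id": "", "dob": "1990-07-02", "sex": "F", "surname": "JONES", "postcode": "V5K0A1"},
]
right = [
    {"health_id": "A1", "dob": "1985-01-15", "sex": "M", "surname": "SMYTH", "postcode": "V6T1Z4"},
    {"health_id": "B2", "dob": "1990-07-02", "sex": "F", "surname": "JONES", "postcode": "V5K0A1"},
]
links = cascade_link(left, right)
```

Recording the pass number alongside each link is a deliberate choice: it lets you report how many links came from the strict passes versus the relaxed ones, and review the relaxed ones more closely.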

Preprocessing standardization becomes critical for deterministic success. The Australian Institute of Health and Welfare developed comprehensive data cleaning protocols that standardize name formats, address components, and date representations before attempting matches. Their approach includes phonetic standardization for names, postal code validation and correction, and systematic handling of missing data fields.

But deterministic linkage has its limits. Data entry errors, name changes after marriage, and address moves can cause missed matches. Research from the Nordic countries shows that deterministic linkage using personal identification numbers achieves near-perfect accuracy (>99.5%) but this performance degrades rapidly when unique identifiers are unavailable or unreliable.

Probabilistic Data Linkage: When Perfect IDs Don’t Exist

Real-world data is messy, and probabilistic linkage accepts that messiness. Instead of demanding perfection, it weighs the evidence across multiple fields to make intelligent matching decisions. This approach, built on the Fellegi-Sunter model, has been the workhorse of data linkage for decades.

Each potential record pair gets scored based on how well different identifiers align. An exact name match might add 8 points, while a disagreement on gender subtracts 3 points. The total match weight, summed across all fields, determines whether you’ve found a genuine match.
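In the Fellegi-Sunter model those "points" are log-likelihood ratios built from two probabilities per field: m (the field agrees given a true match) and u (the field agrees given a non-match). The sketch below uses made-up m and u values purely for illustration; real values are estimated from data.

```python
import math

# Illustrative m- and u-probabilities (assumed values, not estimates from real data):
#   m = P(field agrees | records are a true match)
#   u = P(field agrees | records are not a match)
PARAMS = {
    "surname": {"m": 0.95, "u": 0.01},
    "dob": {"m": 0.98, "u": 0.005},
    "sex": {"m": 0.99, "u": 0.5},
}

def field_weight(field: str, agrees: bool) -> float:
    """Agreement weight log2(m/u), or disagreement weight log2((1-m)/(1-u))."""
    m, u = PARAMS[field]["m"], PARAMS[field]["u"]
    if agrees:
        return math.log2(m / u)
    return math.log2((1 - m) / (1 - u))

def match_weight(agreement: dict) -> float:
    """Sum the per-field weights, assuming fields are conditionally independent."""
    return sum(field_weight(f, a) for f, a in agreement.items())

# Surname and date of birth agree, sex disagrees.
score = match_weight({"surname": True, "dob": True, "sex": False})
```

Note how a field that agrees by chance half the time (sex) earns little credit for agreeing but a sizeable penalty for disagreeing, while a rare agreement (date of birth) earns a lot.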

Expectation-Maximization (EM) algorithms automatically learn optimal matching parameters from your data. Instead of manually setting weights for each field, the EM algorithm iteratively estimates the probability that field agreements indicate true matches versus random coincidences. The Western Australian Data Linkage System uses this approach across 150+ datasets, achieving linkage quality that rivals manual review while processing millions of records.
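To make the EM idea concrete, here is a minimal sketch that estimates m, u, and the overall match proportion from binary agreement vectors. It assumes conditional independence between fields and uses simulated data with known parameters; the function name, starting values, and simulation settings are illustrative choices, not the method used by any particular linkage unit.

```python
import random

def clamp(x: float) -> float:
    """Keep probabilities away from exactly 0 or 1 to avoid division by zero."""
    return min(max(x, 1e-6), 1 - 1e-6)

def em_fellegi_sunter(patterns, iterations=50):
    """Estimate match proportion p and per-field m/u from 0/1 agreement vectors."""
    n_fields = len(patterns[0])
    p, m, u = 0.1, [0.9] * n_fields, [0.1] * n_fields  # starting guesses
    for _ in range(iterations):
        # E-step: posterior probability that each pair is a true match.
        post = []
        for gamma in patterns:
            like_m, like_u = p, 1 - p
            for k, agree in enumerate(gamma):
                like_m *= m[k] if agree else 1 - m[k]
                like_u *= u[k] if agree else 1 - u[k]
            post.append(like_m / (like_m + like_u))
        # M-step: re-estimate parameters from the posterior-weighted pairs.
        total = sum(post)
        p = clamp(total / len(patterns))
        for k in range(n_fields):
            agree_m = sum(w * g[k] for w, g in zip(post, patterns))
            agree_u = sum((1 - w) * g[k] for w, g in zip(post, patterns))
            m[k] = clamp(agree_m / total)
            u[k] = clamp(agree_u / (len(patterns) - total))
    return p, m, u

# Simulate candidate pairs with known (assumed) parameters, then try to recover them.
rng = random.Random(42)
TRUE_M, TRUE_U = [0.95, 0.90, 0.85], [0.05, 0.10, 0.20]
pairs = []
for _ in range(5000):
    is_match = rng.random() < 0.1
    probs = TRUE_M if is_match else TRUE_U
    pairs.append(tuple(int(rng.random() < q) for q in probs))

p_hat, m_hat, u_hat = em_fellegi_sunter(pairs)
```

In practice EM is sensitive to starting values and to blocking, so the estimates are worth checking against a clerically reviewed sample before they drive real linkage decisions.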

String comparison functions form the backbone of probabilistic matching. Jaro-Winkler similarity excels at detecting transposition errors and minor spelling variations in names. Levenshtein distance counts the minimum character edits needed to transform one string into another. Soundex and Metaphone algorithms capture phonetic similarities, crucial for names that sound identical but have different spellings.
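Two of these comparators are short enough to write out. The sketch below gives a standard dynamic-programming Levenshtein distance and a version of American Soundex; production linkage tools usually rely on tested library implementations, so treat this as an illustration of what the functions compute.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]

# Soundex digit for each coded consonant; vowels, H, W and Y carry no code.
CODES = {}
for letters, digit in [("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                       ("L", "4"), ("MN", "5"), ("R", "6")]:
    for ch in letters:
        CODES[ch] = digit

def soundex(name: str) -> str:
    """American Soundex: first letter plus three digits, zero-padded."""
    letters = [c for c in name.upper() if c.isalpha()]
    if not letters:
        return ""
    result = letters[0]
    prev = CODES.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "HW":
            continue  # H and W do not separate letters that share a code
        code = CODES.get(ch, "")
        if code and code != prev:
            result += code
        prev = code  # a vowel resets prev, so a repeated code after a vowel is kept
    return (result + "000")[:4]

distance = levenshtein("SMITH", "SMYTH")
same_sound = soundex("Smith") == soundex("Smyth")
```

Edit distance suits typos and transcription slips, while phonetic codes suit spelling variants of the same spoken name; many pipelines use both as separate comparison fields.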

Threshold choice is the fundamental trade-off in probabilistic linkage. In reported comparisons, a conservative threshold can keep false matches below 1% while missing around 40% of true matches. Lower the bar and you may capture 90% of true matches while accepting a false match rate near 30%.

Blocking strategies dramatically improve computational efficiency. Instead of comparing every record with every other record (an O(n²) problem), blocking groups records that share certain characteristics. Sorted Neighbourhood approaches sort records by key fields and only compare records within sliding windows. Canopy clustering creates overlapping blocks based on cheap approximate matching, then applies expensive exact matching within blocks.
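A minimal sketch of standard key-based blocking shows the saving. The blocking key used here (birth year plus first letter of surname) and the record layout are assumptions for the example; sorted neighbourhood and canopy clustering follow the same principle with different grouping rules.

```python
from collections import defaultdict
from itertools import combinations

def blocking_key(record: dict) -> tuple:
    """Assumed key: birth year plus first letter of surname."""
    return (record["dob"][:4], record["surname"][:1])

def candidate_pairs(records: list) -> list:
    """Return index pairs that share a blocking key; all other pairs are never compared."""
    blocks = defaultdict(list)
    for idx, rec in enumerate(records):
        blocks[blocking_key(rec)].append(idx)
    pairs = []
    for members in blocks.values():
        pairs.extend(combinations(members, 2))
    return pairs

records = [
    {"surname": "SMITH", "dob": "1985-01-15"},
    {"surname": "SMYTH", "dob": "1985-01-15"},
    {"surname": "JONES", "dob": "1985-03-09"},
    {"surname": "SMITH", "dob": "1990-07-02"},
]
pairs = candidate_pairs(records)
all_pairs = len(records) * (len(records) - 1) // 2  # comparisons without blocking
```

The cost of blocking is that a true match whose blocking fields disagree (a mistyped birth year, say) is never compared, which is why linkage runs often repeat the process with several different blocking keys.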

Good linkage algorithms typically achieve sensitivity and PPV (positive predictive value) exceeding 95%, but reaching these benchmarks requires careful threshold tuning. The latest research on nationwide EHR linkage demonstrates how these methods perform at scale.

Emerging ML-Driven Data Linkage

Machine learning is bringing a fresh perspective to data linkage. Instead of relying on predefined rules or simple statistical models, gradient-boosting and neural networks learn optimal matching patterns directly from your data.

Deep learning architectures specifically designed for record linkage are showing remarkable results. Siamese neural networks learn to map record pairs into a similarity space where matching records cluster together. Recurrent neural networks excel at processing variable-length text fields like addresses and names, capturing sequential patterns that traditional methods miss.

Active learning approaches minimize the manual review burden by intelligently selecting which record pairs humans should examine. Instead of randomly sampling uncertain matches, active learning algorithms identify the most informative examples – those that will most improve the model’s performance. This approach can reduce manual review requirements by 70% while maintaining linkage quality.
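One common active learning strategy is uncertainty sampling: send reviewers the pairs whose predicted match probability is closest to 0.5. The sketch below assumes a model has already produced a probability per pair; the pair IDs and scores are invented.

```python
def most_uncertain(scored_pairs, batch_size):
    """Pick the pairs whose match probability is closest to 0.5.

    scored_pairs: list of (pair_id, match_probability) tuples.
    """
    return sorted(scored_pairs, key=lambda item: abs(item[1] - 0.5))[:batch_size]

scored = [("p1", 0.98), ("p2", 0.51), ("p3", 0.03), ("p4", 0.42), ("p5", 0.70)]
to_review = [pair_id for pair_id, _ in most_uncertain(scored, batch_size=2)]
```

After each review batch, the newly labelled pairs are added to the training set, the model is refitted, and the selection is repeated.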

Transfer learning enables models trained on one linkage task to adapt quickly to new datasets or domains. A model trained on linking hospital records can be fine-tuned for linking insurance claims with minimal additional training data. This capability is particularly valuable for organizations working with multiple data sources or expanding linkage operations.

These ML approaches excel at capturing complex, non-linear relationships that traditional methods miss. Privacy-preserving techniques like synthetic training data and federated learning enable model development without exposing sensitive records.

The beauty of ML-driven approaches lies in their adaptability. As your data characteristics change, the models can continuously learn and improve. Hybrid approaches that combine deterministic rules, probabilistic methods, and machine learning represent the cutting edge of data linkage methodology.

Preparing, Cleaning & Standardising Your Data

The quality of your data linkage results depends heavily on data preparation. Even the most sophisticated algorithms struggle with inconsistent formatting, typos, and parsing errors.

Data Cleaning Checklist

String standardization forms the foundation of effective linkage. Force all text fields into consistent case (usually uppercase), remove extra spaces, and strip punctuation that doesn’t aid matching.

Date formatting requires special attention. Convert all dates to a standard format (like YYYY-MM-DD) and handle partial dates consistently.

Address parsing breaks complex address strings into components: house number, street name, unit number, city, postal code. This granular approach allows partial matches when someone moves within the same building.

Name parsing separates full names into first, middle, and last name components. This enables matching even when name order varies or middle names are sometimes included and sometimes omitted.

Phonetic coding using algorithms like Soundex or Metaphone captures names that sound similar but are spelled differently.

String distance measures quantify how similar two text strings are. Edit distance counts the minimum character changes needed to transform one string into another.

Here’s what raw versus cleaned data might look like:

| Field | Raw Data | Cleaned Data |
|---|---|---|
| Name | “smith, john a.” | “SMITH” / “JOHN” / “A” |
| DOB | “1/15/85” | “1985-01-15” |
| Address | “123 main st apt 2b” | “123” / “MAIN” / “ST” / “2B” |
| Phone | “(555) 123-4567” | “5551234567” |
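The transformations in the table can be sketched with the standard library. The helper names, the month-first date assumption, and the deliberately naive address pattern are illustrative; real address parsing needs a far richer rule set or a dedicated parser.

```python
import re
from datetime import datetime

def clean_name(raw: str) -> dict:
    """Split 'surname, given middle' into upper-case parts without punctuation."""
    surname, _, rest = raw.partition(",")
    given = re.sub(r"[^A-Za-z\s]", "", rest).upper().split()
    return {
        "surname": re.sub(r"[^A-Za-z]", "", surname).upper(),
        "first": given[0] if given else "",
        "middle": given[1] if len(given) > 1 else "",
    }

def clean_dob(raw: str) -> str:
    """Parse M/D/YY (assumed month-first) and emit YYYY-MM-DD."""
    parsed = datetime.strptime(raw.strip(), "%m/%d/%y")
    if parsed.year > datetime.now().year:  # a birth date cannot be in the future
        parsed = parsed.replace(year=parsed.year - 100)
    return parsed.strftime("%Y-%m-%d")

def clean_phone(raw: str) -> str:
    """Keep digits only."""
    return re.sub(r"\D", "", raw)

# Naive pattern: number, street name, a few street types, optional unit.
ADDRESS_RE = re.compile(
    r"^(\d+)\s+(.+?)\s+(st|ave|rd|dr|blvd)\b\.?(?:\s+(?:apt|unit)\s*(\w+))?$",
    re.IGNORECASE,
)

def clean_address(raw: str):
    """Return upper-case address components, or None if the pattern does not fit."""
    match = ADDRESS_RE.match(raw.strip())
    if match is None:
        return None
    return tuple(part.upper() for part in match.groups() if part)

name = clean_name("smith, john a.")
dob = clean_dob("1/15/85")
phone = clean_phone("(555) 123-4567")
address = clean_address("123 main st apt 2b")
```

Returning `None` for addresses that do not fit the pattern, rather than guessing, keeps unparsed values visible so they can be counted and reviewed.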

Tools & Open-Source Helpers

Several tools can accelerate your data linkage projects:

Link Plus from the CDC provides a user-friendly interface for probabilistic linkage. FEBRL offers Python-based tools for data cleaning and matching. ChoiceMaker provides commercial-grade matching with machine learning capabilities.

For organizations managing clinical data integration across multiple sources, specialized platforms can provide end-to-end solutions. Clinical Data Integration Software offers comprehensive approaches to these challenges.

Quality Assessment, Error Management & Reporting

Even the best data linkage algorithms make mistakes. The key isn’t achieving perfect linkage (that’s impossible), but understanding your errors well enough to account for them properly.

Measuring Linkage Quality

The gold standard for evaluating data linkage quality involves having human experts manually review a sample of potential matches. This clerical review creates your “ground truth” and provides training data to improve future linkage runs.

Stratified sampling for clerical review ensures you examine matches across the full spectrum of match scores. Don’t just review the uncertain middle ground – include some high-confidence matches (to catch systematic errors) and some clear non-matches (to validate your rejection criteria). The Population Data BC approach involves reviewing 2,000-5,000 record pairs per linkage project, stratified across score ranges to ensure representative coverage.
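A stratified draw can be sketched as follows. The score bands, the sample size per band, and the assumption that scores are scaled to the range 0 to 1 are all illustrative choices.

```python
import random

def stratified_review_sample(scored_pairs, band_edges, per_band, seed=0):
    """Sample up to per_band pair IDs from each score band.

    scored_pairs: list of (pair_id, score), scores assumed to lie in [0, 1].
    band_edges: ascending cut points, e.g. [0.0, 0.2, 0.8, 1.0] gives three bands.
    """
    rng = random.Random(seed)  # fixed seed so the review sample is reproducible
    n_bands = len(band_edges) - 1
    bands = {i: [] for i in range(n_bands)}
    for pair_id, score in scored_pairs:
        for i in range(n_bands):
            # The last band also includes its upper edge.
            upper_ok = score < band_edges[i + 1] or i == n_bands - 1
            if band_edges[i] <= score and upper_ok:
                bands[i].append(pair_id)
                break
    return {i: rng.sample(ids, min(per_band, len(ids))) for i, ids in bands.items()}

scored = [(f"pair{i}", i / 299) for i in range(300)]
sample = stratified_review_sample(scored, [0.0, 0.2, 0.8, 1.0], per_band=5)
```

Because the bands are sampled at different rates, error rates estimated from the review need to be weighted back by band size before they are reported for the whole linkage.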

Inter-rater reliability testing ensures consistent clerical review standards. Multiple reviewers independently examine the same record pairs, and agreement rates above 95% indicate reliable review processes. When disagreements occur, structured discussion and consensus-building improve future consistency.
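Raw agreement is easy to compute, and it is often reported together with Cohen’s kappa, which discounts the agreement expected by chance. Kappa is an addition here rather than something the review process above prescribes, and the reviewer decisions below are invented.

```python
def agreement_stats(rater_1: list, rater_2: list) -> tuple:
    """Return (observed agreement, Cohen's kappa) for two reviewers' labels."""
    n = len(rater_1)
    observed = sum(a == b for a, b in zip(rater_1, rater_2)) / n
    labels = set(rater_1) | set(rater_2)
    # Chance agreement: product of each rater's marginal rate, summed over labels.
    expected = sum((rater_1.count(l) / n) * (rater_2.count(l) / n) for l in labels)
    kappa = (observed - expected) / (1 - expected) if expected < 1 else 1.0
    return observed, kappa

# "M" = match, "N" = non-match, for the same ten record pairs.
reviewer_a = ["M", "M", "N", "N", "M", "N", "M", "N", "M", "N"]
reviewer_b = ["M", "M", "N", "N", "M", "N", "M", "N", "M", "M"]
observed, kappa = agreement_stats(reviewer_a, reviewer_b)
```

Here the reviewers agree on 9 of 10 pairs, but because half of that agreement would be expected by chance, kappa comes out lower than the raw rate.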

Negative controls act like a smoke detector for your linkage algorithm. These test cases should never match – think records with different genders or birth dates decades apart. If your algorithm links these impossible pairs, you know something’s seriously wrong.

Temporal validation examines whether linked records maintain logical chronological relationships. A person shouldn’t appear to give birth before their own birth date, or receive medical care after their recorded death. The Veterans Affairs linkage system includes 47 different temporal validation rules that flag biologically or logically impossible sequences.

Implausible links analysis examines linked records for biological or logical impossibilities. A person appearing to give birth at age 5 signals linkage errors that need investigation. Geographic validation checks whether linked records show reasonable travel patterns – someone shouldn’t appear in hospitals 1,000 miles apart on the same day.
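Checks like these are straightforward to express as rules over a linked person’s event history. The sketch below implements three hypothetical rules; the record layout, the event type names, and the age cut-off of 10 years are assumptions for illustration, not rules taken from any named system.

```python
from datetime import date

def temporal_flags(person: dict) -> list:
    """Return descriptions of chronologically impossible events for one linked person."""
    flags = []
    birth, death = person["birth_date"], person.get("death_date")
    for event in person["events"]:
        when = event["date"]
        if when < birth:
            flags.append(f"{event['type']} before birth")
        if death is not None and when > death:
            flags.append(f"{event['type']} after death")
        if event["type"] == "delivery":
            age_years = (when - birth).days / 365.25
            if age_years < 10:  # assumed plausibility cut-off
                flags.append("delivery at implausible maternal age")
    return flags

linked_person = {
    "birth_date": date(2010, 5, 1),
    "death_date": date(2020, 1, 1),
    "events": [
        {"type": "admission", "date": date(2021, 3, 1)},
        {"type": "delivery", "date": date(2015, 6, 1)},
    ],
}
flags = temporal_flags(linked_person)
```

A flag does not prove a false match, since source data can contain date errors too, so flagged individuals are usually routed to clerical review rather than unlinked automatically.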

Cluster analysis looks at how many records link to each unique individual. If you’re seeing unusually large clusters – like one person apparently having 50 hospital visits in a single day across different cities – you might be over-linking records. The Australian Institute of Health and Welfare flags any individual with more than 20 linked records in a single dataset for manual review.
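A cluster-size check needs only a counter over the link table. The sketch assumes links are stored as (person key, record ID) pairs and uses the threshold of 20 mentioned above as a default.

```python
from collections import Counter

def oversized_clusters(links, max_size=20):
    """Return {person_key: record_count} for clusters larger than max_size.

    links: iterable of (person_key, record_id) pairs from one dataset.
    """
    sizes = Counter(person for person, _ in links)
    return {person: n for person, n in sizes.items() if n > max_size}

links = [("P1", i) for i in range(25)] + [("P2", i) for i in range(3)]
flagged = oversized_clusters(links)
```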

Completeness assessment compares your linked dataset against external benchmarks. If you’re linking birth certificates with hospital discharge records, the number of linked birth records should approximate known birth rates for your population. Significant discrepancies suggest systematic linkage failures.

When measuring quality, researchers focus on several key metrics. Sensitivity tells you what percentage of true matches you successfully identified, while specificity shows how well you avoided linking records that don’t belong together. Positive predictive value reveals what proportion of your identified matches are actually correct. Good linkage algorithms typically achieve values exceeding 95% across these measures.
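Given a clerically reviewed sample, these three metrics follow directly from the confusion counts. The counts below are invented for illustration.

```python
def linkage_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute quality metrics from reviewed pair counts.

    tp: linked pairs that are true matches      fp: linked pairs that are not
    fn: true matches the algorithm missed       tn: non-matches correctly left unlinked
    """
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
    }

metrics = linkage_metrics(tp=950, fp=30, fn=50, tn=8970)
```

Because non-matching pairs vastly outnumber matching pairs in most linkages, specificity tends to sit close to 100% even for a poor algorithm, so sensitivity and PPV are usually the more informative pair.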

Advanced Quality Metrics

Capture-recapture methods estimate the total number of true matches in your dataset, even when you don’t have complete ground truth. By comparing linkage results from different algorithms or parameter settings, you can estimate how many matches all methods missed.
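The simplest two-source version of this idea is the Lincoln-Petersen estimator, shown here in Chapman’s bias-corrected form. It assumes the two methods find matches independently, which two algorithms run on the same identifiers rarely satisfy, so treat the output as a rough indication. The sets of match IDs are invented.

```python
def chapman_estimate(found_a: set, found_b: set) -> float:
    """Chapman's estimator of the total number of true matches.

    found_a, found_b: match IDs identified by two different methods,
    assumed (strongly) to capture true matches independently.
    """
    both = len(found_a & found_b)
    return (len(found_a) + 1) * (len(found_b) + 1) / (both + 1) - 1

method_a = set(range(0, 90))    # 90 matches found by method A
method_b = set(range(10, 100))  # 90 matches found by method B, 80 shared with A
estimated_total = chapman_estimate(method_a, method_b)
estimated_missed = estimated_total - len(method_a | method_b)
```

When the methods are positively dependent (both miss the same hard cases), this kind of estimate understates the number of missed matches.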

Bias assessment examines whether linkage quality varies across demographic groups. The UK Biobank linkage validation revealed that linkage success rates varied by ethnicity, age, and socioeconomic status, leading to algorithm adjustments that improved equity in linkage quality.

Longitudinal consistency checks whether the same individuals link consistently across multiple time periods. If someone links successfully in 2020 and 2022 data, they should also link in 2021 data (assuming they appear in all three datasets).

Handling Linkage Error in Analysis

Once you understand your linkage quality, the real challenge begins: how do you account for those errors in your research analysis?

Multiple imputation treats linkage uncertainty like any other missing data problem. Instead of assuming one “correct” linkage, you generate multiple plausible scenarios, analyze each separately, then combine the results. The MICE (Multiple Imputation by Chained Equations) approach can incorporate linkage uncertainty alongside other sources of missing data.
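The "combine the results" step is usually done with Rubin’s rules: average the estimates, then add the between-scenario variance to the average within-scenario variance. The sketch below assumes each plausible linkage scenario has already been analysed and has yielded an estimate and its variance; the numbers are invented.

```python
from statistics import mean, variance

def pool_rubin(estimates: list, variances: list) -> tuple:
    """Pool per-scenario estimates with Rubin's rules.

    Returns (pooled estimate, total variance).
    """
    m = len(estimates)
    pooled = mean(estimates)
    within = mean(variances)          # average sampling variance
    between = variance(estimates)     # sample variance across scenarios (divisor m - 1)
    total = within + (1 + 1 / m) * between
    return pooled, total

# Five linkage scenarios, each analysed separately.
estimates = [1.0, 1.2, 1.1, 0.9, 1.3]
variances = [0.04] * 5
pooled, total_variance = pool_rubin(estimates, variances)
```

The between-scenario term is what carries linkage uncertainty into the final confidence interval; analysing a single "best" linkage drops it entirely.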

Probabilistic sensitivity analysis systematically varies linkage error assumptions to test result robustness. If your study conclusions remain stable across reasonable error scenarios (say, 2-10% false match rates), you can report findings with confidence. If conclusions flip dramatically with small error rate changes, linkage uncertainty dominates your results.

Quantitative bias analysis helps you understand how different levels of linkage error might affect your study conclusions. Monte Carlo simulations can explore scenarios like “what if 5% of my matches are wrong?” The E-value, originally developed for unmeasured confounding, offers an analogous way to ask how strong a bias such as linkage error would need to be to explain away your observed associations.
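A small simulation illustrates the "what if some matches are wrong?" question. The sketch assumes a simple setting: a binary exposure, a binary outcome with risks of 30% versus 10%, and false matches that attach a randomly chosen person’s outcome. All of these settings, and the function name, are illustrative.

```python
import random

def simulated_risk_difference(false_match_rate: float, n: int = 20000, seed: int = 1) -> float:
    """Estimate the exposed-minus-unexposed risk difference under a given false match rate."""
    rng = random.Random(seed)
    exposure = [rng.random() < 0.5 for _ in range(n)]
    # True outcome risk: 30% if exposed, 10% if not (true risk difference 0.2).
    outcome = [rng.random() < (0.3 if x else 0.1) for x in exposure]
    linked = []
    for y in outcome:
        if rng.random() < false_match_rate:
            linked.append(outcome[rng.randrange(n)])  # outcome taken from a random person
        else:
            linked.append(y)
    exposed = [y for x, y in zip(exposure, linked) if x]
    unexposed = [y for x, y in zip(exposure, linked) if not x]
    return sum(exposed) / len(exposed) - sum(unexposed) / len(unexposed)

results = {rate: simulated_risk_difference(rate) for rate in (0.0, 0.1, 0.3)}
```

In this setting random false matches pull the estimate toward zero, roughly in proportion to the false match rate. Errors that depend on exposure or outcome can bias results in either direction, which is why the error mechanism matters as much as the error rate.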

Sometimes certain groups are harder to link than others. Inverse probability weighting can adjust for these differential linkage rates, helping maintain the representativeness of your linked dataset. This approach requires modeling the probability of successful linkage as a function of observable characteristics.
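The simplest such model is the observed linkage rate within each stratum, with the weight being its inverse. The sketch assumes each source record carries a `linked` flag and a grouping field (here a hypothetical `group`); a real analysis would more likely fit a regression model on several characteristics.

```python
from collections import defaultdict

def linkage_weights(records: list, stratum_field: str) -> dict:
    """Return {stratum: weight}, where weight = 1 / observed linkage rate."""
    totals, linked = defaultdict(int), defaultdict(int)
    for rec in records:
        totals[rec[stratum_field]] += 1
        linked[rec[stratum_field]] += int(rec["linked"])
    weights = {}
    for stratum, total in totals.items():
        rate = linked[stratum] / total
        weights[stratum] = 1 / rate if rate > 0 else None  # no linked records to weight
    return weights

# Group A links 90 of 100 records; group B links only 60 of 100.
records = (
    [{"group": "A", "linked": i < 90} for i in range(100)]
    + [{"group": "B", "linked": i < 60} for i in range(100)]
)
weights = linkage_weights(records, "group")
```

Linked records from group B then count for more in the analysis, restoring the group’s share of the population, on the assumption that linked and unlinked members of a group are otherwise similar.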

Sensitivity analyses test whether your substantive conclusions change when you use different linkage thresholds or algorithms. Robust findings should remain consistent across reasonable parameter choices. The triangulation approach compares results from deterministic, probabilistic, and machine learning linkage methods to identify findings that replicate across approaches.

Bayesian approaches naturally incorporate linkage uncertainty into statistical inference. Instead of treating linkage as a preprocessing step, Bayesian methods simultaneously estimate linkage probabilities and substantive parameters of interest, properly propagating uncertainty throughout the analysis.

For transparent reporting, established guidelines like RECORD and GUILD provide frameworks for describing your linkage methods clearly. Health Data Standardisation offers additional guidance on maintaining quality standards throughout the entire linkage process.

Privacy, Security & Getting Access to Linked Data

When you’re working with data linkage, you’re handling some of the most sensitive information that exists. Getting the privacy and security right isn’t just a nice-to-have; it’s absolutely essential for legal compliance and keeping the public’s trust.

The Five Safes framework gives us an internationally recognized roadmap: safe projects that serve the public good, safe people who are properly trained, safe data that’s been de-identified, safe settings with secure computing environments, and safe outputs that don’t allow re-identification.

Consent requirements vary depending on your jurisdiction and data sources. De-identification is almost always required, removing or encrypting direct identifiers before linkage occurs. Modern trusted research environments provide secure computing spaces where researchers can access linked data without the ability to download individual records.
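One widely used building block for de-identified linkage is a keyed hash of normalised identifiers, so that parties holding the same secret can match on the hash without exchanging names or dates of birth. The sketch below uses HMAC-SHA256 from the Python standard library; the function name, the choice of fields, and the normalisation rules are assumptions, and a real deployment also needs key management and governance that code alone cannot provide.

```python
import hashlib
import hmac

def linkage_key(secret: bytes, *identifiers: str) -> str:
    """Derive a pseudonymous linkage key from normalised identifiers.

    The same secret and identifiers always give the same key, so records
    can be matched on the key without revealing the identifiers themselves.
    """
    normalised = "|".join(part.strip().upper() for part in identifiers)
    return hmac.new(secret, normalised.encode("utf-8"), hashlib.sha256).hexdigest()

SECRET = b"example-secret-held-by-the-linkage-unit"  # placeholder, never hard-code in practice

key_1 = linkage_key(SECRET, "Smith ", "1985-01-15")
key_2 = linkage_key(SECRET, "SMITH", "1985-01-15")
key_other_secret = linkage_key(b"a-different-secret", "SMITH", "1985-01-15")
```

Because any change to the input changes the whole hash, this technique only supports exact matching, which makes careful cleaning before hashing essential.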

Applying for Linked Data Access

Getting access to linked data often feels like navigating a maze of approvals, but each step serves an important purpose in protecting people’s privacy.

Data custodian approvals come first. These are the organizations that actually own and manage the datasets you want to link. Each has its own approval process, timeline, and requirements. Some can take months, so building extra time into your project timeline is wise.

Human Research Ethics Committees (HRECs), known in some countries as institutional review boards or research ethics committees, review whether your research meets ethical standards. Even though you’re using existing administrative data, ethics approval is typically required.

Major linked data systems have streamlined these processes. SDLE at Statistics Canada provides social data linkage services. Wales’ SAIL Databank offers population-scale linked data with strong privacy protections. PopData BC serves British Columbia with longitudinal data on millions of residents. Australia’s AIHW provides national linkage services, while England’s ONS Secure Research Service offers similar capabilities for UK researchers.

Balancing Transparency & Trust

Data linkage research depends on public trust. Building that trust requires genuine transparency and community engagement.

Public engagement means actively communicating with communities about how their data is being used and what benefits result. When people understand that linked data research led to better COVID-19 responses or improved cancer screening programs, they’re more likely to support it.

Ethnic bias mitigation deserves special attention because linkage quality often varies across different demographic groups. Regular assessment and algorithm adjustment help ensure fair linkage quality across all populations.

Privacy impact assessments systematically evaluate risks throughout the entire linkage process. The cutting edge involves secure multiparty computation techniques that enable record matching across organizations without ever sharing raw identifiers.

As one expert put it: “The biggest barriers to realising the full potential of data linkage are public trust and the inefficiencies in data-access processes.” Health Data Linkage: Promise & Challenges explores how we can navigate these complex issues while unlocking the full potential of connected health data.

Frequently Asked Questions about Data Linkage

What is the difference between deterministic and probabilistic data linkage?

Deterministic linkage is the perfectionist approach. Two records link only if they agree perfectly on specific fields like NHS numbers, birth dates, and postcodes. No wiggle room, no exceptions. This works beautifully when your data is pristine, but one tiny typo and you’ve lost a valid match.

Probabilistic linkage is more like a detective weighing evidence. Instead of demanding perfection, it asks “how likely is it that these records belong to the same person?” Maybe the surnames match perfectly (+15 points), the first names are similar (+8 points), but the addresses differ slightly (-3 points). Add it all up, and if the total score crosses your threshold, you’ve got a match.

The practical choice comes down to your data quality. Got clean data with reliable unique identifiers? Deterministic linkage will serve you well. Wrestling with inconsistent, error-prone datasets? Probabilistic methods are your friend.

How do I know if linkage errors bias my results?

Start by looking at who gets left out. If your linkage success varies dramatically across different groups, you might be accidentally excluding important populations.

Test your assumptions by running the same analysis with different linkage thresholds. Switch from conservative matching to liberal matching and see what happens to your conclusions. If your main findings flip dramatically, linkage uncertainty is driving your results more than the actual data patterns.

Compare the linked and unlinked records on whatever characteristics you can observe. Are the people who didn’t get matched systematically different? These patterns tell you whether your linked dataset truly represents your target population.

The goal isn’t perfect linkage – that’s rarely achievable. The goal is understanding your limitations and being transparent about how they might affect your conclusions.

Do I always need individual consent for data linkage projects?

Consent requirements for data linkage depend on a complex web of factors that vary dramatically across jurisdictions and contexts.

Government administrative data often operates under different rules than clinical research data. Many countries allow approved researchers to link health administrative records without individual consent, provided there are strong ethics oversight and privacy protections.

The purpose of your research matters enormously. Public health surveillance and approved academic research often get more flexibility than commercial applications. De-identification levels also influence consent requirements.

Here’s the practical advice: start the conversation early. Consult with ethics committees, legal counsel, and data protection officers during project planning. The regulatory landscape keeps evolving, and what’s acceptable in one place might be prohibited elsewhere.

Conclusion

The world of data linkage is changing rapidly. What started as simple exact-match algorithms has evolved into sophisticated AI-driven systems that can connect millions of records while preserving privacy and maintaining accuracy.

Federated analytics represents the next frontier. Instead of moving sensitive data around the globe, we’re bringing the analysis to where the data lives. This approach solves the tension between collaboration and privacy, letting researchers work together without compromising data sovereignty.

The AI acceleration we’re witnessing makes previously impossible linkage tasks feel routine. Machine learning algorithms can now handle complex string variations and data quality issues that would have stumped traditional methods. What used to require months of manual work can happen in hours, with better accuracy.

Governance frameworks are finally catching up with the technology. We’re seeing more nuanced approaches to consent, better frameworks for balancing transparency with privacy, and clearer pathways for researchers to access linked data.

At Lifebit, we’ve built our platform around these emerging realities. Our Trusted Research Environment (TRE) provides the secure workspace that modern data linkage demands, while our Trusted Data Lakehouse (TDL) handles the complex harmonization that makes multi-source linkage possible. The R.E.A.L. (Real-time Evidence & Analytics Layer) delivers the kind of instant insights that researchers have dreamed about for decades.

When we successfully link health records with social services data, we reveal the housing conditions that affect recovery rates. When we connect clinical trials with long-term administrative follow-up, we understand real-world treatment effectiveness. These translate into better clinical decisions, more effective public health policies, and ultimately, healthier communities.

Data linkage has matured from a specialized research technique into essential infrastructure for evidence-based healthcare. Whether you’re planning your first linkage project or optimizing systems that connect millions of records, the algorithms matter, but what really matters is using connected information to serve human needs better.

Ready to see how federated data linkage can transform your research capabilities? Find out more about the Lifebit Platform and explore how we’re making secure, scalable data connections accessible to organizations worldwide.