Unboxing Docker’s AI Magic for Seamless Deployments

AI for Docker: Seamless Magic 2025

The Strategic Advantage of Docker for AI

The combination of AI and Docker is rapidly changing how organizations develop, deploy, and manage intelligent applications, making complex AI/ML projects feasible and efficient.

Docker is essential for AI/ML projects because it offers:

- Consistency: Ensures AI models run identically across all environments.

- Reproducibility: Guarantees experiments can be replicated precisely, crucial for research.

- Simplified Deployment: Packages everything an AI application needs into a portable unit.

- Isolation: Prevents dependency conflicts and improves security.

- Scalability: Enables easy scaling of AI agents and models.

- Resource Efficiency: Optimizes the use of underlying hardware, including GPUs.

AI and machine learning (ML) projects often face a significant hurdle: managing complex environments and dependencies. Developers frequently encounter the frustrating “it works on my machine” problem, where code runs perfectly in one setting but fails elsewhere. This inconsistency slows down development, complicates collaboration, and hinders deployment.

Docker solves these challenges. It provides a consistent, isolated, and portable environment for your AI/ML code. Think of it as a standardized shipping container for your software. Everything your AI application needs—code, runtime, libraries, settings—is packaged together. This ensures your models behave the same way, everywhere.

This approach is vital for organizations handling sensitive data, like in healthcare or public sector research. It allows for secure, compliant, and real-time analysis of large datasets without moving the data itself.

I’m Dr. Maria Chatzou Dunford. My 15+ years in computational biology, AI, and high-performance computing, including contributions to Nextflow, have shown me the profound impact that Docker-based AI workflows have on healthcare data analysis. At Lifebit, we leverage Docker’s containerization to enable scalable and compliant AI-powered evidence generation for secure, federated data environments.

Why Docker is the Bedrock for Modern AI/ML

Ah, the classic developer’s lament: “It works on my machine!” This seemingly innocent phrase often hides a deeper, more frustrating truth: the dreaded “dependency hell,” or environment chaos. In the intricate world of AI and machine learning (ML), where specific versions of libraries, frameworks, and drivers are critical, this problem gets amplified. But fear not! Docker swoops in like a superhero, providing a consistent and portable environment so your code runs the same way across systems. It truly is the bedrock on which modern AI workflows are built.

So, why is this so important? First off, it’s vital for reproducible research. In scientific and machine learning endeavors, being able to precisely repeat your experiments isn’t just a nice bonus; it’s a fundamental requirement. Docker neatly packages everything up, so your models and experiments run in the exact same software environment, no matter where they’re run. (Bit-for-bit identical results still depend on fixed random seeds and comparable hardware.) Secondly, it tackles the scalability needs of AI. As our models grow in complexity and usage, they need to scale efficiently. Docker’s lightweight nature and isolation capabilities make this far easier.

What’s more, Docker provides crucial isolation. This doesn’t just prevent those annoying dependency conflicts (saving us countless hours of head-scratching debugging!), but also significantly boosts security. Each container runs in its own little bubble, minimizing the risk of one application messing with another or vulnerabilities spreading. All of this makes Docker an indispensable foundation for MLOps, streamlining the entire journey from initial development all the way to deployment and beyond. If you’re keen to dive deeper into making your machine learning delivery smoother, we highly recommend exploring some Lessons on Continuous Delivery in Machine Learning.

Consistency Across Development, Staging, and Production

One of Docker’s biggest gifts to AI projects is its ability to create environment parity. Imagine this: your data scientists build an amazing AI model using a specific version of TensorFlow and Python. Without Docker, making sure that exact environment is perfectly copied onto a staging server for testing, and then onto a production server for deployment, can be a real nightmare. You’d spend ages battling “configuration drift,” where tiny differences in system libraries or package versions lead to unexpected errors.

Docker sweeps this problem away by packaging your application and all its dependencies into an unchanging image. This image then effortlessly spins up identical containers across your development, staging, and production environments. No more endless struggles with Python virtual environments clashing with system-wide installations; Docker brings its own completely encapsulated world. This consistency doesn’t just make your life easier; it dramatically speeds up your development cycle and makes onboarding new team members an absolute breeze. Everyone works with the same, consistent dependencies, right from the very start.
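One way to check the parity described above is to compute a fingerprint of the Python environment inside each container and compare the values across development, staging, and production. The sketch below is only an illustration: environment_fingerprint is a hypothetical helper, not part of Docker, and it covers Python packages only, not system libraries.

```python
import hashlib
import platform
from importlib import metadata


def environment_fingerprint():
    """Hash the Python version and every installed package version.

    Hypothetical helper: two containers started from the same image
    should return the same value; a mismatch hints at configuration drift.
    """
    packages = sorted(
        f"{(dist.metadata.get('Name') or '').lower()}=={dist.version}"
        for dist in metadata.distributions()
    )
    payload = "\n".join([platform.python_version(), *packages])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    print(environment_fingerprint())
```

Running the script in each environment and comparing the printed hashes gives a quick, if coarse, drift check.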

Reproducibility and Simplified Deployment

Reproducibility is truly the holy grail in AI/ML research. If we can’t reproduce our results, well, our findings are, frankly, questionable. Docker directly addresses this “reproducibility crisis” by making sure your experiments can be replicated precisely, every single time. It achieves this by packaging your models, code, and their exact dependencies into a Docker image. This image can then be versioned using tags, giving you a clear, traceable history of your model’s evolution.

When it comes to deployment, Docker transforms what could be a complex, multi-step headache into a single, neat command. With a pre-built Docker image, deploying your AI model becomes as simple as typing docker run my_ai_model:v1.0. This streamlined process integrates well with Continuous Integration/Continuous Deployment (CI/CD) pipelines, letting you automate the building, testing, and deployment of your AI applications. This simplicity is particularly helpful when tackling AI Challenges in Research and Drug Discovery, where rapid iteration and reliable deployment are key. Docker makes deploying ML models as services a smooth operation, allowing for easy scaling and management.
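As a rough illustration of deploying a versioned image, the following Python sketch assembles a docker run command for an explicit tag. The helper name build_run_command and the port number are hypothetical; --rm, -p, and --gpus are standard docker run options. The function only builds the argument list, so nothing is started unless you hand the result to subprocess.run yourself.

```python
import shlex


def build_run_command(image, tag, port=None, gpus=False):
    """Return the argv list for `docker run` with an explicit image tag.

    Hypothetical helper for illustration. It rejects the floating
    `latest` tag because that tag does not identify a fixed version.
    """
    if not tag or tag == "latest":
        raise ValueError("use an explicit version tag, e.g. v1.0")
    cmd = ["docker", "run", "--rm"]
    if gpus:
        # Expose all host GPUs (needs the NVIDIA Container Toolkit on the host).
        cmd += ["--gpus", "all"]
    if port is not None:
        # Publish the same port number on the host and in the container.
        cmd += ["-p", f"{port}:{port}"]
    cmd.append(f"{image}:{tag}")
    return cmd


if __name__ == "__main__":
    # Prints the command instead of executing it.
    print(shlex.join(build_run_command("my_ai_model", "v1.0", port=8000)))
```

Refusing latest is a design choice in this sketch, not a Docker requirement; it keeps each deployment traceable to one tagged image.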

Core Docker Features Simplifying AI Workflows

When you’re diving into AI development with Docker, you’ll quickly find that Docker isn’t just one tool; it’s an entire ecosystem designed to make your life easier. The platform offers a comprehensive suite of features that seem almost tailor-made for the unique challenges we face in AI/ML workflows.

Think of Dockerfiles as your recipe book for creating the perfect AI environment. These simple text files contain step-by-step instructions that tell Docker exactly how to build your application’s container. No more guessing games or “it worked yesterday” moments – just clear, reproducible instructions.

Docker Compose is where things get really exciting for multi-service applications. Instead of juggling multiple containers manually, you can define your entire AI stack – database, model server, API, frontend – in a single configuration file. It’s like having a conductor for your container orchestra.

Docker Hub serves as your go-to marketplace for pre-built environments. Why spend hours setting up a TensorFlow environment from scratch when someone has already done the heavy lifting? You can find thousands of ready-to-use images that’ll get you up and running in minutes.

For AI developers, GPU support is absolutely crucial. With the NVIDIA Container Toolkit installed on the host, containers can access your graphics cards (for example via docker run --gpus all), so your deep learning models get GPU acceleration without the usual per-project library headaches. Multi-stage builds help keep your final images lean and secure by separating what you need to build your app from what you need to run it.

When you’re ready to scale beyond a single machine, Docker Swarm and Kubernetes integration provide the orchestration power you need for production-grade deployments. These tools help you manage hundreds or thousands of containers across multiple servers with ease.

Essential Docker Images for AI Workflows

The magic of running AI workflows on Docker really shines when you discover the treasure trove of pre-built images available on Docker Hub. These aren’t just any images; they’re carefully crafted environments that can save you days of setup time and countless troubleshooting headaches.

Starting with the foundation, the official Python image serves as the bedrock for most machine learning projects. It’s clean, well-maintained, and gives you a solid starting point for building your custom AI environments.

When it comes to deep learning frameworks, both PyTorch and TensorFlow offer official images that come pre-loaded with the framework and, in their GPU variants, the matching CUDA libraries. This means you can skip the notorious “CUDA installation dance” inside the container; only the NVIDIA driver still has to be installed on the host.

For GPU-accelerated workloads, the NVIDIA CUDA runtime images are your best friend. They provide the CUDA user-space libraries your deep learning models need, packaged neatly inside the container where they can’t conflict with your host system. The GPU driver itself stays on the host and is exposed to containers through the NVIDIA Container Toolkit.

Interactive development gets a boost with Jupyter Docker Stacks. These images come loaded with Jupyter Notebook, popular data science libraries, and even GPU support for those compute-intensive experiments.

The Ollama image has become a game-changer for anyone working with large language models locally. It simplifies the entire process of deploying and running LLMs, making local experimentation accessible to everyone.

Finally, MLflow containers help you manage the entire machine learning lifecycle, from tracking experiments to packaging models and managing registries.

Managing Multi-Container AI Applications with Docker Compose

Real-world AI applications are rarely simple, single-container affairs. Modern AI applications typically involve multiple moving parts: a vector database storing embeddings, an LLM service for language processing, a Flask API handling inference requests, and maybe a sleek frontend for users to interact with your AI.

Managing all these interconnected services manually would be like trying to conduct an orchestra while playing every instrument yourself. That’s where Docker Compose becomes your conductor’s baton.

With Docker Compose, you can define your entire AI ecosystem in a single docker-compose.yml file. Picture this: you’re building a smart document search system. Your setup might include a Qdrant vector database for similarity searches, an Ollama service running your chosen LLM, a Python Flask application that orchestrates everything, and a React frontend for users.

Instead of starting each service individually and hoping they can find each other, Docker Compose brings up your entire stack with one simple command: docker compose up. The networking between containers happens automatically, and your local development environment mirrors your production setup perfectly.

This approach has become even more powerful now that Compose is production ready, supporting deployments to cloud services like Google Cloud Run and Azure. You can build and test locally, then deploy anywhere with confidence. It’s the bridge that takes your AI prototype from your laptop to serving real users in production.
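The document-search stack described above could be sketched roughly as follows. To stay in Python, the example builds the Compose definition as a dictionary and prints it as JSON, on the assumption that Compose can read it because JSON is a subset of YAML. The image names qdrant/qdrant and ollama/ollama and their default ports are the public ones; the build paths, the Flask and React ports, and the QDRANT_URL and OLLAMA_URL variable names are hypothetical.

```python
import json


def build_compose():
    """Return a Compose definition for the example stack as a plain dict."""
    return {
        "services": {
            "qdrant": {
                "image": "qdrant/qdrant",
                "ports": ["6333:6333"],
            },
            "ollama": {
                "image": "ollama/ollama",
                "ports": ["11434:11434"],
            },
            "api": {
                "build": "./api",  # hypothetical path to the Flask app
                "ports": ["5000:5000"],
                "environment": {
                    # Service names double as hostnames on the Compose network.
                    "QDRANT_URL": "http://qdrant:6333",
                    "OLLAMA_URL": "http://ollama:11434",
                },
                "depends_on": ["qdrant", "ollama"],
            },
            "frontend": {
                "build": "./frontend",  # hypothetical path to the React app
                "ports": ["3000:3000"],
                "depends_on": ["api"],
            },
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_compose(), indent=2))
```

Saved to a file such as compose.json, the output should be loadable with docker compose -f compose.json up. The point to notice is that the API reaches the other services by service name, which is the automatic networking mentioned earlier.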

The Rise of AI for Docker: Intelligent Container Management

While AI code-generation tools have been boosting developer productivity by up to 10X, they primarily focus on source code, which accounts for only 10% to 15% of an application. What about the other 85% to 90%? We’re talking about databases, language runtimes, frontends, and all the intricate configurations defined by Dockerfiles, Docker Compose files, and Docker images. This is where AI for Docker takes center stage, moving beyond simple code generation to intelligent container management.

Docker has introduced Docker AI, an AI-powered product designed to boost developer productivity by tapping into the vast universe of Docker developer wisdom. This isn’t just another code generator; it’s about providing context-specific, automated guidance for defining and troubleshooting all aspects of an application beyond just source code. Imagine having an intelligent assistant, affectionately known as Gordon, that understands your containers, predicts challenges, and actively helps you solve them. This is the dawn of AI-native DevOps, where AI is intrinsically woven into every stage of the DevOps lifecycle.

How Docker AI Boosts Developer Productivity

Docker GenAI Gordon is a game-changer, revolutionizing how we interact with containerized environments. It brings AI-driven intelligence directly into our Docker workflows, simplifying tasks that once required extensive manual effort or deep expertise. Here’s how it boosts our productivity:

- Automated Dockerfile Generation: Starting a new project? Gordon can generate optimized Dockerfiles, ensuring best practices and error-free deployment.

- Troubleshooting Assistance: When a container misbehaves, Gordon can analyze logs and configurations to provide AI-powered troubleshooting. It can even suggest fixes, reducing downtime and operational bottlenecks. We can simply ask

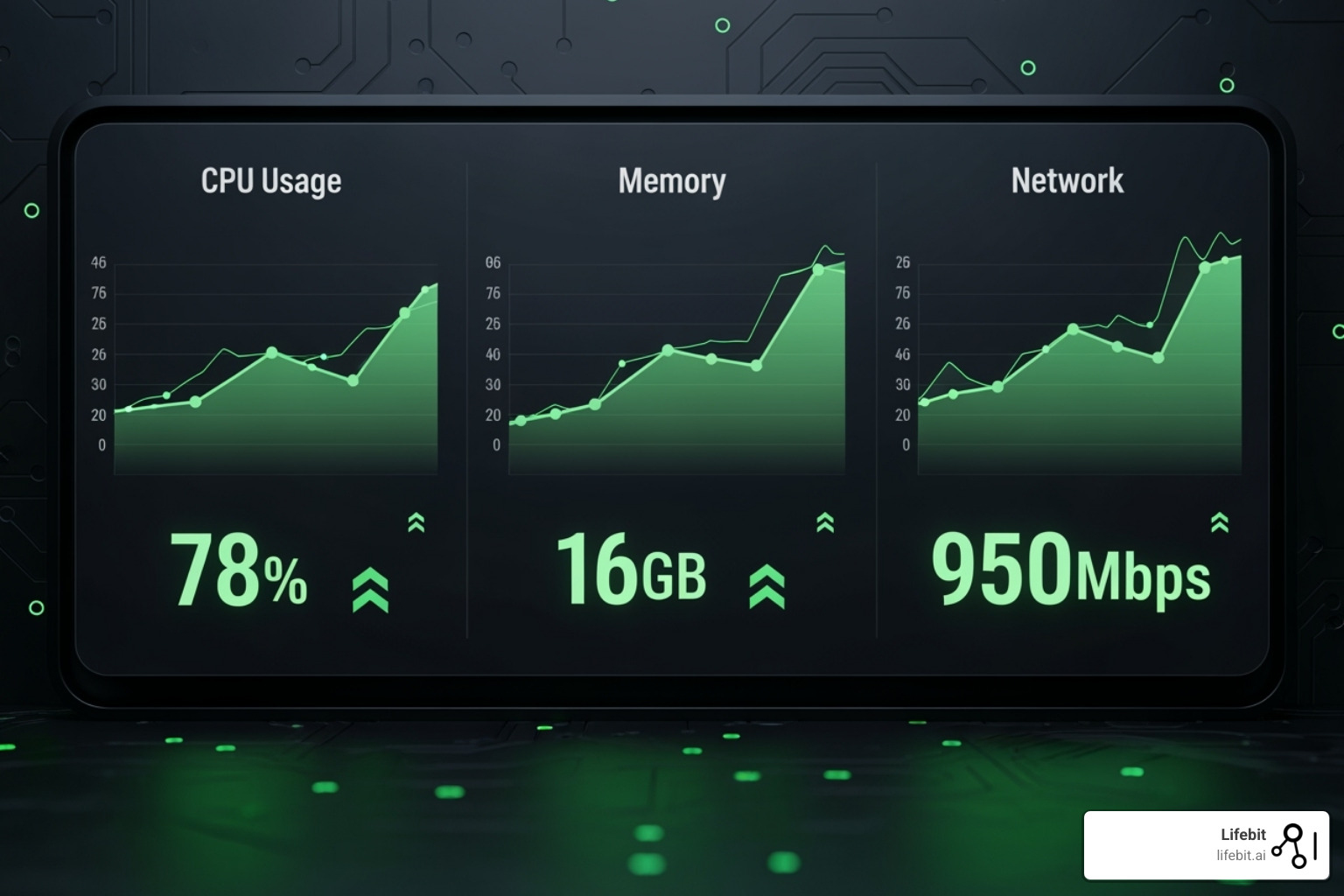

docker genai troubleshoot my-container. - Resource Optimization: For AI workloads, optimizing resource allocation (CPU, GPU, memory) is critical. Gordon can help us fine-tune these settings, ensuring our deep learning models run efficiently. For example,

docker genai optimize --container my-ai-workload. - Security Scanning: Security is paramount. Gordon can scan our container images for vulnerabilities, helping us ensure compliance with security best practices before deployment:

docker genai security-scan my-image:latest. - Natural Language Interaction: Perhaps the most feature is Gordon’s ability to understand natural language queries. We can simply ask

docker genai "How do I scale my container?", and Gordon will provide execution steps, best practices, and even modify configurations for us. This conversational AI for DevOps makes complex tasks accessible and reduces the learning curve.

Gordon is not just a tool; it’s an AI-powered assistant that makes our lives easier by streamlining Docker workflows. For more details, we can always refer to the official Docker GenAI Gordon Documentation.

From Code Generation to Full-Stack Application Assembly

Traditional code generation AIs are fantastic for writing source code, but applications are far more than just code. They are complex ecosystems of web servers, language runtimes, databases, message queues, and intricate configuration files. This is the “other 85%” that has historically been manual and prone to errors. Docker AI steps in to address this crucial gap, bringing intelligence to the entire application assembly process.

By leveraging the collective wisdom of millions of Docker developers, some with over 10 years of experience, Docker AI can automatically generate best practices and select up-to-date, secure images for our applications. This means that when we’re defining our application’s components – whether it’s setting up a PostgreSQL database, configuring a Node.js runtime, or deploying an NGINX web server – Docker AI provides intelligent guidance. It helps us define and troubleshoot all aspects of our app quickly and securely as we iterate in our ‘inner loop’ of development. This ensures that our full-stack applications are not only functional but also secure and optimized, right from their inception.

From Theory to Practice: Real-World Applications and Best Practices

The theoretical benefits of combining AI and Docker are compelling, but what does this look like in the real world? The practical applications are diverse and impactful, demonstrating significant improvements in efficiency, cost, and performance across various industries.

From financial services leveraging AI-powered trading bots to hospitals integrating AI agents for disease diagnosis, Docker provides the robust, consistent, and scalable foundation needed for these critical applications. The ability to containerize, manage, and scale AI models effortlessly translates directly into tangible business advantages.

Real-World Success Stories

The impact of pairing AI with Docker is perhaps best illustrated through real-world case studies:

- Fintech Trading Bots: A leading fintech company deploying multiple AI-powered trading bots used Docker Swarm. They achieved a remarkable 40% improvement in execution speed, crucial for high-frequency trading. Furthermore, they reduced infrastructure costs by 30% and reported zero downtime during peak trading hours, demonstrating improved reliability. This showcases Docker’s ability to handle low-latency, dynamic scaling, and agent isolation.

- Healthcare Diagnosis: A hospital integrating AI agents with Docker and Kubernetes for disease diagnosis saw significant gains. They achieved 30% faster diagnosis times, directly impacting patient care by reducing wait times. This also led to lower operational costs and increased efficiency, highlighting Docker’s role in real-time analysis and efficient model deployment for critical healthcare applications.

- Faster Cluster Spin-ups: Retina.ai, a company managing client data for machine learning models, experienced a 3x faster cluster spin-up time by using custom Docker containers on its cloud platform. This was achieved by pre-compiling time-consuming packages within Docker containers, optimizing startup times and reducing costs associated with over-provisioning. This also unified their dependency management, ensuring reproducible data science.

These examples underscore the practical benefits: faster execution, reduced costs, improved reliability, and quicker insights – all powered by the robust foundation of Docker.

Best Practices for Containerizing with AI for Docker

To harness the full power of Docker for AI, adhering to best practices is crucial. These guidelines help us build efficient, secure, and maintainable containerized AI applications:

- Keep Images Lightweight: Our goal is minimal images. We should only include necessary dependencies in our Dockerfile. This reduces build times, image size, and attack surface. Multi-stage builds are our best friend here, allowing us to separate build-time tools from runtime necessities.

- Use a .dockerignore File: Similar to a .gitignore file, a .dockerignore file keeps certain files and directories out of the build context, so they cannot be copied into your Docker image. This is crucial for both performance and security. You should ignore build artifacts, local configuration files, secrets, and version control directories like .git. This not only keeps your image smaller and your build context faster to send but also prevents sensitive information from being accidentally baked into your image where it could be exposed.

- Use Multi-Stage Builds: This is a game-changer for reducing image size. We can compile our code and install dependencies in one stage, then copy only the essential artifacts to a much smaller final image. This also works well for Python, where we can pre-compile packages in the build stage to optimize startup times and keep compilers out of the final image.

- Manage Dependencies Effectively: Always use requirements.txt or similar files to declare Python dependencies with exact versions. This ensures reproducibility and avoids “it works on my machine” scenarios due to version conflicts.

- Optimize Dockerfile Layers: Instructions such as RUN, COPY, and ADD each create a layer. We should combine related commands where possible (e.g., RUN apt-get update && apt-get install -y ...) to minimize the number of layers and improve caching.

- Secure Base Images: Always start with trusted, official base images (e.g., python:3.9-slim-buster instead of a full OS image). Regularly update images to patch security vulnerabilities. Tools like Docker GenAI Gordon can help with security scanning.
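The advice on exact dependency versions can be enforced with a small check before building an image. The sketch below is a simplified illustration, not a full requirements parser: unpinned_requirements is a hypothetical helper, and it treats anything other than a plain name==version line (optionally with extras) as unpinned, which includes lines carrying environment markers or direct URLs.

```python
import re

# Matches "name==version", optionally with extras such as name[extra]==1.2.3.
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[A-Za-z0-9._,\s-]+\])?==[^=\s]+$")


def unpinned_requirements(text):
    """Return the requirement lines that are not pinned with `==`."""
    problems = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.match(line):
            problems.append(line)
    return problems


if __name__ == "__main__":
    sample = "numpy==1.26.4\ntorch>=2.0\nflask\n"
    for line in unpinned_requirements(sample):
        print(f"not pinned: {line}")
```

A check like this could run in CI before docker build, failing the pipeline whenever the returned list is not empty.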

By following these best practices, we ensure our Dockerized AI applications are not only functional but also efficient, secure, and easy to manage, from local development to large-scale deployments. For a quick reference, a Docker Cheatsheet can be incredibly useful. These practices are also key to deploying complex AI models like DeepVariant as Nextflow Pipeline on CloudOS.

The Future is Agentic: What’s Next for AI and Containerization

The journey of AI for Docker is truly just beginning! While we’ve already seen incredible advancements, the future promises even more intelligent and autonomous ways for AI and containers to work together. Imagine an era where our AI applications aren’t just running inside containers, but are actively managing and optimizing those containers themselves. This direction is often called AIOps, and it points toward what we call self-managing containers. It’s like having your AI models not only do their job but also act as their own IT support team, ensuring everything runs smoothly!

This vision brings us to the rise of “agentic apps.” These aren’t just regular programs; they are autonomous, goal-driven AI systems. Think of them as intelligent agents that can make decisions on their own, adapt in real-time, and handle complex situations within dynamic environments. Docker is right there, helping us build, run, and scale these smart agents. It’s simplifying everything from defining how these agents work to making sure they can grow and adapt as needed. This includes exciting features like AI-powered debugging, where AI helps pinpoint and fix issues, automated resource optimization to make sure your models use just the right amount of power, and an improved security posture to keep everything safe and sound. All of this is seamlessly orchestrated within the Docker ecosystem.

The economic potential of this powerful combination is truly staggering. Just by itself, Generative AI is expected to add an incredible $2.6 to $4.4 trillion in annual value to the global economy. That’s a huge impact! Clearly, AI isn’t just a buzzword; it’s a strategic necessity for businesses and research alike. And guess what? Containers are the main vehicle driving its widespread adoption. Docker keeps innovating with tools like Docker AI (Gordon), which acts as your intelligent assistant, and new Docker Desktop features that make it easier to run models and manage your AI projects. We’re actively working on building autonomous AI agents with Docker, truly scaling intelligence to new heights.

This future where AI and containerization are deeply linked doesn’t just bring efficiency; it creates a whole new way of developing software. And for critical applications, especially those dealing with sensitive data like in healthcare or public sector research, this perfectly aligns with the need for robust, secure, and compliant infrastructures. This is where concepts like a Trusted Research Environment become essential for the future of AI.

Conclusion

What a journey we’ve had! We’ve seen the impact of bringing AI and Docker together. It’s amazing how Docker has grown from a simple tool for packaging apps to an intelligent partner in the AI age. We’ve explored how it fixes those tricky problems like “dependency hell” and environment chaos. This makes our AI/ML projects far easier to reproduce and deploy, even the really complex ones.

We talked about Docker’s awesome core features, right? Things like Dockerfiles and Compose, plus powerful GPU support and Kubernetes integration. These are the sturdy building blocks that help us create, run, and scale AI workflows. And then there’s the exciting rise of Docker AI, with clever tools like Gordon. This is kicking off a whole new era of smart container management, where tasks get automated and developers get a huge productivity boost, far beyond just writing code. We even saw real-world wins in finance and healthcare, proving Docker brings big improvements in speed, cost, and how reliable things are.

Looking ahead, the future of AI for Docker is super exciting! Imagine “agentic apps” and containers that manage themselves: that’s the promise of AI and containerization working together. This isn’t just a small step forward; it’s a huge leap that gives developers more power and speeds up AI breakthroughs everywhere. Here at Lifebit, our own federated AI platform uses this exact powerful combo. It helps us do secure, large-scale AI research, giving real-time insights and smart safety monitoring in complex data environments.

The real magic of AI for Docker is how it takes messy, complicated AI development and turns it into something smooth, consistent, and scalable. It’s truly a vital partnership that will keep pushing the boundaries of intelligent applications. Ready to see it in action? We invite you to Explore the Lifebit Platform for secure, large-scale AI research and discover how we’re shaping the future of biomedical data analysis, right now.