The Art of Clinical Data Interpretation—Simplified

Clinical data interpretation: Mastered 2025

Why Clinical Data Interpretation is the Foundation of Modern Medicine

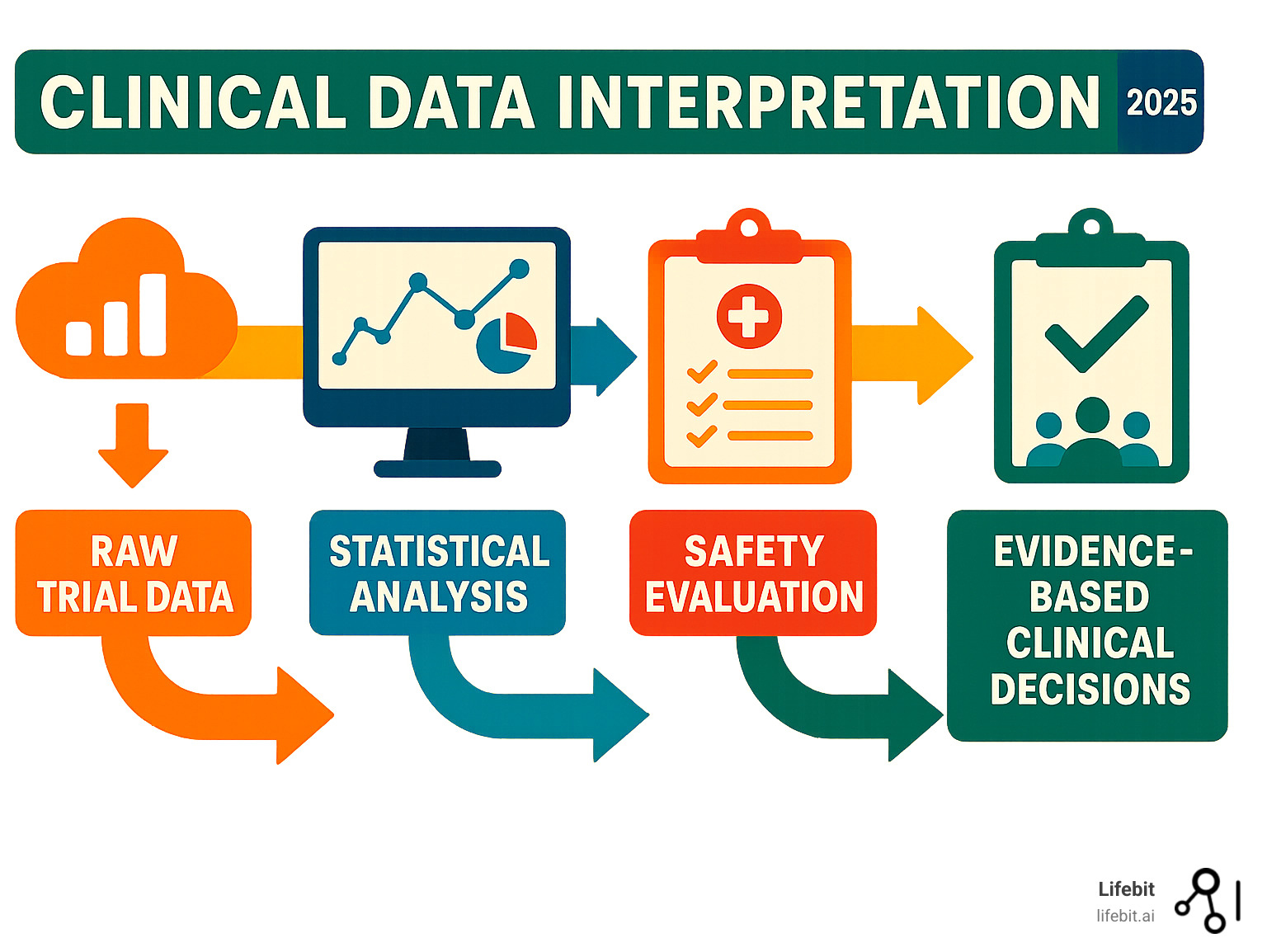

In the era of evidence-based medicine, the ability to critically evaluate clinical research is no longer an academic exercise; it is an essential skill for every healthcare professional. Clinical data interpretation is the rigorous process of analyzing information from medical studies to make informed, patient-centered decisions. It is the bridge that connects raw research data to actionable medical insights, transforming complex statistics and study results into better health outcomes. This skill involves a systematic evaluation of study design, statistical methods, and safety profiles to determine the true value and applicability of new findings.

Key components of clinical data interpretation include:

- Study validity assessment: This is the first gatekeeper of quality. It involves scrutinizing a trial’s methodology to determine if the results are likely to be true. Key questions include: Was the randomization process truly random? Was blinding effective in preventing bias? Were the groups comparable at baseline? Without strong internal validity, any conclusions drawn are built on a shaky foundation.

- Statistical analysis: This goes beyond simply looking at p-values. It requires a deep understanding of confidence intervals, which provide a range of plausible values for the true effect, and effect sizes, which measure the magnitude of the treatment difference. It’s about understanding not just if there’s an effect, but how big that effect is.

- Clinical significance evaluation: This is arguably the most important step. It involves asking whether a statistically significant finding actually matters to patients. A small, statistically proven benefit may not be worth the cost, side effects, or inconvenience. This requires distinguishing between changes in lab markers and meaningful improvements in how a patient feels, functions, or survives.

- Safety profiling: No treatment is without risk. A thorough interpretation involves balancing the demonstrated benefits against potential harms. This means carefully analyzing the frequency, severity, and nature of adverse events to create a complete risk-benefit profile that can be discussed with patients.

- Generalizability assessment: This component addresses the crucial question: “Do these study results apply to my specific patient?” It involves comparing the study’s population, setting, and intervention with the real-world clinical scenario to determine if the findings can be reliably extrapolated.

As one expert noted, “Clinical trials are impactful only when we can draw meaningful conclusions from them.” This challenge is immense. With over 18,000 ongoing clinical trials in the U.S. and more than 140 new oncology drug approvals in the past five years, healthcare professionals face a deluge of data. Compounding this, studies show that fewer than 50% of papers in top medical journals properly identify their analysis methods, and many published figures contain deficiencies that can mislead readers. The rise of real-world evidence and AI adds further layers of complexity, demanding an even more sophisticated approach to interpretation.

Fortunately, clinical data interpretation follows learnable principles. Whether evaluating a randomized controlled trial or complex real-world evidence, the same fundamental framework applies.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. I’ve spent over 15 years developing computational tools that enable better clinical data interpretation across genomics, real-world evidence, and federated healthcare datasets. My experience has shown me that the principles of sound interpretation remain constant, even as data sources and analytical tools become more sophisticated.

Foundations of a Clinical Trial: The Blueprint for Interpretation

Interpreting clinical data is like detective work. Before drawing conclusions, you must understand how the evidence was collected. A clinical trial’s foundation—its design, research question, and endpoints—determines the validity of its results. If a new drug is 50% better, we must ask: Better at what? For whom? And how was success measured? A flawed foundation invalidates any subsequent findings, no matter how impressive the statistics may seem.

The PICO framework is an invaluable tool for deconstructing a study’s research question. It organizes the core components: Population (who was studied), Intervention (what was tested), Comparison (what it was compared to), and Outcome (what was measured). For example, a PICO question could be: “In adult patients with type 2 diabetes (Population), does a new SGLT2 inhibitor (Intervention) compared to placebo (Comparison) reduce the risk of major adverse cardiovascular events (Outcome)?” This structure provides a clear roadmap for interpreting any trial. When we approach clinical data interpretation, we are essentially reverse-engineering the researchers’ thinking to see if their approach was sound.

Study Design: The First Clue to Data Quality

A study’s design is the architecture of the evidence and reveals how much trust to place in its results. The hierarchy of evidence places systematic reviews and meta-analyses of RCTs at the top, followed by individual RCTs, and then various types of observational studies.

Randomized Controlled Trials (RCTs) are the gold standard for determining causality. By randomly assigning patients to treatment or control groups, researchers minimize selection bias and ensure that, on average, the groups are comparable at the start of the trial. This allows them to attribute any observed differences in outcomes to the intervention itself. For instance, without randomization in a heart medication trial, doctors might unconsciously give the new drug to healthier patients, creating the illusion that the drug is more effective than it truly is. There are different RCT designs, such as parallel-group trials, where each group receives a different treatment simultaneously, and crossover trials, where each participant receives all treatments in a sequence, serving as their own control. Crossover designs are efficient but are only suitable for chronic, stable conditions and treatments with no carry-over effects.

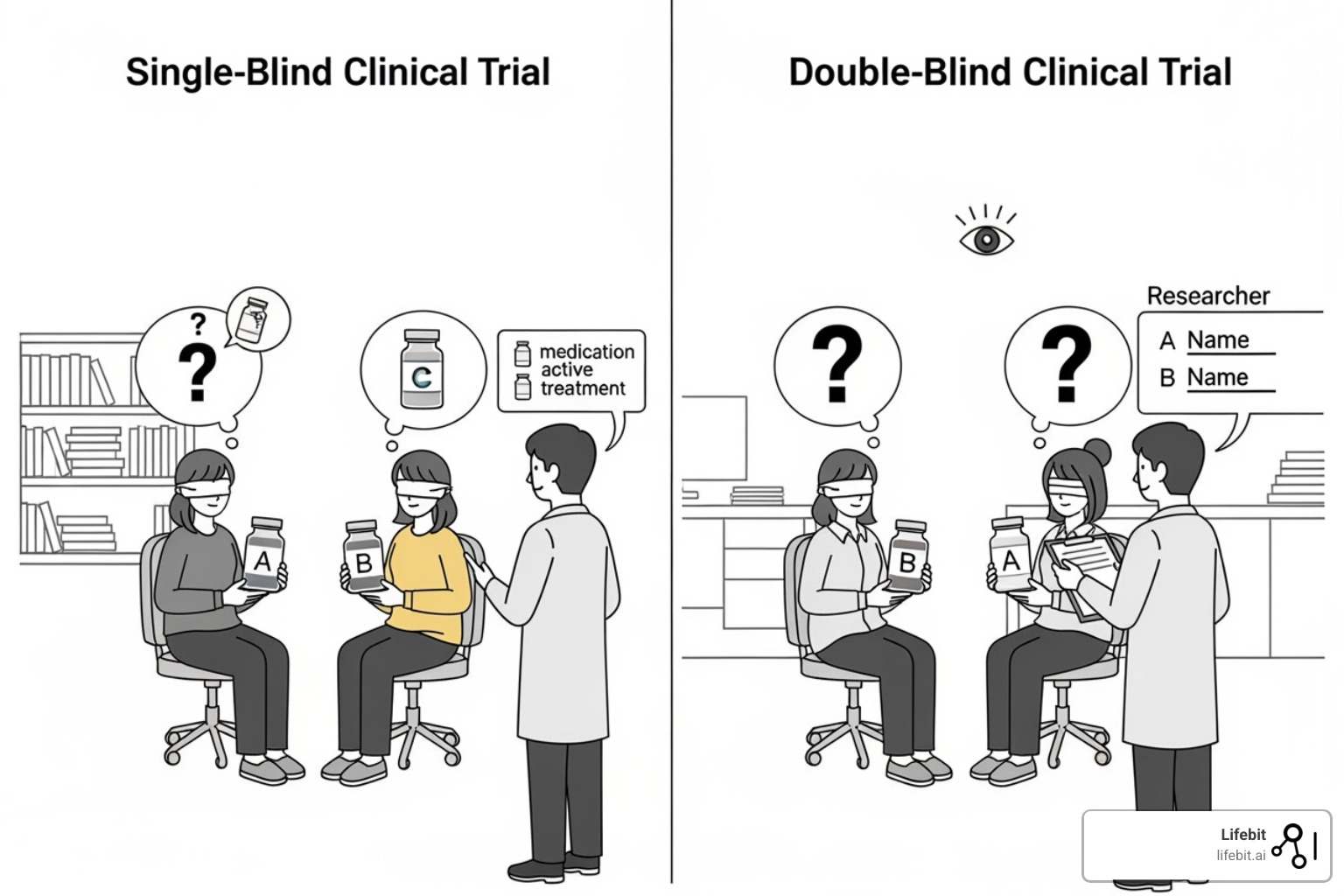

Blinding adds another critical layer of protection against bias. In a single-blind study, patients don’t know which treatment they are receiving, which helps control for the placebo effect. In a double-blind study, neither the patients nor the clinicians/researchers interacting with them know the treatment assignment. This prevents both the placebo effect and unconscious bias from researchers (e.g., paying closer attention to patients on the new drug) from influencing outcomes. However, blinding can be difficult to maintain, especially if a drug has noticeable side effects. A 2007 analysis found that only 2% of studies formally tested their blinding, and nearly half of those found it had failed, reminding us of these real-world limitations.

Observational studies examine evidence after the fact, without the researcher assigning the intervention. They are crucial for understanding real-world patterns, studying rare outcomes, and investigating questions where an RCT would be unethical. However, they are more susceptible to biases and confounding variables.

- Cohort Studies: These studies follow a group (cohort) of individuals over time to see who develops an outcome. They can be prospective (following a group into the future) or retrospective (using existing records to look back in time). The Framingham Heart Study is a famous prospective cohort study that has identified major risk factors for cardiovascular disease.

- Case-Control Studies: These studies start with the outcome and look backward. They compare a group of people with a disease (cases) to a similar group without the disease (controls) to identify past exposures that may have contributed to the outcome. They are efficient for studying rare diseases but are prone to recall bias.

- Cross-Sectional Studies: These studies are a snapshot in time, measuring both exposure and outcome simultaneously. They are good for determining prevalence but cannot establish a temporal relationship (i.e., which came first, the exposure or the outcome).

A well-executed observational study can still be more trustworthy than a poorly designed RCT.

Objectives and Endpoints: Defining What Success Looks Like

Before analyzing numbers, we must understand the trial’s definition of success by examining its endpoints.

Primary endpoints represent the main question the study was designed and powered to answer. The entire trial’s credibility rests on this outcome. Secondary endpoints explore other interesting but less central questions. Findings for secondary endpoints should be considered hypothesis-generating rather than conclusive, as the study was not optimized to test them.

Composite endpoints bundle multiple outcomes into a single measure, such as a composite of heart attack, stroke, and cardiovascular death. While this increases statistical efficiency by capturing more events, it can obscure important details. Did the treatment prevent non-fatal heart attacks but increase the risk of fatal strokes? A single composite result might not clarify this, so it’s crucial to examine the individual components.

Surrogate endpoints are indirect measures, like cholesterol levels or tumor size, that are thought to predict a real clinical outcome, like heart attacks or survival. They allow for faster and smaller trials but can be misleading if their correlation to real patient outcomes is weak or unproven. History is filled with examples of drugs that improved a surrogate endpoint but failed to improve, or even worsened, clinically meaningful outcomes. We must always prioritize clinically relevant outcomes—such as mortality, morbidity, and quality of life—that matter to patients’ lives.

The key question is always: “Does this endpoint actually matter to patients?” If a treatment improves lab values but doesn’t help people feel better, function better, or live longer, its real-world value is questionable.

Further reading: How to specify objectives and outcomes

The Core of Analysis: Efficacy, Safety, and Significance

Once we understand the trial’s foundation, we can dive into the results to answer three fundamental questions: Does the treatment work (efficacy)? Is it safe? And are the findings meaningful (significance)? This stage requires a careful blend of statistical rigor and sound clinical judgment.

The Core of Clinical Data Interpretation: Statistical vs. Clinical Significance

The distinction between statistical and clinical significance is one of the most critical concepts in clinical data interpretation.

Statistical significance addresses the role of chance. It is often indicated by a p-value, which represents the probability of observing the study result (or a more extreme one) if there were truly no difference between the treatment groups. A conventional threshold for significance is p<0.05, meaning that a result at least this extreme would be expected less than 5% of the time if the treatment had no real effect. This is not the same as a 5% probability that the finding is a fluke, although it is often misread that way. Confidence Intervals (CIs) provide complementary information. A 95% CI gives a range of plausible values for the true effect: if the study were repeated many times, about 95% of the intervals constructed this way would contain the true value. If the 95% CI for a difference between two groups does not include zero (for absolute differences) or one (for ratios), the result is statistically significant at the p<0.05 level. For example, when 95% confidence intervals for the means of two groups don’t overlap, it’s strong evidence of a real difference (P<0.006). The converse does not hold: overlapping intervals do not, by themselves, rule out a statistically significant difference.

However, statistical significance doesn’t automatically mean the results matter to patients. As researcher Grzegorz S. Nowakowski notes, “P values don’t tell you anything about clinical benefit. They only tell you how likely your results are to be true and not a play of chance.” A massive study could find a new drug lowers blood pressure by 1 mmHg more than a placebo with a p-value of <0.001. This result is statistically significant (highly unlikely to be due to chance) but clinically trivial.

Clinical significance is about whether the effect makes a meaningful difference in a patient’s life. This is assessed using the effect size (the magnitude of the difference) and comparing it to the minimal clinically important difference (MCID)—the smallest change in an outcome that a patient would perceive as beneficial. Effect sizes like Absolute Risk Reduction (ARR) or Relative Risk (RR) quantify the treatment’s impact. This is why patient-reported outcomes (PROs), which directly capture the patient’s experience of their health and symptoms, are so valuable for assessing clinical significance.
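
To make the blood pressure example concrete, here is a minimal Python sketch. The standard deviation (15 mmHg), the group size (20,000 per arm), and the MCID (5 mmHg) are illustrative assumptions rather than figures from any trial, and the normal approximation is a simplification.

```python
import math

def two_sample_z(mean_diff, sd, n_per_group):
    """Z statistic, two-sided p-value, and 95% CI for a difference in means.

    Assumes equal SD and equal group sizes, and uses a normal approximation.
    """
    se = sd * math.sqrt(2.0 / n_per_group)       # standard error of the difference
    z = mean_diff / se
    p = math.erfc(abs(z) / math.sqrt(2.0))       # two-sided p-value under the normal curve
    ci = (mean_diff - 1.96 * se, mean_diff + 1.96 * se)
    return z, p, ci

# Hypothetical large trial: 1 mmHg extra reduction versus placebo
ASSUMED_MCID_MMHG = 5.0                          # assumption for illustration only
z, p, ci = two_sample_z(mean_diff=1.0, sd=15.0, n_per_group=20_000)

statistically_significant = p < 0.05
clinically_meaningful = ci[0] >= ASSUMED_MCID_MMHG   # whole CI at or above the MCID

print(f"z = {z:.2f}, p = {p:.2e}, 95% CI = ({ci[0]:.2f}, {ci[1]:.2f}) mmHg")
print("Statistically significant:", statistically_significant)
print("Clinically meaningful under the assumed MCID:", clinically_meaningful)
```

Under these assumptions the p-value is far below 0.001, yet the entire confidence interval sits well under the assumed MCID, which is exactly the "significant but trivial" pattern described above.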

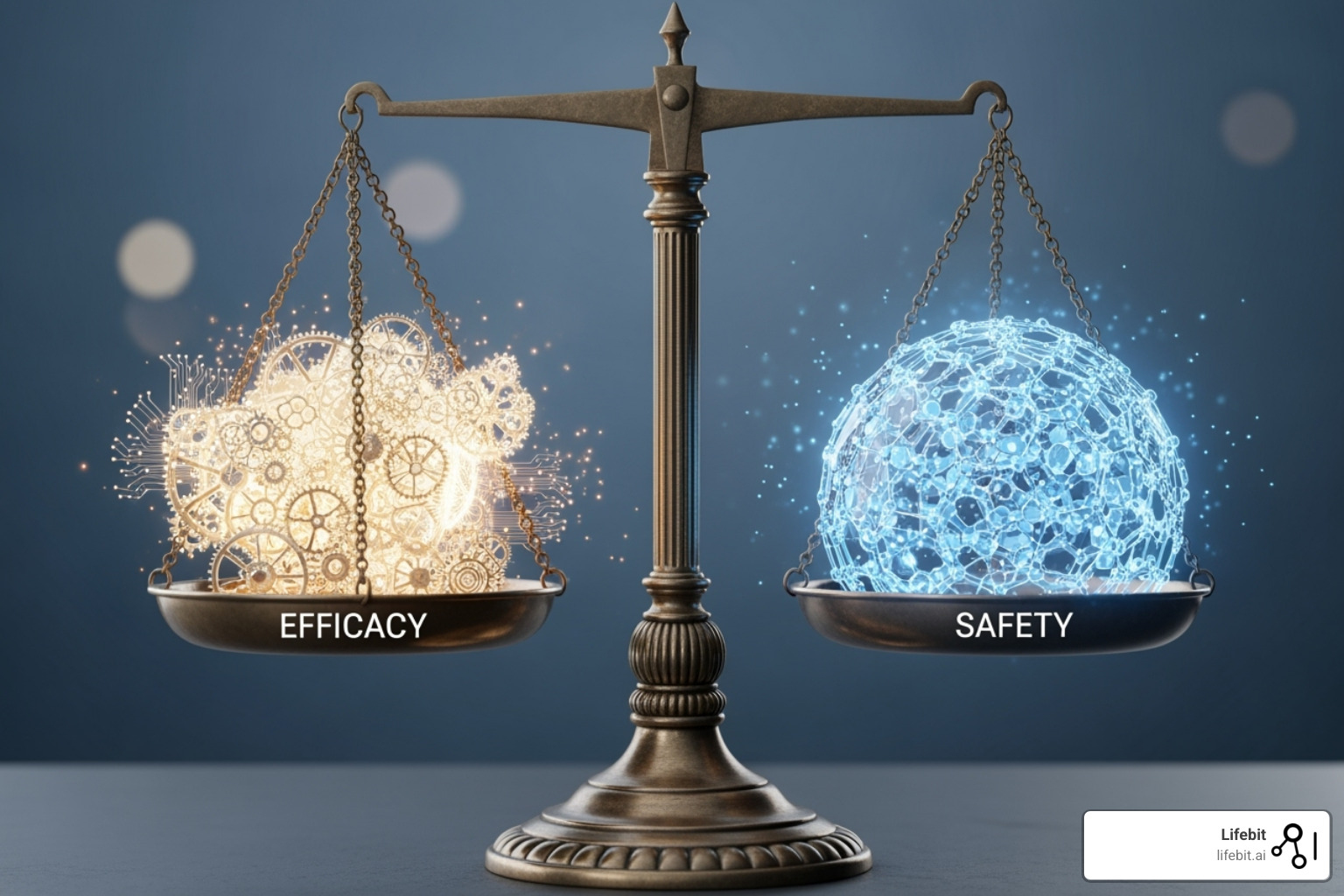

Balancing Efficacy and Safety: The Ultimate Goal

Every treatment decision is a negotiation between potential benefits and potential risks. A robust interpretation requires a comprehensive analysis of both.

Safety data involves systematically tracking Adverse Events (AEs)—any untoward medical occurrence in a patient—and Serious Adverse Events (SAEs), which are life-threatening, require hospitalization, or result in disability or death. These events are often classified using standardized systems like the Common Terminology Criteria for Adverse Events (CTCAE), which grades severity from 1 (mild) to 5 (death). This standardization allows for consistent reporting and comparison across studies. Since 2008, regulators like the FDA and EMA have required dedicated cardiovascular safety evaluations for new diabetes drugs, recognizing that solving one problem while creating another isn’t progress.

Two key metrics help quantify this benefit-risk balance in absolute terms:

- The Number Needed to Treat (NNT) is the number of patients who need to receive a treatment for a specific period for one person to experience a beneficial outcome. It is calculated as the inverse of the Absolute Risk Reduction (1/ARR). An NNT of 11 means treating 11 patients prevents one adverse outcome. Lower NNTs are better.

- The Number Needed to Harm (NNH) is the number of patients who need to receive a treatment for one person to experience a specific harmful outcome. It is calculated as the inverse of the Absolute Risk Increase (1/ARI). Higher NNHs are better.

Comparing NNT and NNH provides a clear, tangible basis for clinical decision-making and patient discussions. For example, if a drug has an NNT of 20 for preventing a heart attack and an NNH of 100 for causing a major bleed, a clinician and patient can weigh these probabilities. The art of clinical data interpretation lies in this thoughtful assessment. A treatment that works in a trial but has intolerable side effects has little real-world value.
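
The NNT of 20 and NNH of 100 in that example can be reproduced with a few lines of Python. The event counts below are hypothetical, chosen only so that the arithmetic matches the example; the function names are ours.

```python
def risk(events, n):
    """Proportion of patients in an arm who experienced the event."""
    return events / n

def number_needed(risk_difference):
    """Reciprocal of an absolute risk difference (NNT from ARR, NNH from ARI)."""
    if risk_difference <= 0:
        raise ValueError("risk difference must be positive")
    return 1.0 / risk_difference

# Hypothetical counts per 1,000 patients in each arm
arr = risk(100, 1000) - risk(50, 1000)   # heart attacks: control minus treated
ari = risk(20, 1000) - risk(10, 1000)    # major bleeds: treated minus control

nnt = number_needed(arr)
nnh = number_needed(ari)

print(f"ARR = {arr:.3f}, NNT = {nnt:.0f}")
print(f"ARI = {ari:.3f}, NNH = {nnh:.0f}")
print(f"Heart attacks prevented per major bleed caused: {nnh / nnt:.1f}")
```

In practice NNT and NNH are usually reported rounded to whole patients and tied to a specific follow-up period, since the same drug can have a very different NNT at one year and at five.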

| Metric Type | Key Efficacy Metrics | Key Safety Metrics |
|---|---|---|
| Absolute | Absolute Risk Reduction (ARR), Number Needed to Treat (NNT) | Absolute Risk Increase (ARI), Number Needed to Harm (NNH) |
| Relative | Relative Risk (RR), Odds Ratio (OR), Hazard Ratio (HR) | Relative Risk (RR) of Adverse Events, Odds Ratio (OR) of Adverse Events |
| Other | Confidence Intervals (CIs), P-values, Effect Size, Patient-Reported Outcomes (PROs) | Frequency and Severity of Adverse Events (AEs), Serious Adverse Events (SAEs), Laboratory Abnormalities, Discontinuation Rates due to AEs |
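
The relative measures in the table can be computed from a simple 2x2 table of events. The sketch below uses the common log-scale (Wald-type) approximation for the 95% CI; the counts are hypothetical, and other interval methods exist.

```python
import math

def relative_risk(events_t, n_t, events_c, n_c):
    """Relative risk (treated vs control) with an approximate 95% CI."""
    rr = (events_t / n_t) / (events_c / n_c)
    se_log = math.sqrt(1 / events_t - 1 / n_t + 1 / events_c - 1 / n_c)
    lower = math.exp(math.log(rr) - 1.96 * se_log)
    upper = math.exp(math.log(rr) + 1.96 * se_log)
    return rr, lower, upper

def odds_ratio(events_t, n_t, events_c, n_c):
    """Odds ratio (treated vs control) with an approximate 95% CI."""
    a, b = events_t, n_t - events_t      # treated: events, non-events
    c, d = events_c, n_c - events_c      # control: events, non-events
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(or_) - 1.96 * se_log)
    upper = math.exp(math.log(or_) + 1.96 * se_log)
    return or_, lower, upper

# Hypothetical trial: 50/1000 events on treatment, 100/1000 on control
rr, rr_lo, rr_hi = relative_risk(50, 1000, 100, 1000)
or_, or_lo, or_hi = odds_ratio(50, 1000, 100, 1000)

print(f"RR = {rr:.2f} (95% CI {rr_lo:.2f} to {rr_hi:.2f})")
print(f"OR = {or_:.2f} (95% CI {or_lo:.2f} to {or_hi:.2f})")
```

Note how a relative risk of 0.50 sounds dramatic ("halves the risk"), while the same hypothetical data correspond to an absolute risk reduction of only 5 percentage points. Reading both columns of the table together guards against that framing effect.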

A Picture is Worth a Thousand Data Points: Interpreting Trial Figures

Figures in research papers are powerful storytelling tools that make the key patterns in clinical data visible at a glance. They can summarize complex information more effectively than text, but they can also mislead if not interpreted correctly. Studies have shown that many published figures have presentation flaws. Understanding the most common types of figures (flow diagrams, Kaplan-Meier plots, forest plots, and repeated measures plots) is essential for effective clinical data interpretation. For a fuller treatment, see “How to interpret figures in clinical trial reports.”

The Patient Journey: Flow Diagrams

Based on the CONSORT (Consolidated Standards of Reporting Trials) guidelines, flow diagrams are mandatory for RCTs and tell the complete story of participant flow, from initial screening to final analysis. They are often the first figure in a clinical trial report.

These diagrams answer critical questions: How many participants were assessed for eligibility, excluded (and why), and randomized? How many were allocated to each group, and how many completed the study versus those lost to follow-up or who discontinued the intervention? A high or unequal dropout rate (attrition) is a major red flag, as the reasons for leaving (e.g., side effects, lack of efficacy) could systematically bias the results. The diagram should also specify the analysis population, such as Intention-to-Treat (ITT), which includes all randomized patients in their original groups regardless of adherence, preserving the benefits of randomization.

Survival Over Time: Kaplan-Meier Plots

Kaplan-Meier plots are essential for visualizing time-to-event data, such as time to death, disease recurrence, or any other dichotomous event. They are ubiquitous in oncology and cardiology trials.

To read these plots, observe the y-axis (probability of being event-free) and the x-axis (time). A steeper drop in a curve indicates more events happening more quickly. The small vertical ticks on the curves, known as censoring marks, represent participants who were lost to follow-up or had not experienced the event by the study’s end. The Hazard Ratio (HR), often provided with the plot, gives a numerical comparison of the event rates between groups over the entire study period. An HR less than 1 means events occurred at a lower rate in the intervention group, which favors the intervention when the event is a harmful one such as death or recurrence. Look for early, clear, and sustained separation between the treatment and control group curves. The log-rank test is typically used to generate a p-value for the difference between the curves.
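
To see where the steps and censoring marks on such a curve come from, here is a from-scratch sketch of the Kaplan-Meier (product-limit) estimate for a single group. The follow-up times are invented for illustration, and a real analysis would normally rely on an established survival analysis package rather than hand-rolled code.

```python
def kaplan_meier(times, events):
    """Kaplan-Meier survival estimate for one group.

    times  : follow-up time for each participant
    events : 1 if the event was observed at that time, 0 if censored
    Returns a list of (time, survival_probability) at each event time.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_events = 0
        n_leaving = 0
        # Gather everyone whose follow-up ends at time t
        while i < len(data) and data[i][0] == t:
            n_events += data[i][1]
            n_leaving += 1
            i += 1
        if n_events > 0:
            # The curve steps down only when an event occurs
            survival *= 1 - n_events / at_risk
            curve.append((t, survival))
        # Censored participants leave the risk set without a step
        at_risk -= n_leaving
    return curve

# Hypothetical follow-up in months; 0 marks a censored participant
times = [2, 3, 3, 5, 8, 8, 10, 12]
events = [1, 1, 0, 1, 0, 1, 0, 0]

curve = kaplan_meier(times, events)
for t, s in curve:
    print(f"month {t:>2}: estimated event-free probability {s:.3f}")
```

Each censored participant shrinks the risk set without moving the curve, so later steps are based on fewer people and are less certain. That is one reason the right-hand tail of a Kaplan-Meier plot deserves extra caution.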

Comparing Effects: Forest Plots

Forest plots excel at visualizing and summarizing results from multiple studies (in a meta-analysis) or from different subgroups within a single study.

Each horizontal line represents a single study or subgroup. The square in the middle of the line is the effect estimate (e.g., an Odds Ratio or Relative Risk), with its size often proportional to the study’s weight, which reflects the precision of its estimate and therefore depends largely on sample size and number of events. The horizontal line itself is the confidence interval. The central vertical line represents no effect (e.g., at a value of 1 for ratios). If a confidence interval crosses this line, the result for that study/subgroup is not statistically significant. The diamond at the bottom represents the overall pooled effect from all studies combined; its width represents the confidence interval for this summary estimate. Forest plots also help assess heterogeneity (the degree of variation between study results), often quantified by the I² statistic. High heterogeneity might suggest that pooling the results is inappropriate.
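
The diamond and the I² value can be illustrated with a small fixed-effect, inverse-variance pooling sketch. The three relative risks and standard errors are hypothetical, and a fixed-effect model is only one option; many meta-analyses use random-effects models instead.

```python
import math

def pool_fixed_effect(log_effects, std_errors):
    """Inverse-variance fixed-effect pooling on the log scale.

    Returns the pooled log effect, its standard error, Cochran's Q, and I² (%).
    """
    weights = [1 / se ** 2 for se in std_errors]          # more precise studies weigh more
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_effects)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_effects))
    df = len(log_effects) - 1
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, pooled_se, q, i_squared

# Hypothetical studies: relative risks with standard errors of log(RR)
relative_risks = [0.80, 0.70, 0.90]
std_errors = [0.10, 0.20, 0.15]

log_rr = [math.log(rr) for rr in relative_risks]
pooled, pooled_se, q, i_squared = pool_fixed_effect(log_rr, std_errors)

pooled_rr = math.exp(pooled)
ci_low = math.exp(pooled - 1.96 * pooled_se)
ci_high = math.exp(pooled + 1.96 * pooled_se)

print(f"Pooled RR = {pooled_rr:.2f} (95% CI {ci_low:.2f} to {ci_high:.2f})")
print(f"Cochran's Q = {q:.2f}, I² = {i_squared:.0f}%")
```

The pooled estimate sits closest to the most precise study, and its interval is narrower than any single study's, which is why the diamond is typically the tightest shape on the plot.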

Tracking Changes: Repeated Measures Plots

Repeated measures plots are used to show how a continuous variable (like blood pressure, pain score, or a lab value) changes over time for different treatment groups.

These plots display the mean score for each group at different time points, with error bars (usually representing 95% CIs or standard error) indicating the variability of the data. When interpreting, look for a clear and sustained separation between the treatment group lines over time. Significant overlap in the error bars may suggest a lack of meaningful difference between the groups at that time point. These plots are powerful because they reveal not just if a treatment works, but also the trajectory of its effect—when it starts working, if the effect is sustained, and if it wanes over time.
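
The points and error bars on such a plot are simple to compute. The sketch below derives a mean and an approximate 95% CI per group and visit, then checks whether the intervals overlap. The blood pressure readings are invented, and using 1.96 standard errors is a normal approximation that is rough for groups this small.

```python
import math
import statistics

def mean_ci(values, z=1.96):
    """Mean with an approximate 95% CI based on the standard error."""
    m = statistics.mean(values)
    se = statistics.stdev(values) / math.sqrt(len(values))
    return m, m - z * se, m + z * se

def intervals_overlap(a, b):
    """True if two (mean, lower, upper) intervals share any values."""
    return a[1] <= b[2] and b[1] <= a[2]

# Hypothetical systolic blood pressure (mmHg) by visit
treatment = {"week 0": [150, 148, 152, 149, 151], "week 12": [138, 136, 140, 137, 139]}
control = {"week 0": [150, 149, 151, 148, 152], "week 12": [147, 146, 149, 145, 148]}

overlap_by_visit = {}
for visit in treatment:
    t = mean_ci(treatment[visit])
    c = mean_ci(control[visit])
    overlap_by_visit[visit] = intervals_overlap(t, c)
    print(f"{visit}: treatment {t[0]:.1f} ({t[1]:.1f} to {t[2]:.1f}), "
          f"control {c[0]:.1f} ({c[1]:.1f} to {c[2]:.1f}), "
          f"overlap: {overlap_by_visit[visit]}")
```

Overlap is only a visual heuristic. A formal comparison would use a model suited to repeated measurements on the same patients, and it matters whether the bars show CIs or standard errors, since standard error bars are roughly half as wide.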

Mastering Advanced Clinical Data Interpretation

Advanced clinical data interpretation moves beyond accepting the authors’ conclusions at face value. It requires asking harder questions: Will this work for my specific patients? Can I truly trust this data? What subtle biases might be lurking beneath the surface? This is about developing a healthy skepticism and examining the evidence with a critical, forensic eye.

Beyond the Average: The Importance of Subgroup Analysis and Generalizability

A trial’s main result represents the average effect across a potentially diverse population. However, the “average patient” in a trial rarely exists in the clinic. An 82-year-old woman with multiple comorbidities will likely respond differently to a treatment than the 55-year-old healthy male who may have been overrepresented in the study. This is the challenge of generalizability (also known as external validity).

Subgroup analysis, which examines treatment effects in specific subsets of the trial population (e.g., by age, sex, disease severity, or biomarker status), can offer clues. However, these analyses must be interpreted with extreme caution. They have less statistical power due to smaller sample sizes and can generate spurious findings due to multiple testing. When evaluating a subgroup claim, look for these signs of credibility: the subgroup was specified before the trial began (pre-specified), there is a strong biological rationale for the difference, the effect is large and supported by a statistically significant test for interaction (ideally after accounting for multiple comparisons), and the finding is consistent across other studies.
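
The multiple testing problem is easy to quantify. Assuming independent tests each run at a significance level of 0.05 (a simplification, since subgroups in a real trial often overlap), the chance of at least one false positive grows quickly with the number of subgroups examined. The Bonferroni correction shown is one simple, conservative adjustment among several.

```python
def familywise_error_rate(n_tests, alpha=0.05):
    """Probability of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** n_tests

def bonferroni_threshold(n_tests, alpha=0.05):
    """Per-test significance threshold that keeps the overall rate near alpha."""
    return alpha / n_tests

for n in (1, 5, 10, 20):
    print(f"{n:>2} subgroup tests: "
          f"chance of a spurious 'significant' result = {familywise_error_rate(n):.0%}, "
          f"Bonferroni threshold = {bonferroni_threshold(n):.4f}")
```

With ten subgroup tests, there is roughly a 40% chance that at least one looks significant purely by chance, which is why an isolated subgroup finding without pre-specification or a plausible mechanism deserves skepticism.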

Key questions to ask when evaluating generalizability:

- Are the study’s inclusion and exclusion criteria so restrictive that the patient population is not representative of those seen in routine practice?

- Were all clinically meaningful outcomes, including long-term effects and quality of life, considered?

- Is the intervention feasible to implement in my clinical setting, considering cost, complexity, and required resources?

- Do the benefits outweigh the risks and costs for my specific patient population, not just the average trial participant?

Long-term studies like the Framingham Heart Study and large-scale analyses like the CVD-REAL study (over 300,000 patients) provide invaluable data on how treatments work across diverse, real-world populations, helping to bridge the generalizability gap.

Ensuring Trust: Reporting Standards and Protocols

Transparent reporting is the bedrock of trust in clinical research. Reporting guidelines and trial protocols are our assurance of quality and completeness.

- CONSORT (Consolidated Standards of Reporting Trials) provides a 25-item checklist for reporting randomized trials.

- SPIRIT (Standard Protocol Items: Recommendations for Interventional Trials) focuses on the essential content of a trial protocol.

- STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) offers checklists for reporting observational studies.

- Good Clinical Practice (GCP) is an international ethical and scientific quality standard for designing, conducting, recording, and reporting trials that involve human subjects.

Adherence to these standards signals a commitment to transparency and allows readers to critically appraise the research fully.

Navigating the Pitfalls: Bias and Confounding

Even well-designed trials can suffer from bias (systematic error) and confounding (mixing of effects), which can distort results and lead to incorrect conclusions.

- Selection bias: Occurs when the groups being compared differ systematically from the start. Proper randomization with allocation concealment is the key defense.

- Performance bias: Happens when participants, clinicians, or researchers behave differently because they know the treatment allocation. For example, a doctor might provide more attentive care to a patient on a novel therapy. This is why blinding is crucial.

- Detection bias: Arises when the assessment of outcomes differs between groups. A researcher aware of the treatment might probe more deeply for side effects in the intervention group. Blinding the outcome assessors is the solution.

- Attrition bias: Occurs when participants drop out of the study unequally between groups. If patients on a new drug drop out due to side effects, an analysis of only those who remain will make the drug appear safer and more effective than it is.

- Reporting bias (or publication bias): The well-documented tendency for positive, statistically significant trials to be published more often than negative or inconclusive ones. This skews the available literature. Always check trial registries like ClinicalTrials.gov to see if the primary endpoints were changed or if the study was completed but never published.

Confounding occurs when a third factor is associated with both the intervention and the outcome, creating a spurious association. The classic example is the observed link between coffee drinking and heart disease, which was confounded by smoking (smokers were more likely to drink coffee and also more likely to have heart disease). In observational studies, statistical techniques like propensity score matching can help control for known confounders by creating pseudo-randomized groups that are balanced on measured characteristics.
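
The coffee and smoking example can be worked through numerically. The counts below are entirely hypothetical and constructed so that coffee has no effect within either smoking stratum. Stratifying and pooling with the Mantel-Haenszel method, a simpler technique than the propensity score matching mentioned above, is enough to make the spurious association disappear.

```python
def risk_ratio(events_exposed, n_exposed, events_unexposed, n_unexposed):
    """Risk in the exposed group divided by risk in the unexposed group."""
    return (events_exposed / n_exposed) / (events_unexposed / n_unexposed)

def mantel_haenszel_rr(strata):
    """Mantel-Haenszel pooled risk ratio across strata.

    Each stratum is (events_exposed, n_exposed, events_unexposed, n_unexposed).
    """
    numerator = 0.0
    denominator = 0.0
    for a, n1, c, n0 in strata:
        total = n1 + n0
        numerator += a * n0 / total
        denominator += c * n1 / total
    return numerator / denominator

# Hypothetical cohort: (heart disease cases, group size) for coffee vs no coffee
strata = {
    "smokers": (160, 800, 40, 200),       # 20% risk with or without coffee
    "non_smokers": (10, 200, 40, 800),    # 5% risk with or without coffee
}

# Crude analysis ignores smoking and simply adds the strata together
crude = risk_ratio(
    sum(s[0] for s in strata.values()), sum(s[1] for s in strata.values()),
    sum(s[2] for s in strata.values()), sum(s[3] for s in strata.values()),
)
adjusted = mantel_haenszel_rr(strata.values())

print(f"Crude RR for coffee: {crude:.2f}")
for name, s in strata.items():
    print(f"RR among {name}: {risk_ratio(*s):.2f}")
print(f"Smoking-adjusted (Mantel-Haenszel) RR: {adjusted:.2f}")
```

The crude analysis suggests coffee drinkers have about twice the risk, yet the risk ratio is 1.0 in both strata. The apparent effect arises only because most coffee drinkers in this made-up cohort are smokers. Adjustment of this kind works only for confounders that were measured, which is the key limitation relative to randomization.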

Finally, human cognitive biases, like confirmation bias (favoring data that supports our existing beliefs), and deliberate “spin” in reporting can mislead even experienced readers. Developing a systematic, critical appraisal habit is the best defense against these pitfalls.

The New Frontier: RCTs, Real-World Studies, and AI

The landscape of clinical data interpretation is undergoing a profound shift. While the traditional randomized controlled trial remains a cornerstone, it is now being complemented by Real-World Data (RWD) and Real-World Evidence (RWE). Powering the analysis of both is the rise of Artificial Intelligence (AI), which is transforming how we generate and interpret medical information. For background, see “Interpretation of Real-World Clinical Data.”

RCTs vs. Real-World Studies (RWS): A Complementary Relationship

Think of the relationship between RCTs and RWS this way: RCTs show whether a treatment can work under ideal, controlled conditions, while RWS show whether it does work in the messy, complex reality of everyday clinical practice.

RCTs are like testing a high-performance car on a pristine, closed racetrack. They maximize internal validity, meaning we can be highly confident that the observed results are due to the treatment and not other factors. However, they often use strict inclusion/exclusion criteria, leading to a homogenous patient population that may not reflect the broader community of patients with multiple health conditions, variable adherence, and diverse backgrounds. This can limit their external validity or generalizability.

This is where Real-World Studies are invaluable. By analyzing RWD from sources like electronic health records (EHRs), insurance claims databases, patient registries, and even data from wearable devices, RWS can generate RWE on treatment effectiveness and safety in vast, heterogeneous populations. Long-term observational studies like the Framingham Heart Study have revealed crucial insights into chronic disease that short, controlled trials could not. Today, regulators are increasingly embracing RWE; a recent survey found that 27% of real-world studies were requested by regulatory bodies like the FDA and EMA. The massive CVD-REAL study, which analyzed data from hundreds of thousands of patients, is a prime example of how RWE can provide insights at a scale and level of diversity that traditional trials cannot match. RCTs and RWS are not competitors; they are essential partners that, when used together, provide a complete picture of a treatment’s value.

The Future is Now: Big Data and AI in Clinical Data Interpretation

The sheer volume, velocity, and variety of medical Big Data generated today is overwhelming for traditional analytical methods. This is where Artificial Intelligence and Machine Learning become indispensable allies, capable of identifying patterns and generating insights from datasets of immense scale and complexity.

At Lifebit, our federated AI platform is at the forefront of this revolution, enabling secure, real-time access to global biomedical data. The core challenge with sensitive health data is that it is often siloed and cannot be moved due to privacy, security, and regulatory constraints. Our platform overcomes this with Trusted Research Environments (TREs) and a federated architecture. This technology allows approved researchers to bring their analyses to the data, rather than moving the data to the analysis. Models are trained securely where the data resides, and only aggregated, non-identifiable results or model parameters are shared, ensuring patient privacy and compliance with regulations like HIPAA, GDPR, and GxP.
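
The general idea of bringing the analysis to the data can be shown with a toy example. This sketch is purely conceptual and is not a description of Lifebit's platform or any real API: the site names, values, and function names are all hypothetical, and a production system would add authentication, auditing, and disclosure controls such as minimum cell sizes.

```python
def local_summary(values):
    """Runs inside a site's own environment; returns aggregates only."""
    return {"n": len(values), "total": sum(values)}

def federated_mean(summaries):
    """Combines site-level aggregates without seeing any patient-level rows."""
    n = sum(s["n"] for s in summaries)
    if n == 0:
        raise ValueError("no observations across sites")
    return sum(s["total"] for s in summaries) / n

# Hypothetical patient-level values that never leave each site
site_data = {
    "hospital_a": [120, 130, 140],
    "hospital_b": [110, 150],
    "hospital_c": [125, 135, 145, 155],
}

# Only these small summaries cross the site boundary
shared = [local_summary(values) for values in site_data.values()]
overall_mean = federated_mean(shared)

print("Shared summaries:", shared)
print(f"Federated mean: {overall_mean:.2f}")
```

The federated result matches what a pooled analysis would give, even though the coordinating step only ever sees counts and sums. Federated model training follows the same principle, exchanging model parameters or gradients instead of simple totals.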

Our R.E.A.L. (Real-time Evidence & Analytics Layer) delivers dynamic insights that accelerate medical decision-making. This is especially crucial for pharmacovigilance, where AI-driven safety surveillance can analyze millions of EHR notes or claims records to identify rare but serious adverse event signals in near real-time, a process that might have taken years with traditional methods. We also leverage:

- Natural Language Processing (NLP) to unlock insights from unstructured text like clinicians’ notes, pathology reports, and scientific literature.

- Predictive analytics to build models that can forecast patient outcomes, identify high-risk individuals, or predict treatment response.

However, the use of AI is not without challenges. We must be vigilant about algorithmic bias, where an AI model trained on data from one demographic may perform poorly or unfairly for another. The “black box” problem, where complex models are not easily interpretable, also poses a challenge to trust and accountability. The future lies in developing explainable AI (XAI) and ensuring that these powerful tools are developed and deployed ethically and equitably.

Conclusion

As we conclude our comprehensive journey through clinical data interpretation, the once-daunting maze of statistics, study designs, and complex figures should now appear as a clear, navigable roadmap for evaluating clinical evidence. We have moved from foundational principles to the frontiers of modern data science, equipping you with the tools for critical appraisal.

We’ve established a solid foundation: understanding a study’s blueprint through the PICO framework and the hierarchy of evidence, from observational studies to the gold-standard RCT. We’ve delved into the core of analysis, learning to distinguish statistical significance from true clinical meaning, to balance efficacy and safety using metrics like NNT and NNH, and to interpret the visual language of data through flow diagrams, Kaplan-Meier plots, and forest plots. Most importantly, we’ve cultivated a critical mindset, learning to systematically identify pitfalls like bias, confounding, and spin, and to question the generalizability of trial results to real-world patients.

The healthcare landscape is evolving at an unprecedented pace. Traditional RCTs, while still essential, are now powerfully complemented by real-world studies, which provide a richer, more complete picture of treatment effectiveness and safety in diverse populations. Powering this evolution are AI and big data, which are opening new frontiers for analysis. This is where platforms like Lifebit’s federated AI system are game-changers, enabling faster, more comprehensive insights by securely analyzing vast, distributed datasets without compromising patient privacy. Real-time evidence generation is no longer science fiction; it is becoming a clinical reality.

Despite these incredible technological advances, the core principles of sound clinical data interpretation remain constant and more important than ever. The fundamental need for human critical thinking, clinical judgment, and ethical consideration is not replaced by technology but augmented by it. The future of medicine depends on professionals who can masterfully synthesize evidence from all sources—marrying the rigor of a clinical trial with the scale of big data—to translate this wealth of information into better, more personalized patient outcomes.

Your journey in mastering clinical data interpretation is an ongoing one. As data sources and analytical tools continue to grow in sophistication, the ability to critically evaluate, question, and correctly apply evidence will be the defining skill of the modern healthcare professional.

Discover Lifebit’s federated platform for real-time evidence generation